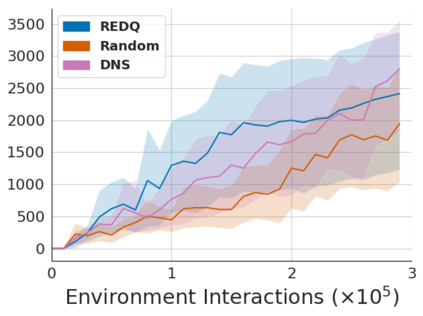

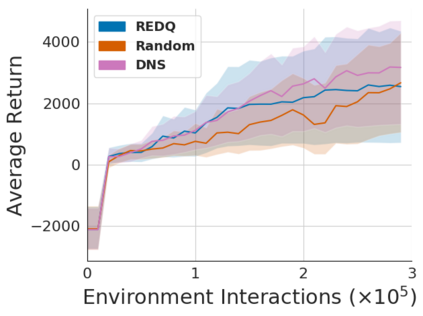

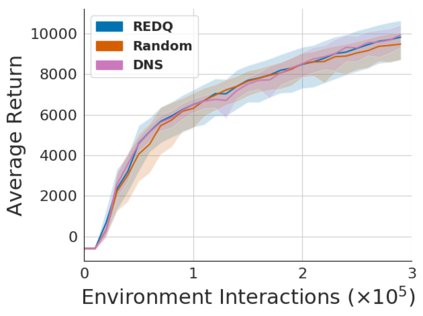

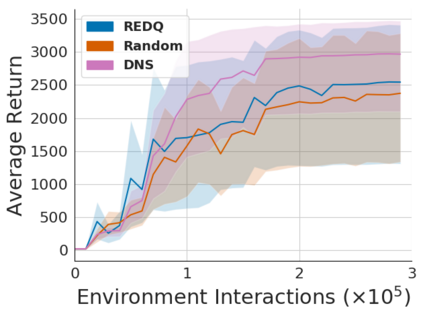

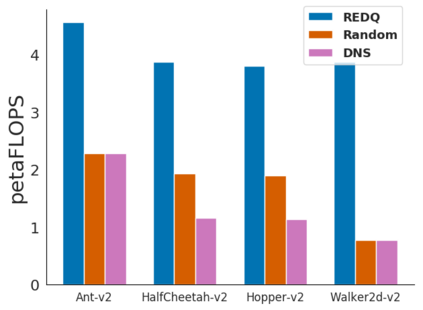

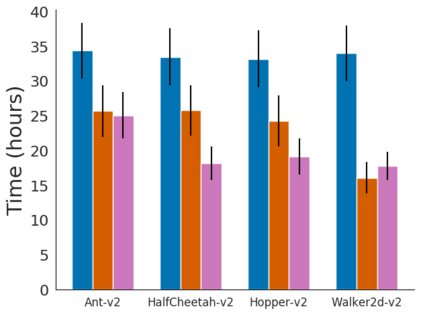

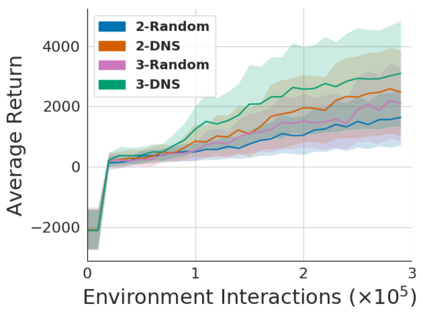

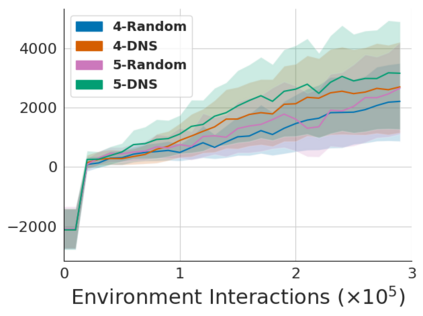

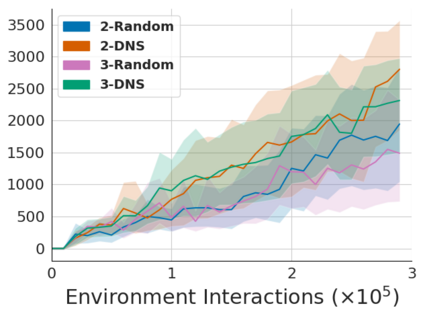

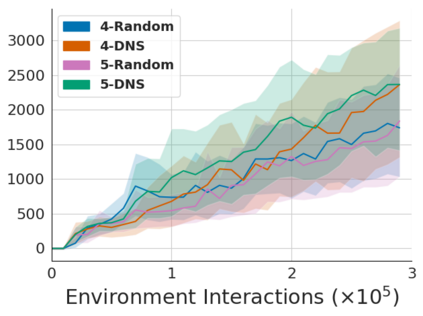

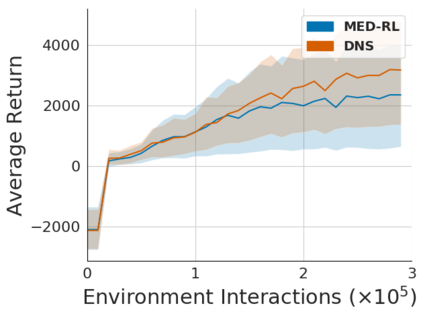

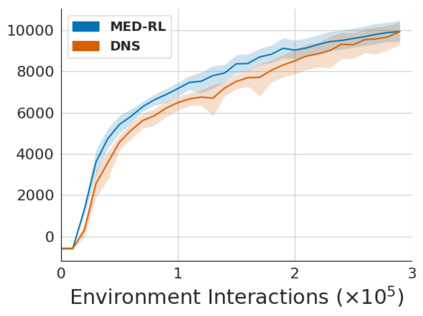

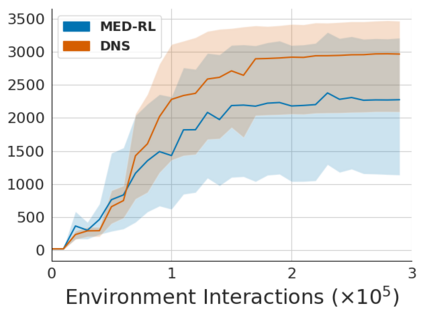

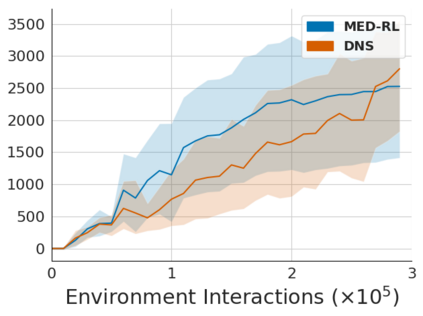

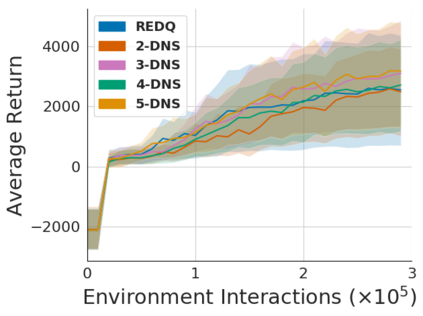

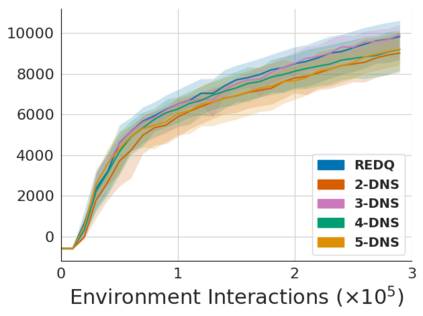

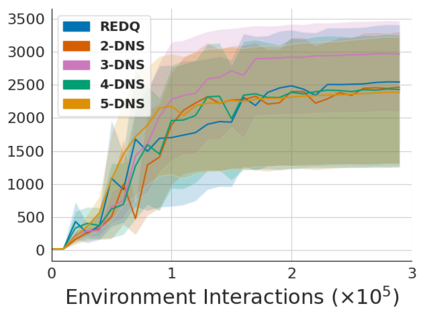

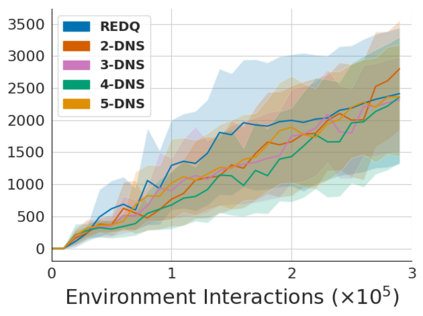

Ensembles of neural networks are becoming an important tool for advancing the state of the art in deep reinforcement learning. However, training the many networks in an ensemble has a very high computational cost, which may become a hindrance when training large-scale systems. In this paper, we propose DNS, a Determinantal Point Process based Neural Network Sampler that uses a k-DPP to sample a subset of the ensemble's neural networks for backpropagation at every training step, significantly reducing training time and computation cost. We integrate DNS into REDQ for continuous control tasks and evaluate it on MuJoCo environments. Our experiments show that DNS-augmented REDQ outperforms baseline REDQ in average cumulative reward while using less than 50% of the computation, measured in FLOPs.
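To make the sampling step concrete, the following is a minimal sketch of drawing a size-k subset of ensemble members from a k-DPP, where a subset S is chosen with probability proportional to det(L_S). It is not the paper's implementation: the abstract does not say how the kernel L is built, so the RBF kernel over per-network feature vectors, the function names `rbf_kernel` and `sample_k_dpp`, and the brute-force enumeration (practical only for small ensembles) are all assumptions made for illustration.

```python
import itertools

import numpy as np


def rbf_kernel(features, bandwidth=1.0):
    """Hypothetical similarity kernel over per-network feature vectors.

    features has shape (N, d); the result is an (N, N) PSD matrix.
    How DNS actually builds its kernel is not stated in the abstract.
    """
    diffs = features[:, None, :] - features[None, :, :]
    sq_dists = np.sum(diffs ** 2, axis=-1)
    return np.exp(-sq_dists / (2.0 * bandwidth ** 2))


def sample_k_dpp(L, k, rng):
    """Draw one size-k subset S with P(S) proportional to det(L_S).

    Enumerates every size-k subset, so it is only suitable for small
    ensembles (for example, 10 networks).
    """
    n = L.shape[0]
    subsets = list(itertools.combinations(range(n), k))
    weights = np.array([np.linalg.det(L[np.ix_(s, s)]) for s in subsets])
    # Clip tiny negative determinants caused by floating-point error.
    weights = np.clip(weights, 0.0, None)
    total = weights.sum()
    if total <= 0.0:
        # Degenerate kernel: fall back to a uniform choice over subsets.
        probs = np.full(len(subsets), 1.0 / len(subsets))
    else:
        probs = weights / total
    idx = rng.choice(len(subsets), p=probs)
    return subsets[idx]


rng = np.random.default_rng(0)
features = rng.normal(size=(10, 4))  # placeholder features, one row per network
L = rbf_kernel(features)
chosen = sample_k_dpp(L, k=3, rng=rng)
# Only the networks indexed by `chosen` would receive a gradient update this step.
```

Because det(L_S) shrinks when the selected items are similar under L, subsets of mutually dissimilar networks are favored. For larger ensembles, an eigendecomposition-based k-DPP sampler would replace the enumeration.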
