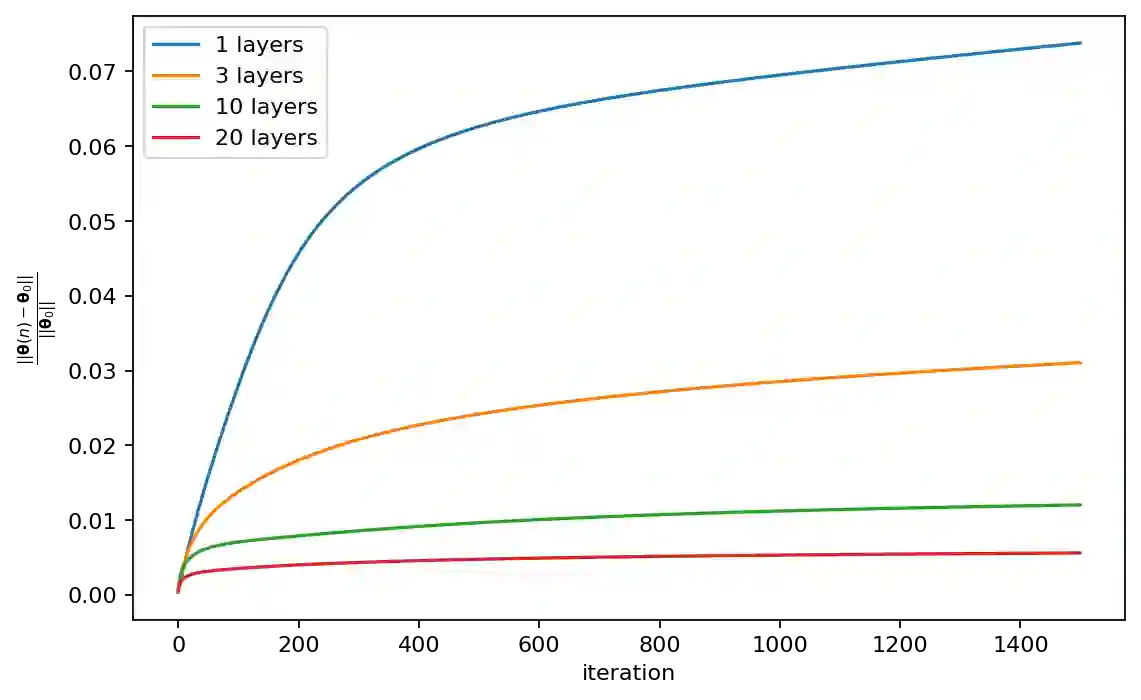

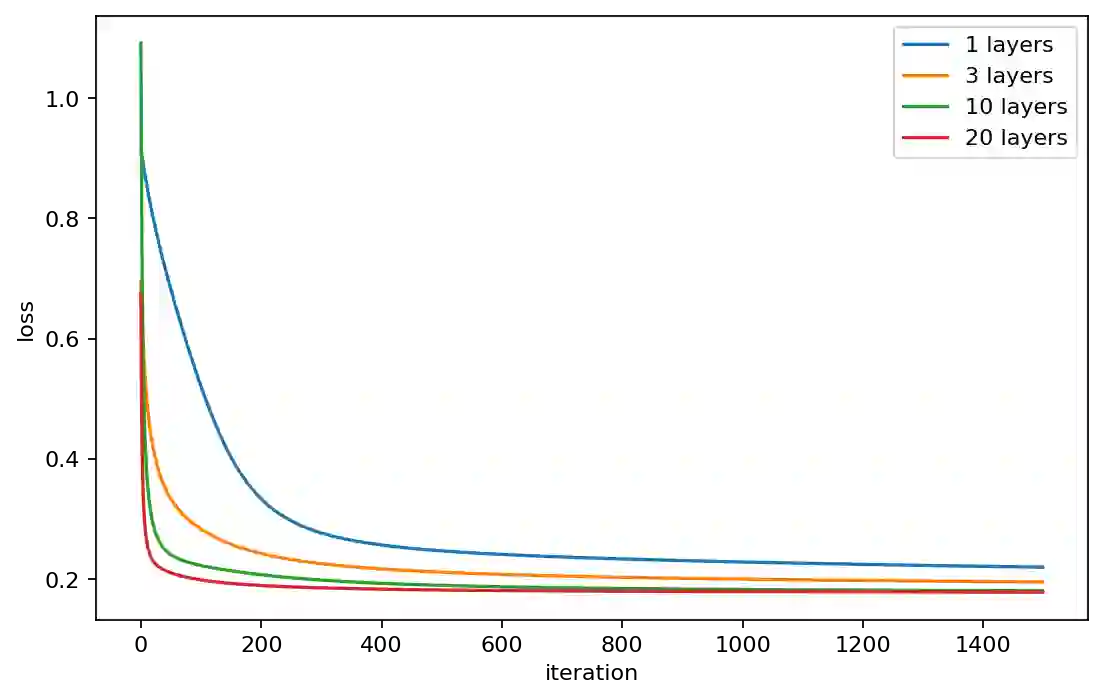

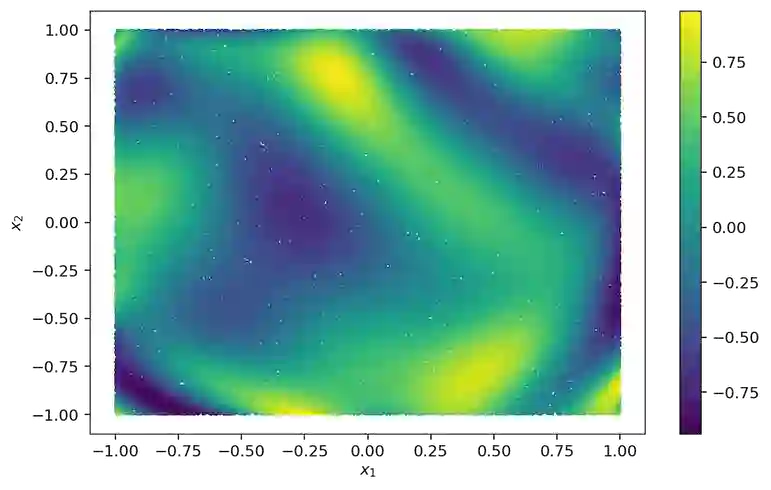

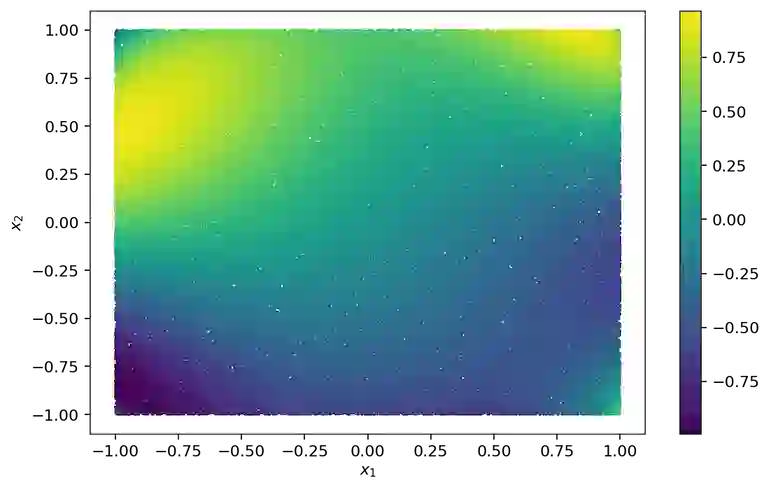

The quantum kernel method is one of the key approaches to quantum machine learning; its advantages are that it does not require optimization and that it is theoretically simple. By virtue of these properties, several experimental demonstrations and discussions of its potential advantages have been reported so far. However, as in classical machine learning, not all quantum machine learning models can be regarded as kernel methods. In this work, we explore a quantum machine learning model with a deep parameterized quantum circuit, aiming to go beyond the conventional quantum kernel method. In this setting, the representation power and performance are expected to be enhanced, while the training process might become a bottleneck because of the barren plateau problem. However, we find that the parameters of a sufficiently deep quantum circuit do not move much from their initial values during training, which allows a first-order expansion with respect to the parameters. This behavior is similar to that of the neural tangent kernel in the classical literature, and such deep variational quantum machine learning can be described by another emergent kernel, the quantum tangent kernel. Numerical simulations show that the proposed quantum tangent kernel outperforms the conventional quantum kernel method on an ansatz-generated dataset. This work provides a new direction beyond the conventional quantum kernel method and explores the potential power of quantum machine learning with deep parameterized quantum circuits.
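To make the first-order expansion concrete, the following is a minimal sketch of how a tangent kernel can be computed from parameter gradients at the initial parameters, by analogy with the classical neural tangent kernel: K(x, x') is the inner product of the gradients of the model output at x and x'. The single-qubit circuit, the names `model`, `gradient`, and `tangent_kernel`, and the choice of the Pauli-Z expectation value as the output are illustrative assumptions, not the ansatz or the exact kernel definition used in the paper.

```python
import numpy as np

def ry(a):
    # Rotation exp(-i a Y / 2)
    c, s = np.cos(a / 2), np.sin(a / 2)
    return np.array([[c, -s], [s, c]], dtype=complex)

def rz(a):
    # Rotation exp(-i a Z / 2)
    return np.array([[np.exp(-1j * a / 2), 0], [0, np.exp(1j * a / 2)]], dtype=complex)

Z = np.diag([1.0, -1.0])

def model(x, theta):
    """Hypothetical toy model: <Z> after repeated RY(x) encoding and trainable RZ, RY gates."""
    state = np.array([1.0, 0.0], dtype=complex)
    for a, b in theta.reshape(-1, 2):
        state = ry(b) @ rz(a) @ ry(x) @ state
    return float(np.real(state.conj() @ Z @ state))

def gradient(x, theta):
    """Gradient of the output w.r.t. theta via the parameter-shift rule."""
    grad = np.zeros_like(theta)
    for k in range(theta.size):
        shift = np.zeros_like(theta)
        shift[k] = np.pi / 2
        grad[k] = 0.5 * (model(x, theta + shift) - model(x, theta - shift))
    return grad

def tangent_kernel(xs, theta):
    """K[i, j] = grad f(x_i; theta) . grad f(x_j; theta), evaluated at fixed theta."""
    jac = np.stack([gradient(x, theta) for x in xs])
    return jac @ jac.T

rng = np.random.default_rng(0)
theta0 = rng.uniform(0, 2 * np.pi, size=2 * 4)  # 4 layers, 2 trainable angles each (assumed)
xs = np.linspace(-1.0, 1.0, 5)                  # toy inputs
K = tangent_kernel(xs, theta0)
```

The resulting matrix `K` is symmetric and positive semidefinite by construction, so under these assumptions it could be passed to a standard kernel method (for example, a support vector machine with a precomputed kernel) in place of a conventional quantum kernel.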
