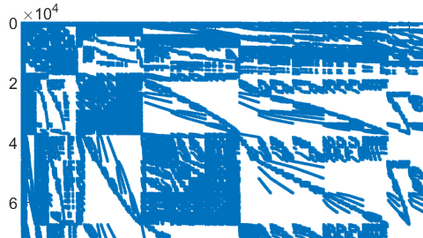

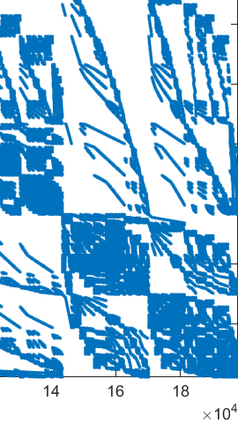

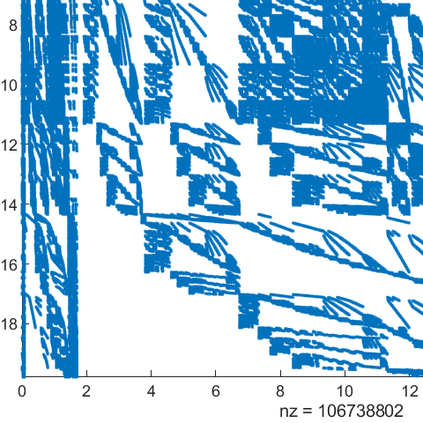

We examine the possibility of using a reinforcement learning (RL) algorithm to solve large-scale eigenvalue problems in which the desired eigenvector can be approximated by a sparse vector with at most $k$ nonzero elements, where $k$ is relatively small compared to the dimension of the matrix to be partially diagonalized. This type of problem arises in applications in which the desired eigenvector exhibits localization properties and in large-scale eigenvalue computations in which computational resources are limited. When the positions of these nonzero elements can be determined, we can obtain the $k$-sparse approximation to the original problem by computing eigenvalues of a $k\times k$ submatrix extracted from $k$ rows and columns of the original matrix. We review a previously developed greedy algorithm for incrementally probing the positions of the nonzero elements in a $k$-sparse approximate eigenvector and show that the greedy algorithm can be improved by using an RL method to refine the selection of $k$ rows and columns of the original matrix. We describe how to represent states, actions, rewards and policies in an RL algorithm designed to solve the $k$-sparse eigenvalue problem and demonstrate the effectiveness of the RL algorithm on two examples originating from quantum many-body physics.
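To make the $k\times k$ submatrix idea concrete, the following is a minimal Python sketch, assuming a real symmetric matrix and a target of the lowest eigenvalue. The function names `k_sparse_eig` and `greedy_select` are hypothetical, and the selection rule used here (add the unselected index with the largest residual magnitude) is only an illustrative stand-in; it is not claimed to be the greedy criterion or the RL policy of the paper.

```python
import numpy as np


def k_sparse_eig(A, idx):
    """Lowest eigenpair of the principal submatrix A[idx, idx].

    Returns the eigenvalue and the eigenvector padded with zeros to
    full length, i.e. a vector with at most len(idx) nonzero elements.
    """
    idx = np.asarray(idx)
    sub = A[np.ix_(idx, idx)]          # rows and columns selected by idx
    vals, vecs = np.linalg.eigh(sub)   # eigenvalues in ascending order
    x = np.zeros(A.shape[0])
    x[idx] = vecs[:, 0]
    return vals[0], x


def greedy_select(A, k, start):
    """Grow an index set to size k, one index at a time.

    Assumption for illustration: the next index is the unselected one
    with the largest residual magnitude |(A x - theta x)_i|.
    """
    idx = list(start)
    while len(idx) < k:
        theta, x = k_sparse_eig(A, idx)
        scores = np.abs(A @ x - theta * x)
        scores[idx] = -1.0             # never pick an index twice
        idx.append(int(np.argmax(scores)))
    return idx


# Usage on a small random symmetric matrix (synthetic data, illustration only).
rng = np.random.default_rng(0)
M = rng.standard_normal((50, 50))
A = (M + M.T) / 2
start = [int(np.argmin(np.diag(A)))]   # begin at the smallest diagonal entry
idx = greedy_select(A, k=10, start=start)
theta, x = k_sparse_eig(A, idx)
```

By the Cauchy interlacing theorem, the lowest eigenvalue of any principal submatrix is an upper bound on the lowest eigenvalue of the full matrix, and the bound cannot get worse as indices are added. The quality of the $k$-sparse approximation therefore depends entirely on which $k$ rows and columns are chosen, which is the selection that the RL method is meant to refine.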
