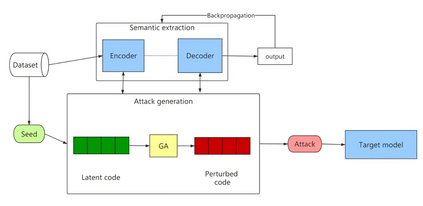

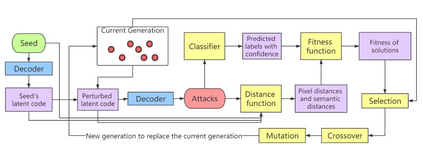

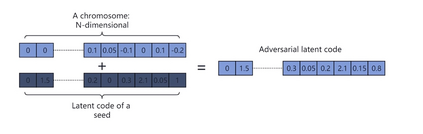

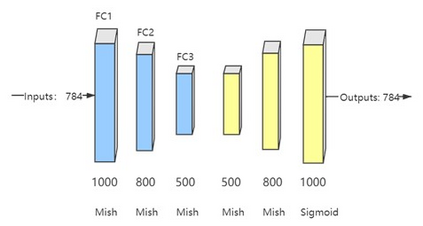

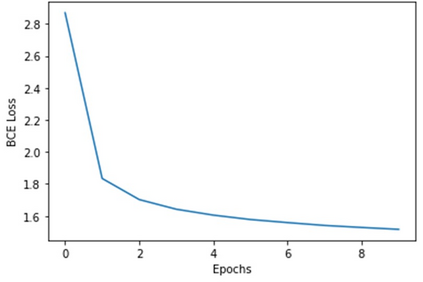

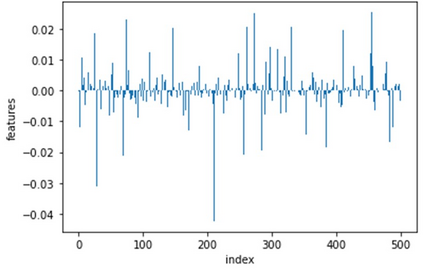

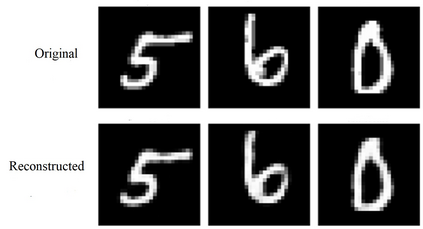

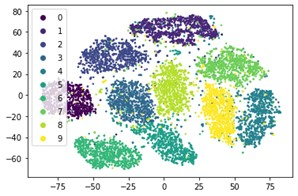

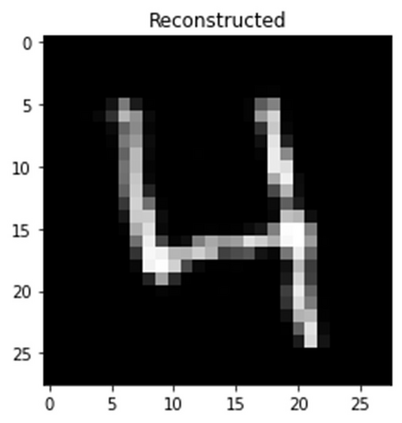

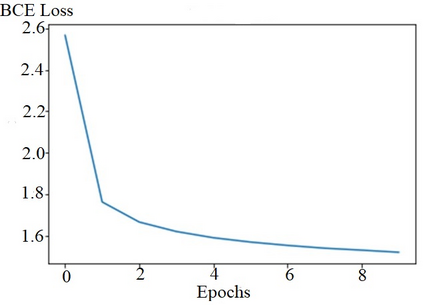

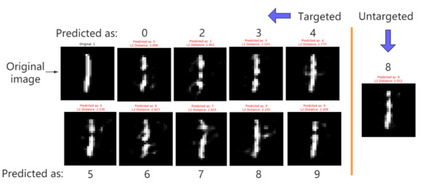

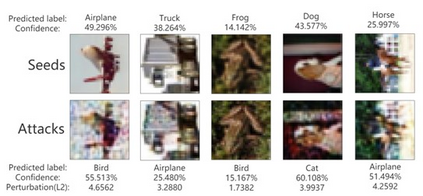

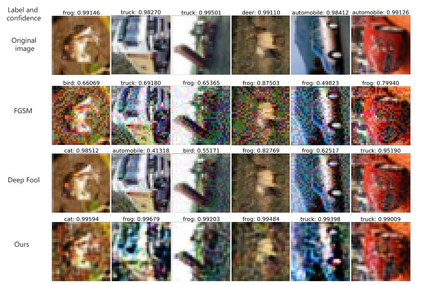

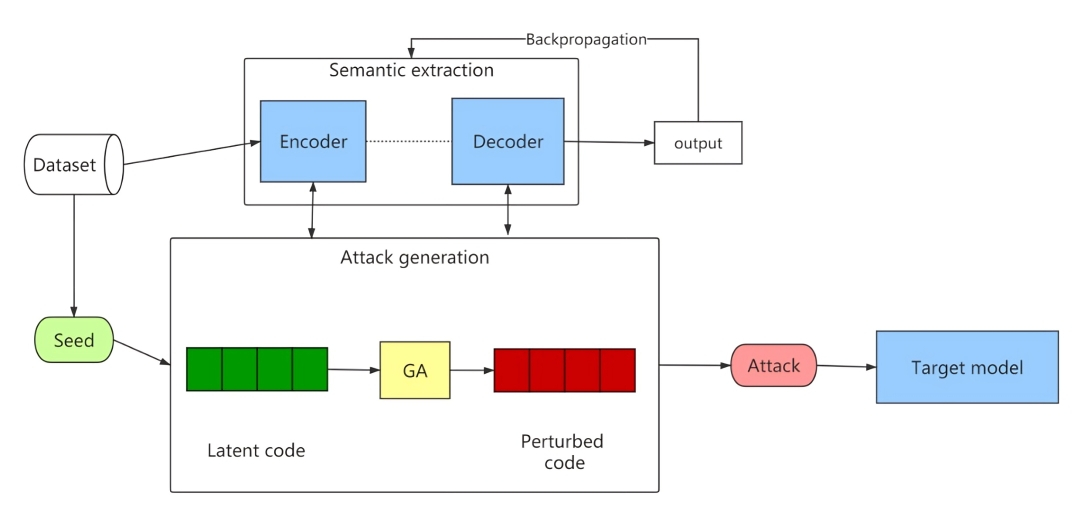

Widely used deep learning models have been found to lack robustness: small perturbations can fool state-of-the-art models into making incorrect predictions. Although many high-performing methods for generating adversarial attacks exist, most of them add perturbations directly to the original data and measure those perturbations with $L_p$ norms; this can break the underlying structure of the data and thus produce invalid attacks. In this paper, we propose a black-box attack that, instead of modifying the original data, modifies latent features of the data extracted by an autoencoder; we then measure the noise in semantic space to preserve the semantics of the data. We trained autoencoders on the MNIST and CIFAR-10 datasets and found optimal adversarial perturbations using a genetic algorithm. Our approach achieved a 100% attack success rate on the first 100 samples of both MNIST and CIFAR-10 while using less perturbation than the Fast Gradient Sign Method (FGSM).
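The abstract does not specify the autoencoder architecture, the genetic-algorithm operators, or the exact semantic-space distance, so the following is only a minimal sketch under stated assumptions. A hypothetical fixed linear "autoencoder" (`encode`/`decode`) stands in for a trained network, a hypothetical `classifier` is queried only for its output scores (the black-box setting), and the L2 norm of the latent perturbation (`latent_norm`) is an assumed proxy for the paper's semantic-space measure. Truncation selection, uniform crossover, and Gaussian mutation are common genetic-algorithm choices, not the authors' confirmed operators.

```python
import math
import random

# Hypothetical stand-in for a trained autoencoder: a fixed linear encoder that
# averages pixel pairs into a 2-D latent code, and a decoder that copies each
# latent unit back to its two pixels. Not the architecture used in the paper.
W_ENC = [[0.5, 0.5, 0.0, 0.0],
         [0.0, 0.0, 0.5, 0.5]]

def encode(x):
    return [sum(w * xi for w, xi in zip(row, x)) for row in W_ENC]

def decode(z):
    return [z[0], z[0], z[1], z[1]]

def classifier(x):
    # Hypothetical black-box model: the attacker sees only these class scores.
    s = (x[0] + x[1]) - (x[2] + x[3])
    return [s, -s]

def latent_norm(delta):
    # Assumed proxy for the "semantic space" distance: L2 norm in latent space.
    return math.sqrt(sum(d * d for d in delta))

def fitness(z, delta, true_label):
    scores = classifier(decode([zi + di for zi, di in zip(z, delta)]))
    best_other = max(s for i, s in enumerate(scores) if i != true_label)
    margin = best_other - scores[true_label]   # > 0 means the label flipped
    if margin > 0:
        return -latent_norm(delta)             # success: prefer small perturbations
    return margin - 100.0                      # failure: below any success with norm < 100

def ga_attack(x, true_label, pop_size=30, generations=50,
              init_sigma=0.5, mut_sigma=0.1, seed=0):
    rng = random.Random(seed)
    z = encode(x)
    # Each individual is a candidate perturbation of the latent code, not of x.
    pop = [[rng.gauss(0.0, init_sigma) for _ in z] for _ in range(pop_size)]
    for _ in range(generations):
        pop.sort(key=lambda d: fitness(z, d, true_label), reverse=True)
        elite = pop[: pop_size // 2]                                   # truncation selection
        children = []
        while len(elite) + len(children) < pop_size:
            a, b = rng.sample(elite, 2)
            child = [rng.choice(genes) for genes in zip(a, b)]         # uniform crossover
            child = [g + rng.gauss(0.0, mut_sigma) for g in child]     # Gaussian mutation
            children.append(child)
        pop = elite + children
    best = max(pop, key=lambda d: fitness(z, d, true_label))
    return decode([zi + di for zi, di in zip(z, best)]), best
```

For example, `ga_attack([1.0, 1.0, 0.0, 0.0], true_label=0)` searches for a latent perturbation that flips the toy classifier's decision using only score queries. Because the fitness ranks every successful candidate above every failed one and then prefers smaller latent norms, the search first pushes toward the decision boundary and then shrinks the perturbation.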

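FGSM, the baseline the abstract compares against, is a white-box attack that edits pixels directly, and its perturbation is usually measured with an $L_p$ norm. As a hedged illustration rather than the paper's experimental setup, the sketch below applies FGSM to a hypothetical logistic-regression model with made-up weights `W` and `B`, clips pixels to an assumed [0, 1] range, and measures the resulting perturbation with a helper `lp_norm` (also hypothetical) for the L0, L2, and L∞ norms.

```python
import math

# Hypothetical white-box model: logistic regression, p(y=1|x) = sigmoid(w.x + b).
W = [2.0, -1.0, 0.5, 1.5]
B = -0.5

def predict_proba(x):
    return 1.0 / (1.0 + math.exp(-(sum(wi * xi for wi, xi in zip(W, x)) + B)))

def input_gradient(x, y):
    # Gradient of binary cross-entropy w.r.t. the input for logistic regression: (p - y) * w.
    p = predict_proba(x)
    return [(p - y) * wi for wi in W]

def sign(v):
    return (v > 0) - (v < 0)

def fgsm(x, y, eps):
    """One step of size eps along the sign of the loss gradient (FGSM)."""
    g = input_gradient(x, y)
    # Clip to an assumed valid pixel range of [0, 1].
    return [min(1.0, max(0.0, xi + eps * sign(gi))) for xi, gi in zip(x, g)]

def lp_norm(v, p):
    if p == 0:
        return sum(1 for vi in v if vi != 0)    # L0: number of changed entries
    if p == math.inf:
        return max(abs(vi) for vi in v)         # L_inf: largest single change
    return sum(abs(vi) ** p for vi in v) ** (1.0 / p)
```

With `x = [1.0, 0.0, 1.0, 0.0]`, true label 1, and `eps=0.8`, the step changes three of the four pixels by 0.8 each and flips the prediction. Every changed pixel moves by the full `eps` regardless of the image's structure, which illustrates the direct pixel-space edits the abstract contrasts with latent-space perturbations.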