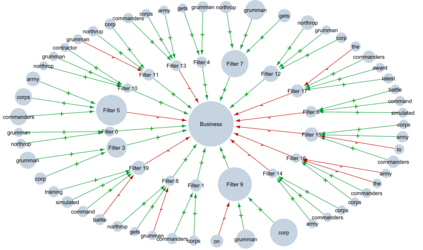

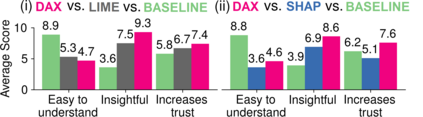

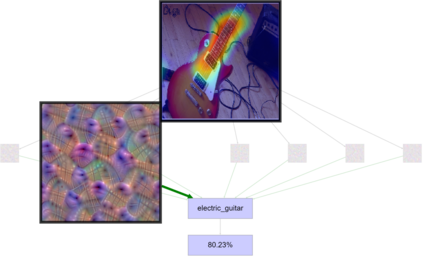

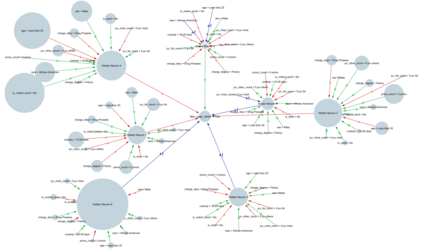

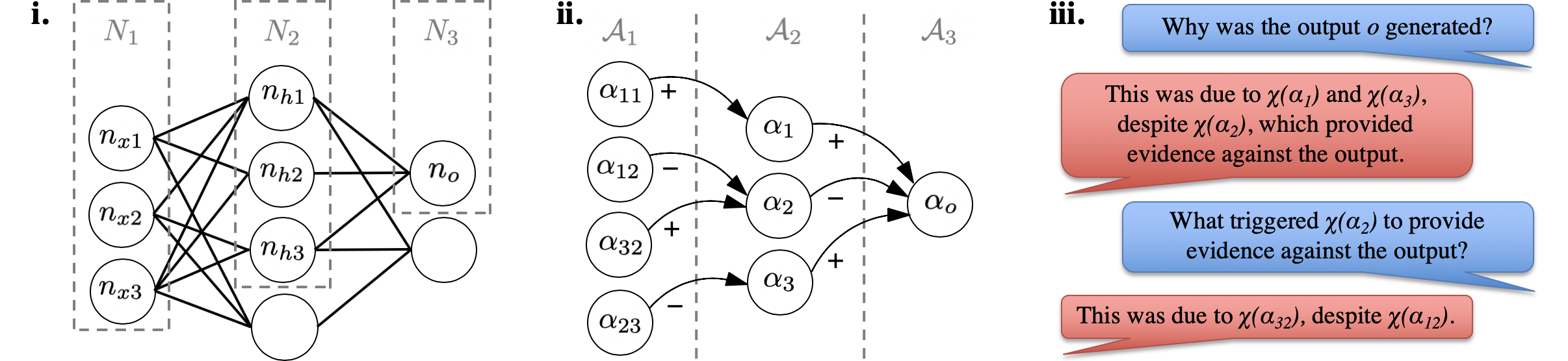

Despite the recent, widespread focus on eXplainable AI (XAI), explanations computed by XAI methods tend to provide little insight into the functioning of Neural Networks (NNs). We propose a novel framework for obtaining (local) explanations from NNs while providing transparency about their inner workings, and show how to deploy it for various neural architectures and tasks. We refer to our novel explanations collectively as Deep Argumentative eXplanations (DAXs for short), given that they reflect the deep structure of the underlying NNs and that they are defined in terms of notions from computational argumentation, a form of symbolic AI offering useful reasoning abstractions for explanation. We evaluate DAXs empirically, showing that they exhibit deep fidelity and low computational cost. We also conduct human experiments indicating that DAXs are comprehensible to humans and align with their judgement, while also being competitive, in terms of user acceptance, with some existing approaches to XAI that also have an argumentative spirit.

翻译:尽管最近广泛关注可氧化的AI(XAI),但XAI方法计算的解释对神经网络(NNs)的功能几乎没有什么洞察力。我们提议了一个新的框架,从NNs获取(当地)解释,同时对其内部工作提供透明度,并展示如何将它用于各种神经结构和任务。我们将我们的新解释统称为深参数XS(简称DAX),因为它们反映了基本NS的深层结构,并且从计算论中的概念定义了它们,这是一种象征性的AI,提供了有用的推理抽象解释。我们从经验上评价DAX,表明它们表现出高度忠诚和低计算成本。我们还进行人类实验,表明DAX是人类能够理解的,并且符合其判断,同时在用户接受方面具有竞争力,同时在XAI的一些现有方法中也具有辩论精神。