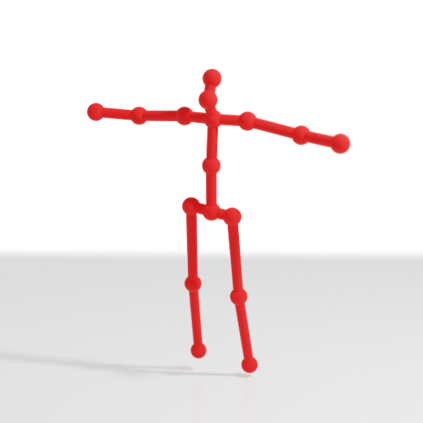

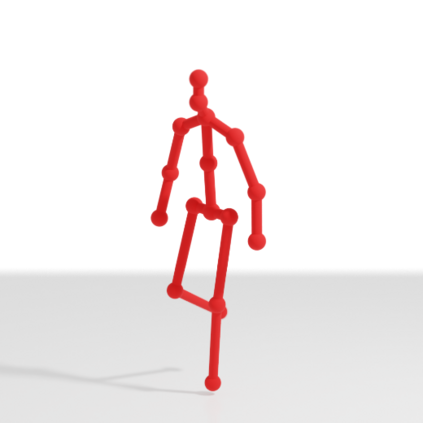

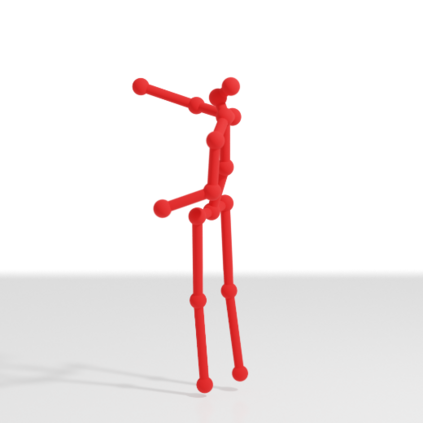

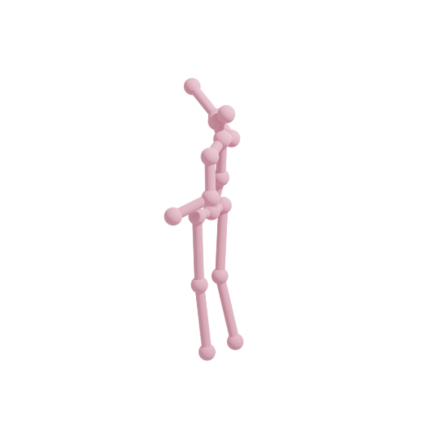

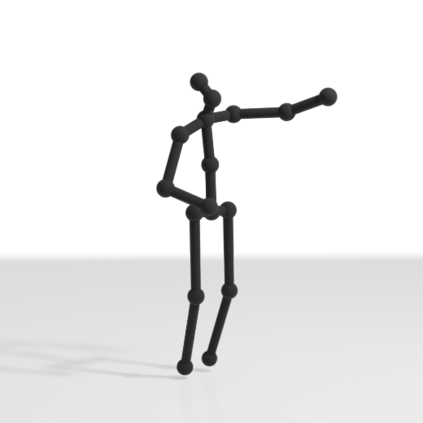

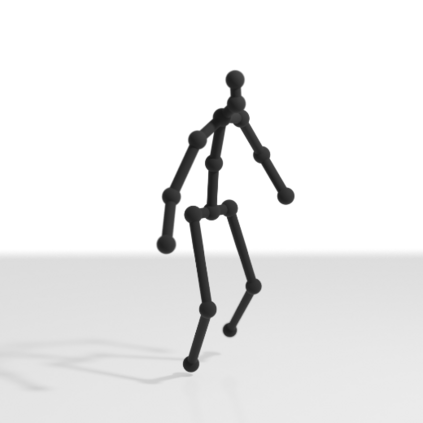

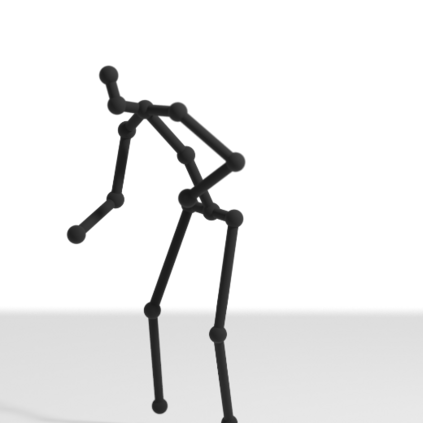

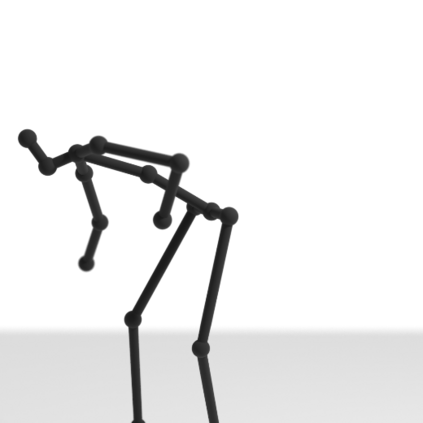

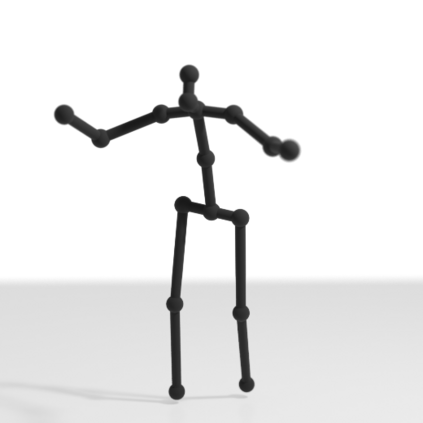

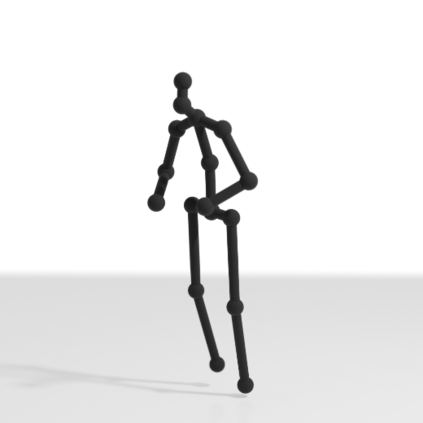

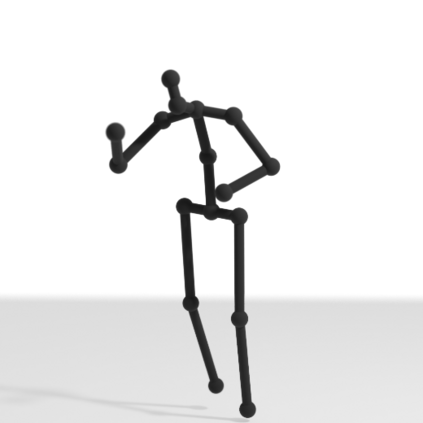

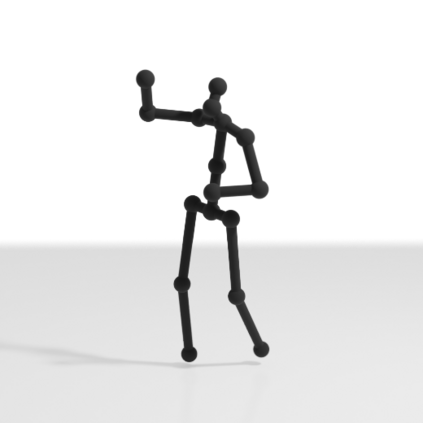

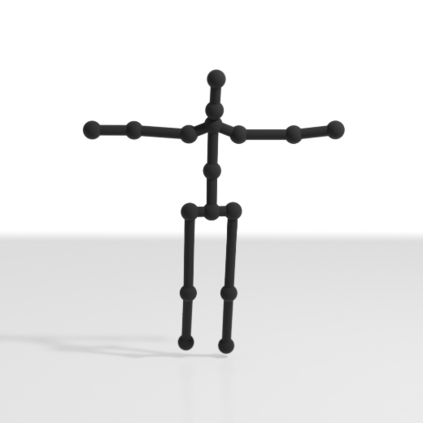

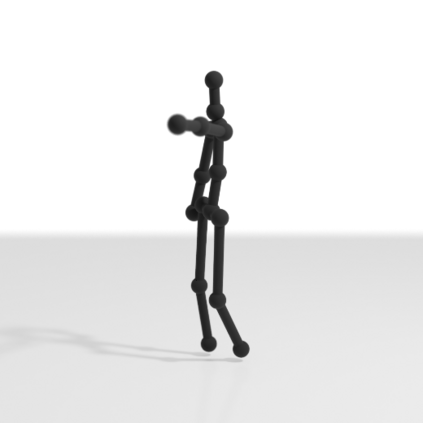

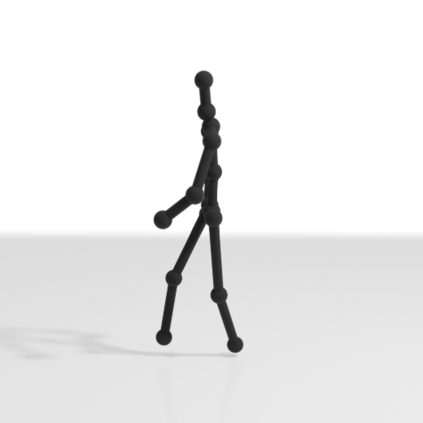

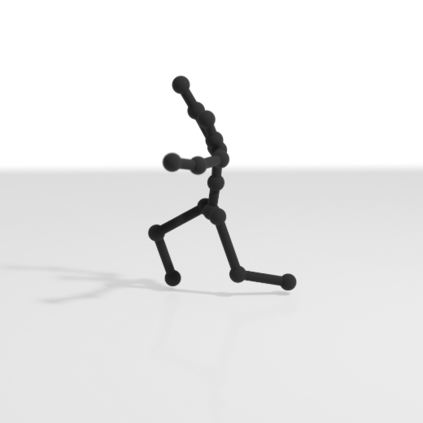

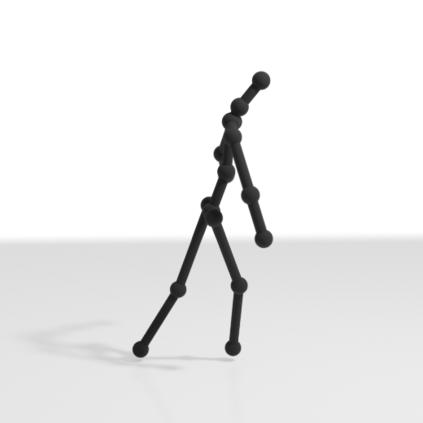

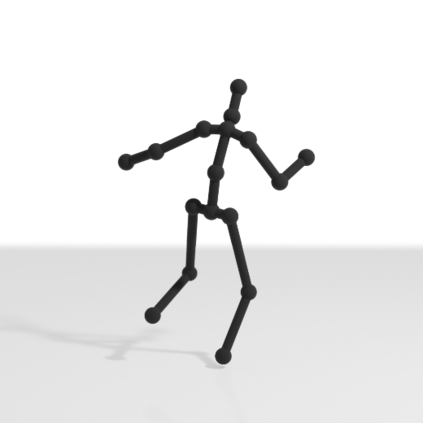

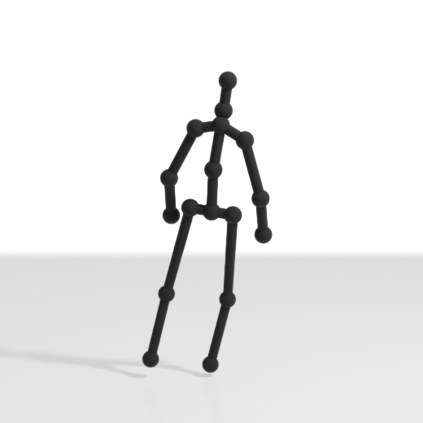

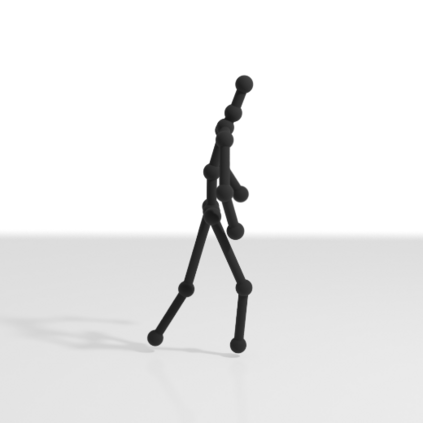

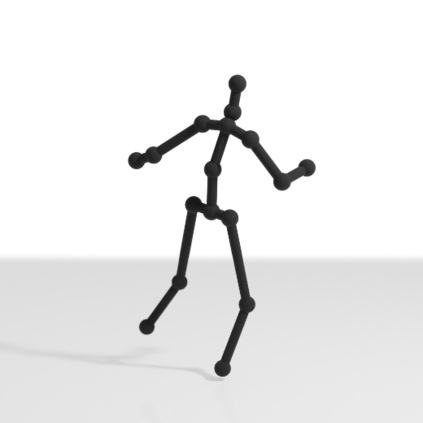

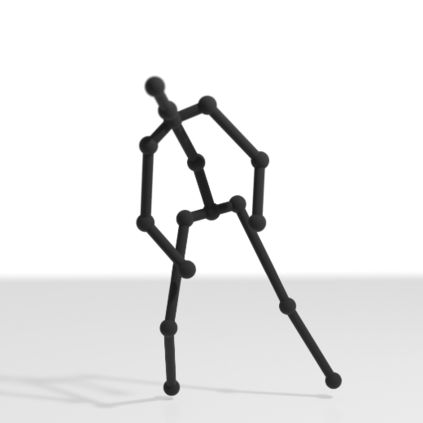

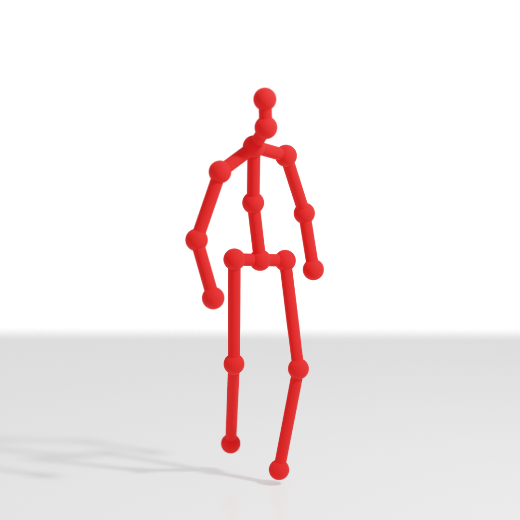

We present a simple yet effective approach for self-supervised 3D human pose estimation. Unlike prior work, we exploit temporal information alongside multi-view self-supervision. During training, we triangulate the 2D body pose estimates from a multi-view camera system to generate 3D ground truth. A temporal convolutional neural network is trained with this generated 3D ground truth and a geometric multi-view consistency loss, which imposes geometric constraints on the predicted 3D body skeleton. During inference, our model receives a sequence of 2D body pose estimates from a single view and predicts the 3D body pose for each of them. An extensive evaluation shows that our method achieves state-of-the-art performance on the Human3.6M and MPI-INF-3DHP benchmarks. Our code and models are publicly available at https://github.com/vru2020/TM_HPE/.
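The triangulation step used to generate 3D training targets can be illustrated with a minimal sketch. The abstract does not say which triangulation method is used, so the code below assumes standard linear (DLT) triangulation with known 3x4 camera projection matrices. The function names `triangulate_joint`, `triangulate_pose`, and `project` are hypothetical and are not taken from the released code.

```python
import numpy as np


def project(P, X):
    """Project a 3D point X (3,) to pixel coordinates with a 3x4 matrix P."""
    x = P @ np.append(X, 1.0)
    return x[:2] / x[2]


def triangulate_joint(proj_mats, points_2d):
    """Linear (DLT) triangulation of one joint from N >= 2 views.

    Assumption: this is a generic textbook method, not necessarily
    the one used by the authors.

    proj_mats: (N, 3, 4) camera projection matrices
    points_2d: (N, 2) pixel coordinates of the same joint in each view
    """
    rows = []
    for P, (u, v) in zip(proj_mats, points_2d):
        # Each view contributes two linear constraints on the homogeneous point.
        rows.append(u * P[2] - P[0])
        rows.append(v * P[2] - P[1])
    A = np.stack(rows)
    # The solution is the right singular vector with the smallest singular value.
    _, _, vt = np.linalg.svd(A)
    X_h = vt[-1]
    return X_h[:3] / X_h[3]


def triangulate_pose(proj_mats, pose_2d):
    """Triangulate a whole skeleton: pose_2d (N, J, 2) -> (J, 3)."""
    num_joints = pose_2d.shape[1]
    return np.stack(
        [triangulate_joint(proj_mats, pose_2d[:, j]) for j in range(num_joints)]
    )


if __name__ == "__main__":
    # Two hypothetical cameras sharing intrinsics K, offset along the x axis.
    K = np.array([[1000.0, 0.0, 500.0], [0.0, 1000.0, 500.0], [0.0, 0.0, 1.0]])
    P1 = K @ np.hstack([np.eye(3), np.zeros((3, 1))])
    P2 = K @ np.hstack([np.eye(3), np.array([[-1.0], [0.0], [0.0]])])
    cams = np.stack([P1, P2])

    joint_3d = np.array([0.2, -0.1, 4.0])
    views = np.stack([project(P1, joint_3d), project(P2, joint_3d)])
    print(triangulate_joint(cams, views))
```

With noise-free 2D inputs the recovered point matches the original up to numerical precision. With real 2D detector outputs the result is only a least-squares estimate, which is one reason an additional multi-view consistency term during training can be useful.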