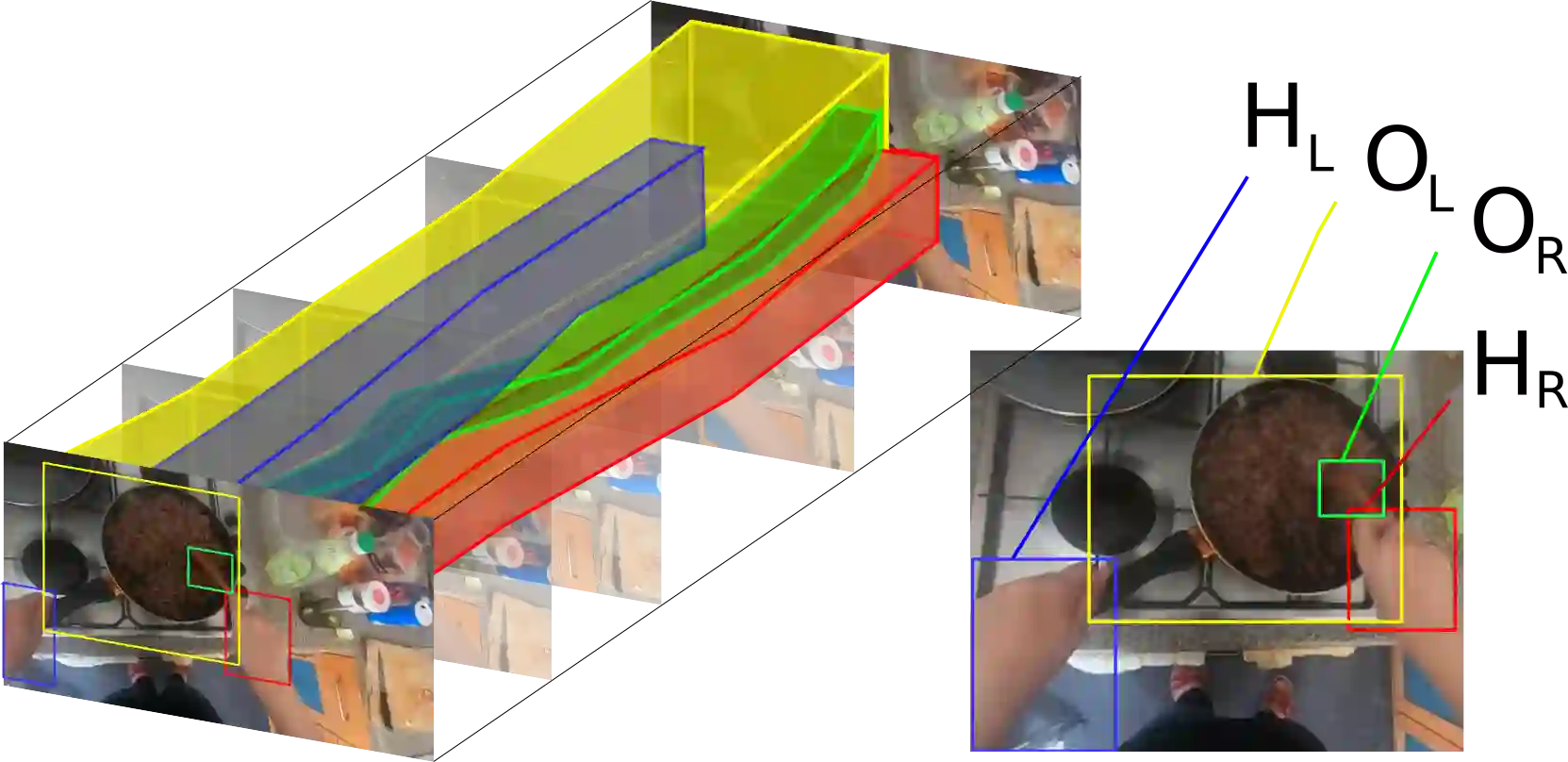

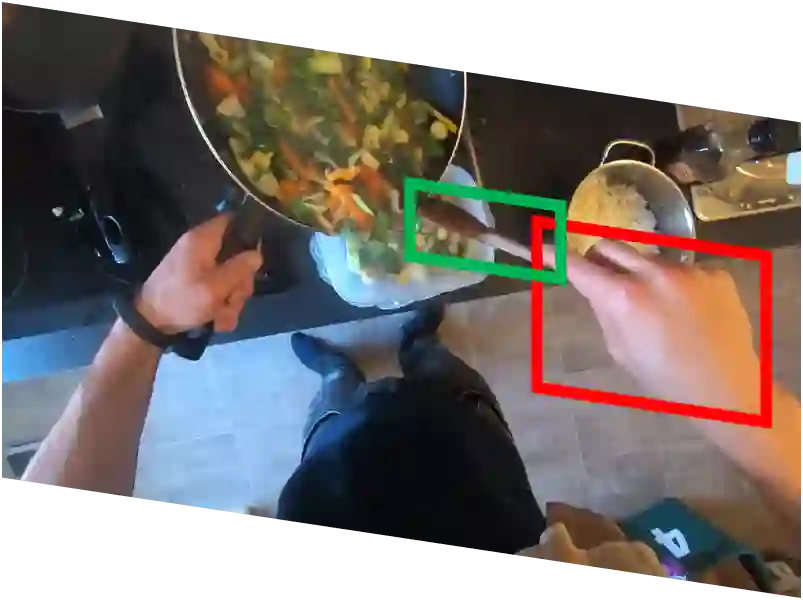

This paper proposes an interaction reasoning network for modelling spatio-temporal relationships between hands and objects in video. The proposed interaction unit utilises a Transformer module to reason about each acting hand and its spatio-temporal relation to the other hand, as well as to the objects being interacted with. We show that modelling two-handed interactions is critical for action recognition in egocentric video, and demonstrate that by using positionally-encoded trajectories, the network can better recognise observed interactions. We evaluate our proposal on the EPIC-KITCHENS and Something-Else datasets, with an ablation study.
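As a rough illustration of what a positionally-encoded trajectory could look like, the sketch below turns a per-frame bounding-box track into a sequence of tokens that a Transformer could consume. The abstract does not say which encoding the paper uses; the sinusoidal scheme here is an assumption borrowed from the original Transformer, and the names `sinusoidal_encoding`, `encode_trajectory` and `hand_track`, along with the box format and the dimension `dim=8`, are hypothetical.

```python
import math

def sinusoidal_encoding(value, dim=8, max_period=10000.0):
    """Encode a scalar as `dim` interleaved sin/cos features (dim must be even)."""
    if dim % 2 != 0:
        raise ValueError("dim must be even")
    features = []
    for i in range(dim // 2):
        # Frequencies fall geometrically from 1 towards 1/max_period.
        freq = 1.0 / (max_period ** (2 * i / dim))
        features.append(math.sin(value * freq))
        features.append(math.cos(value * freq))
    return features

def encode_trajectory(boxes, dim=8):
    """Encode a trajectory of (x1, y1, x2, y2) boxes, one box per frame.

    Each frame becomes one token: the encoding of the frame index followed
    by the encodings of the four box coordinates (an assumed layout, not
    the paper's).
    """
    tokens = []
    for t, box in enumerate(boxes):
        token = sinusoidal_encoding(t, dim)
        for coord in box:
            token.extend(sinusoidal_encoding(coord, dim))
        tokens.append(token)
    return tokens

# Hypothetical hand track over three frames, in pixel coordinates.
hand_track = [(10, 20, 50, 80), (12, 22, 52, 82), (15, 25, 55, 85)]
hand_tokens = encode_trajectory(hand_track)
print(len(hand_tokens), len(hand_tokens[0]))
```

Under these assumptions, tokens built this way for each hand and each object would form the input sequence over which an attention module relates the acting hand to the other hand and to the objects.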
