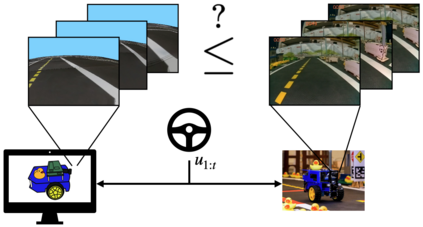

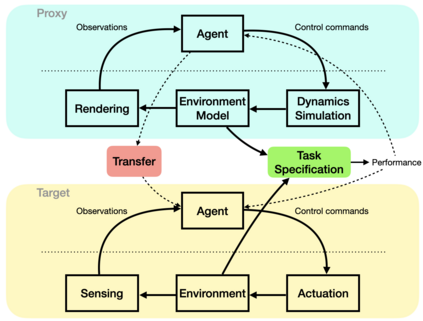

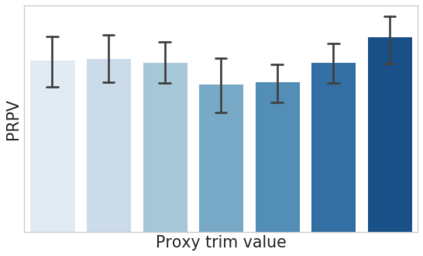

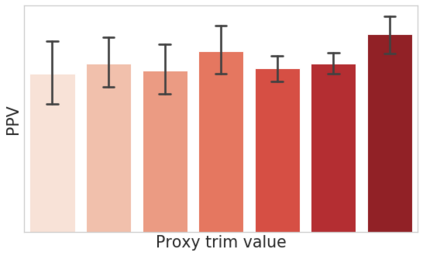

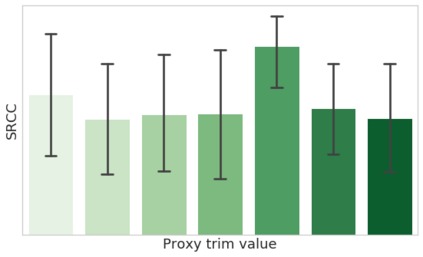

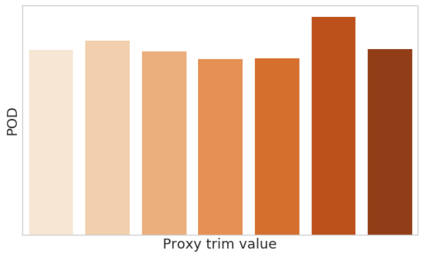

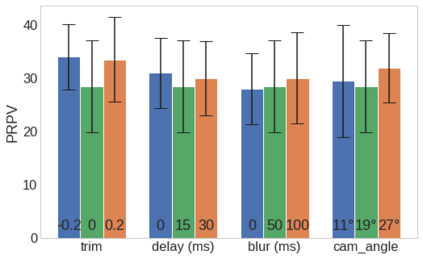

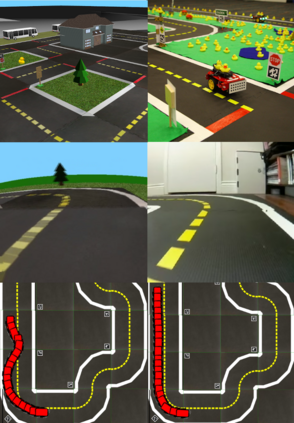

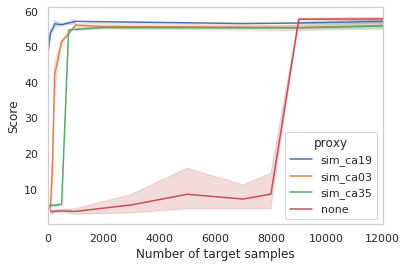

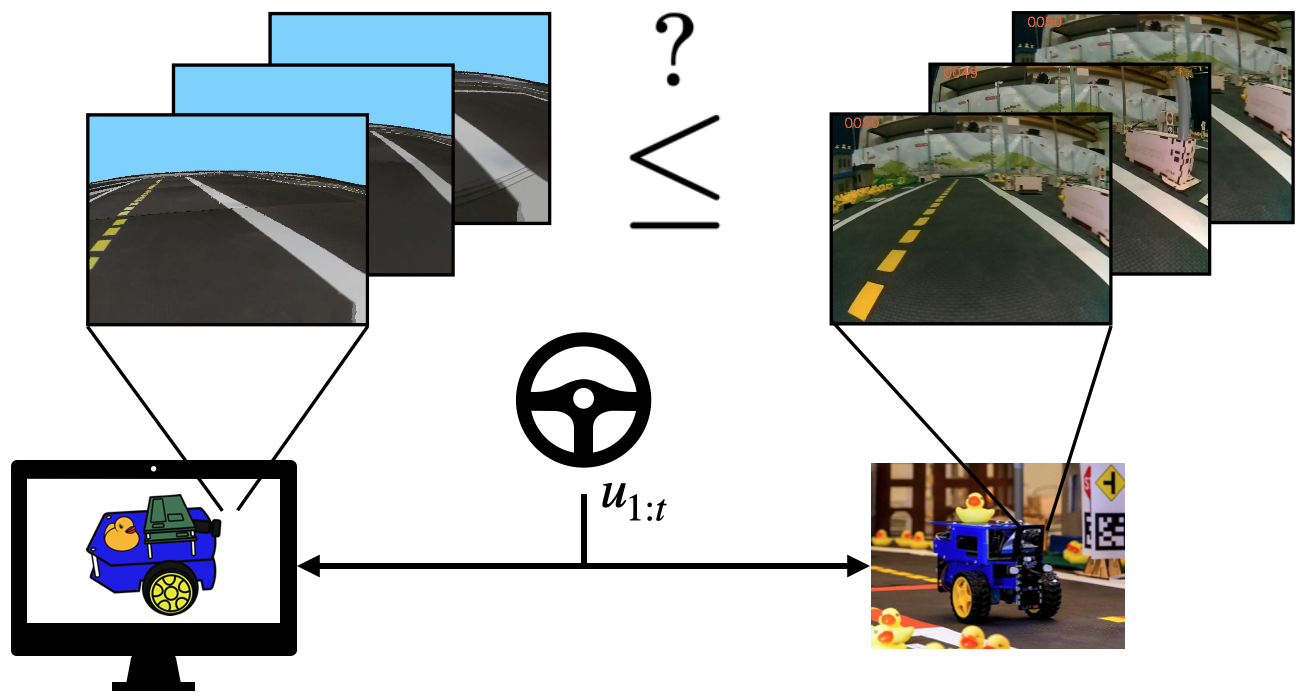

In many situations it is impossible or impractical to develop and evaluate agents entirely on the target domain in which they will be deployed. This is particularly true in robotics, where running experiments on hardware is much more arduous than running them in simulation, and arguably even more so for learning-based agents. Considerable recent effort has therefore been devoted to developing increasingly realistic, higher-fidelity simulators. However, we lack a principled way to evaluate how good a "proxy domain" is, specifically how useful it is in helping us achieve our end objective of building an agent that performs well in the target domain. In this work, we investigate methods to address this need. We begin by clearly separating two uses of proxy domains that are often conflated: 1) their ability to be a faithful predictor of agent performance and 2) their ability to be a useful tool for learning. We clarify the role of proxy domains and establish new proxy usefulness (PU) metrics for comparing different proxy domains. We propose the relative predictive PU to assess the predictive ability of a proxy domain and the learning PU to quantify the usefulness of a proxy as a tool to generate learning data. Furthermore, we argue that the value of a proxy is conditioned on the task that it is being used to help solve. We demonstrate how these new metrics can be used to optimize parameters of the proxy domain for which obtaining ground truth via system identification is not trivial.
