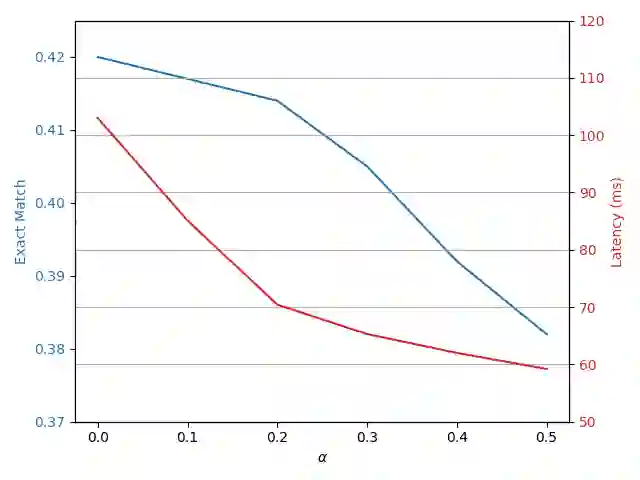

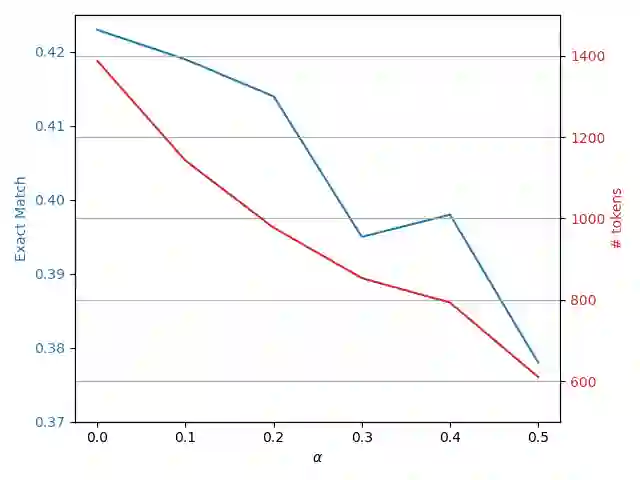

Reasoning models have gained significant attention due to their strong performance, particularly when enhanced with retrieval augmentation. However, these models often incur high computational costs, as both retrieval and reasoning tokens contribute substantially to overall resource usage. In this work, we make the following contributions: (1) we propose a retrieval-augmented reasoning model that dynamically adjusts the length of the retrieved document list based on the query and retrieval results; (2) we develop a cost-aware advantage function for training efficient retrieval-augmented reasoning models through reinforcement learning; and (3) we explore both memory-bound and latency-bound implementations of the proposed cost-aware framework for both the proximal policy optimization (PPO) and group relative policy optimization (GRPO) algorithms. We evaluate our approach on seven public question answering datasets and demonstrate significant efficiency gains without compromising effectiveness: model latency decreases by approximately 16-20% across datasets, while effectiveness, measured by exact match, increases by approximately 5% on average.
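The abstract does not give the form of the cost-aware advantage function, so the following is only a minimal sketch of one way such a function could look in a group-relative setting, not the formulation used in this work. It assumes each rollout in a group has a scalar task reward (for example, exact match) and a token cost covering retrieved and reasoning tokens; the function name `cost_aware_advantages`, the `cost_weight` parameter, and the linear penalty are all hypothetical choices made for illustration.

```python
from statistics import mean, pstdev


def cost_aware_advantages(rewards, costs, cost_weight=0.1, eps=1e-8):
    """Illustrative group-relative advantages with a token-cost penalty.

    rewards: task reward per rollout in one group (e.g. exact match, 0 or 1).
    costs:   token cost per rollout (retrieved + reasoning tokens).
    cost_weight: hypothetical trade-off between reward and cost.
    """
    if not rewards or len(rewards) != len(costs):
        raise ValueError("rewards and costs must be non-empty and equal length")

    # Normalize costs by the most expensive rollout so the penalty lies in [0, cost_weight].
    max_cost = max(costs) or 1
    shaped = [r - cost_weight * (c / max_cost) for r, c in zip(rewards, costs)]

    # Standardize within the group, as in group-relative policy optimization.
    mu = mean(shaped)
    sigma = pstdev(shaped)
    return [(s - mu) / (sigma + eps) for s in shaped]


# Two correct and two incorrect rollouts, each pair with a cheap and an expensive variant.
advantages = cost_aware_advantages(
    rewards=[1.0, 1.0, 0.0, 0.0],
    costs=[100, 200, 100, 200],
)
```

Under these assumptions, a correct answer produced with fewer tokens receives a larger advantage than an equally correct but more expensive one, which is the kind of pressure toward shorter retrieved lists and reasoning traces that the abstract describes.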
