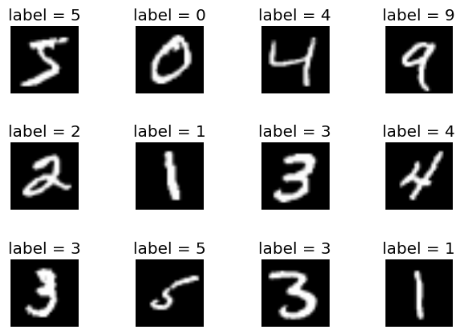

Increasing an ML model's accuracy is not enough; we must also increase its trustworthiness. This is an important step toward building resilient AI systems for safety-critical applications such as automotive, finance, and healthcare. For that purpose, we propose a multi-agent system that combines machine and human agents. In this system, a checker agent uses an agreement-based method to calculate a trust score for each instance (a score that penalizes both overconfidence and overcautiousness in predictions) and ranks the instances by that score. An improver agent then filters the anomalous instances using a human rule-based procedure (which is considered safe), obtains human labels, applies geometric data augmentation, and retrains on the augmented data using transfer learning. We evaluate the system on corrupted versions of the MNIST and FashionMNIST datasets. Compared to a baseline approach, we obtain an improvement in accuracy and trust score with just a few additional labels.
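The abstract does not give the trust-score formula, so the following is only a minimal sketch of what an agreement-based score that penalizes both overconfidence and overcautiousness could look like. Everything here is an assumption made for illustration: the function names `trust_scores` and `rank_least_trusted`, the use of a second predictor's labels as the agreement reference, and the specific scoring rule (confidence when the two predictors agree, one minus confidence when they disagree).

```python
import numpy as np


def trust_scores(probs, reference_labels):
    """Hypothetical agreement-based trust score (not the paper's formula).

    probs: (n, k) array of class probabilities from the model under check.
    reference_labels: (n,) array of labels from a second predictor (assumed).
    """
    probs = np.asarray(probs, dtype=float)
    reference_labels = np.asarray(reference_labels)
    predicted = probs.argmax(axis=1)
    confidence = probs.max(axis=1)
    agrees = predicted == reference_labels
    # Agreement: the score equals the confidence, so a hesitant but
    # agreeing prediction (overcautious) scores lower.
    # Disagreement: the score is 1 - confidence, so a confident but
    # disagreeing prediction (overconfident) scores lowest.
    return np.where(agrees, confidence, 1.0 - confidence)


def rank_least_trusted(scores):
    """Return instance indices ordered from least to most trusted."""
    return np.argsort(scores, kind="stable")


if __name__ == "__main__":
    probs = [[0.9, 0.1], [0.6, 0.4], [0.9, 0.1], [0.6, 0.4]]
    reference = [0, 0, 1, 1]
    scores = trust_scores(probs, reference)
    print(scores)
    print(rank_least_trusted(scores))
```

Under these assumptions, the instances at the front of the ranking are the ones a downstream step would send for filtering and human labeling first.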
