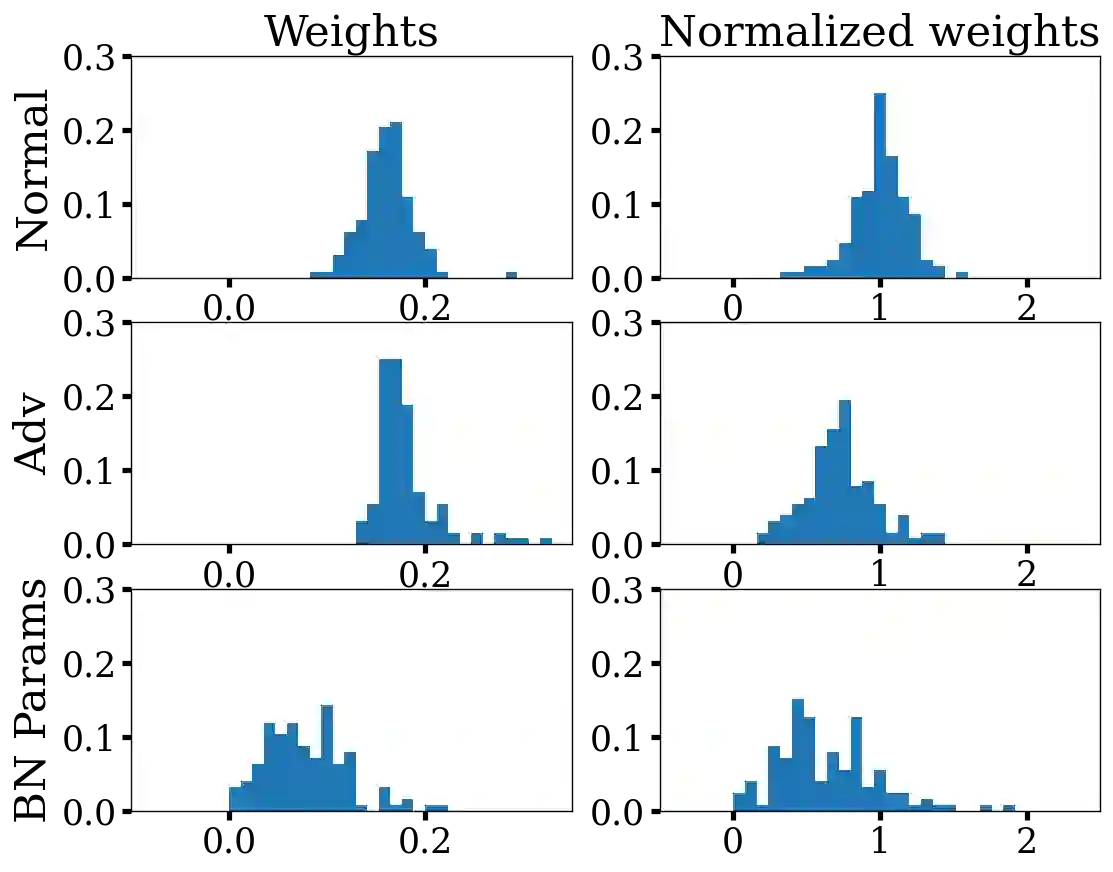

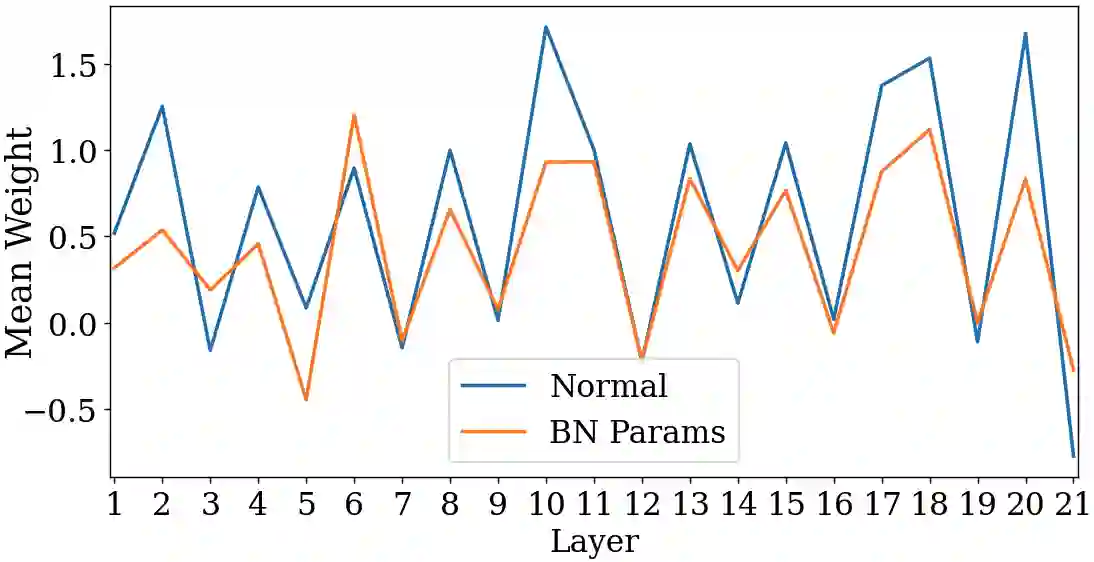

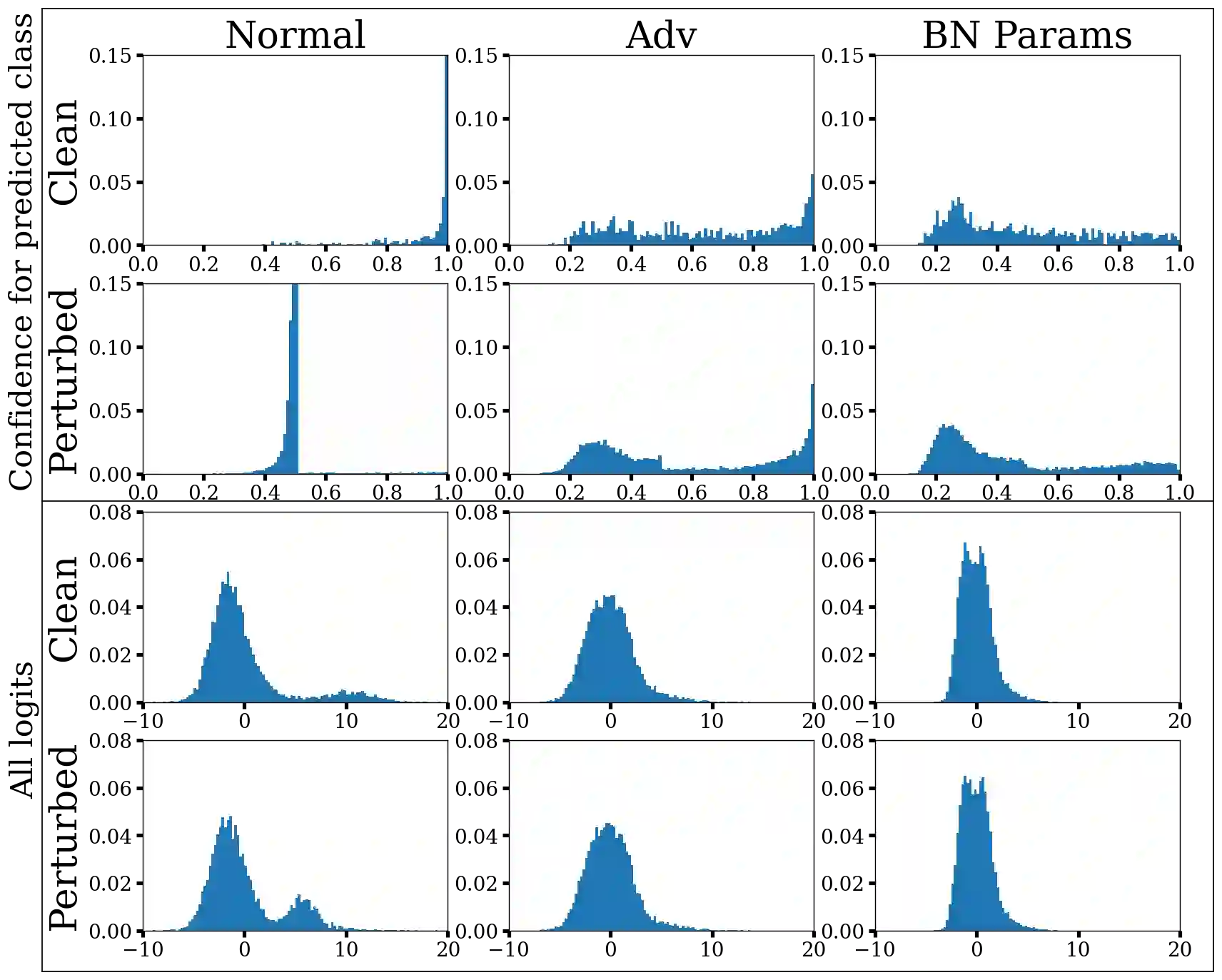

Modern deep learning architectures utilize batch normalization (BN) to stabilize training and improve accuracy. It has been shown that the BN layers alone are surprisingly expressive. In the context of robustness against adversarial examples, however, BN is argued to increase vulnerability; that is, BN helps to learn fragile features. Nevertheless, BN is still used in adversarial training, which is the de facto standard for learning robust features. To shed light on the role of BN in adversarial training, we investigate to what extent the expressiveness of BN can be used to robustify fragile features in comparison to random features. On CIFAR10, we find that adversarially fine-tuning just the BN layers can result in non-trivial adversarial robustness. In contrast, adversarially training only the BN layers from scratch does not convey meaningful adversarial robustness. Our results indicate that fragile features can be used to learn models with moderate adversarial robustness, while random features cannot.
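The core setup, adversarial training in which only the BN layers are updated, can be sketched in PyTorch. This is a minimal illustration under stated assumptions, not the authors' code. The abstract does not name the attack, so the sketch uses L∞ PGD (ε = 8/255, step size 2/255, 7 steps), a common CIFAR10 choice. `tiny_cnn`, `freeze_all_but_bn`, `pgd_attack`, and `adv_train_step` are hypothetical names. It also assumes that only the BN affine parameters (γ, β) are trained, with every convolution and the final linear layer frozen. For the fine-tuning setting, the frozen weights would come from a standardly (non-robustly) trained model. For the from-scratch setting, they stay at their random initialization.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

BN_TYPES = (nn.BatchNorm1d, nn.BatchNorm2d, nn.BatchNorm3d)


def tiny_cnn(num_classes: int = 10) -> nn.Module:
    # Hypothetical stand-in for a CIFAR10 network; load pretrained weights here
    # for the fine-tuning setting, or keep the random init for "from scratch".
    return nn.Sequential(
        nn.Conv2d(3, 16, 3, padding=1, bias=False), nn.BatchNorm2d(16), nn.ReLU(),
        nn.Conv2d(16, 32, 3, stride=2, padding=1, bias=False), nn.BatchNorm2d(32), nn.ReLU(),
        nn.AdaptiveAvgPool2d(1), nn.Flatten(), nn.Linear(32, num_classes),
    )


def freeze_all_but_bn(model: nn.Module) -> list:
    """Freeze every parameter, then re-enable only BN weight (gamma) and bias (beta)."""
    for p in model.parameters():
        p.requires_grad_(False)
    bn_params = []
    for m in model.modules():
        if isinstance(m, BN_TYPES):
            for p in m.parameters():
                p.requires_grad_(True)
                bn_params.append(p)
    return bn_params


def pgd_attack(model, x, y, eps=8 / 255, alpha=2 / 255, steps=7):
    """L-infinity PGD with a random start; assumes inputs lie in [0, 1]."""
    x_adv = (x + torch.empty_like(x).uniform_(-eps, eps)).clamp(0, 1)
    for _ in range(steps):
        x_adv.requires_grad_(True)
        loss = F.cross_entropy(model(x_adv), y)
        (grad,) = torch.autograd.grad(loss, x_adv)  # gradient w.r.t. the input only
        x_adv = x_adv.detach() + alpha * grad.sign()
        # Project back onto the eps-ball around x and the valid pixel range.
        x_adv = torch.min(torch.max(x_adv, x - eps), x + eps).clamp(0, 1)
    return x_adv.detach()


def adv_train_step(model, optimizer, x, y) -> float:
    """One adversarial training step; the optimizer only holds BN parameters."""
    model.train()  # attack and update both run in train mode in this sketch
    x_adv = pgd_attack(model, x, y)
    optimizer.zero_grad()
    loss = F.cross_entropy(model(x_adv), y)
    loss.backward()
    optimizer.step()
    return loss.item()


if __name__ == "__main__":
    torch.manual_seed(0)
    model = tiny_cnn()
    bn_params = freeze_all_but_bn(model)
    opt = torch.optim.SGD(bn_params, lr=0.1, momentum=0.9)
    x = torch.rand(8, 3, 32, 32)      # random stand-in for a CIFAR10 batch
    y = torch.randint(0, 10, (8,))
    print(adv_train_step(model, opt, x, y))
```

Because the optimizer only receives the BN parameters, the convolutional filters never change, whether they are pretrained fragile features or random ones. Only the per-channel scale and shift applied to them are adapted to the adversarial examples.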