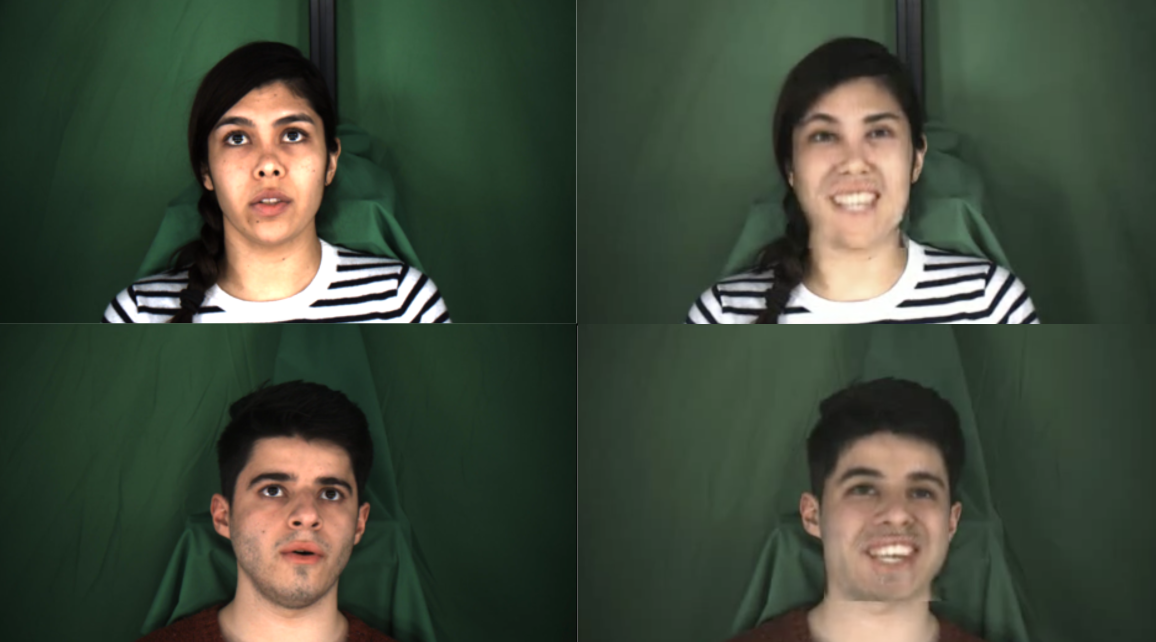

We present Wav2Lip-Emotion, a video-to-video translation architecture that modifies facial expressions of emotion in videos of speakers. Previous work modifies emotion in images, uses a single image to produce a video with animated emotion, or puppets facial expressions in videos with landmarks from a reference video. However, many use cases such as modifying an actor's performance in post-production, coaching individuals to be more animated speakers, or touching up emotion in a teleconference require a video-to-video translation approach. We explore a method to maintain speakers' lip movements, identity, and pose while translating their expressed emotion. Our approach extends an existing multi-modal lip synchronization architecture to modify the speaker's emotion using L1 reconstruction and pre-trained emotion objectives. We also propose a novel automated emotion evaluation approach and corroborate it with a user study. These find that we succeed in modifying emotion while maintaining lip synchronization. Visual quality is somewhat diminished, with a trade off between greater emotion modification and visual quality between model variants. Nevertheless, we demonstrate (1) that facial expressions of emotion can be modified with nothing other than L1 reconstruction and pre-trained emotion objectives and (2) that our automated emotion evaluation approach aligns with human judgements.

翻译:我们展示了Wav2Lip-Emotion(一个视频到视频的翻译结构),它改变了演讲者视频中的情绪面部表达。先前的工作改变了图像中的情感,使用了单一图像来制作带有动动动情感的视频,或者用参考视频中的标志性视频的木偶面部表达。然而,许多使用的案例,例如修改演员在制作后的表现,引导个人成为更动动听的演讲者,或者在电话会议中触摸情感,这需要视频到视频到视频的翻译方法。我们探索了一种在翻译其表达的情感时保持演讲者的嘴唇运动、身份和姿势的方法。我们的方法扩展了现有的多模式的嘴唇同步结构,用L1重建和预先训练过的情感目标来改变演讲者的情绪。我们还提出了一个新的自动情绪评价方法,并通过用户研究加以证实。这些案例发现,我们在改变情绪的同时保持唇动能成功。视觉质量有所减弱,在更大的情绪改变和视觉变式之间的交易中。然而,我们展示:(1) 面部情感表达方式可以与L1重建和预先训练过的情感判断方法相比,可以与人类的自动情绪评价一致。