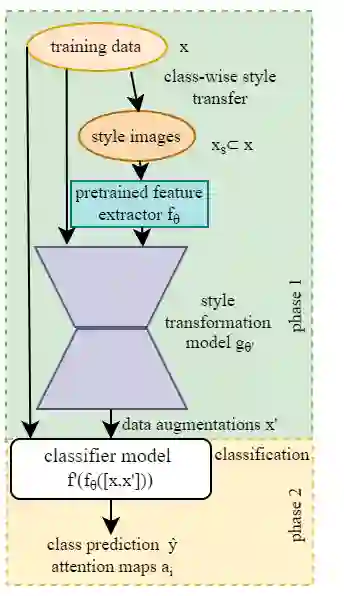

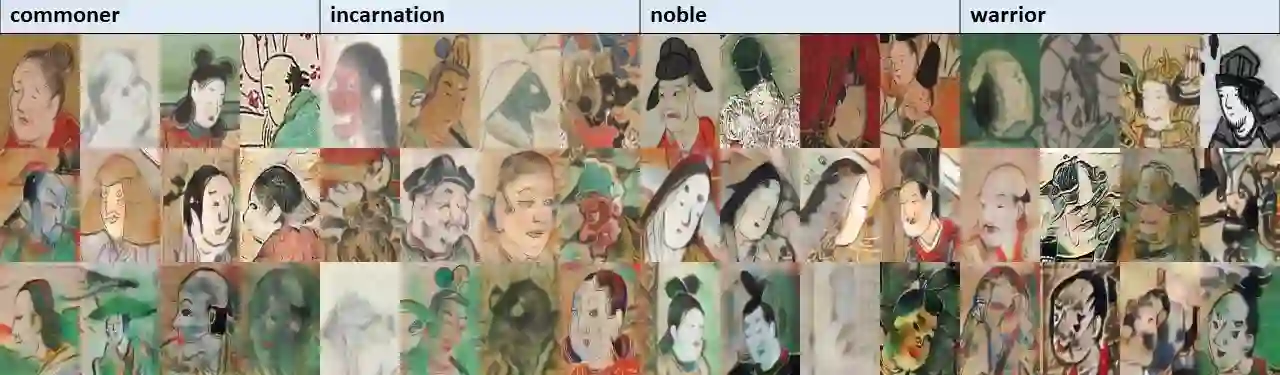

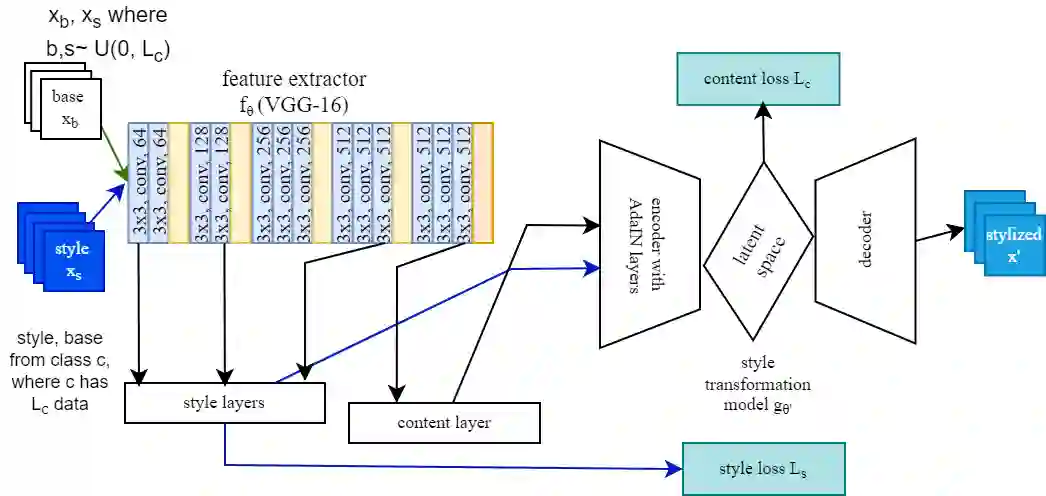

Training classifiers on painting collections is difficult because of model bias from domain gaps and data bias from the uneven distribution of artistic styles. Previous techniques such as data distillation, traditional data augmentation, and style transfer improve classifier training using task-specific training datasets or domain adaptation. We propose a system that handles data bias in small painting datasets such as the Kaokore dataset while simultaneously accounting for domain adaptation when fine-tuning a model trained on real-world images. Our system consists of two stages: style transfer and classification. In the style transfer stage, we generate stylized training samples per class from uniformly sampled content and style images, and we train the style transformation network per domain. In the classification stage, we can interpret the effectiveness of the style and content layers at the attention layers when training on both the original training dataset and the stylized images. We can trade off model performance against convergence by dynamically varying the proportion of augmented samples in the majority and minority classes. We achieve results comparable to the state of the art (SOTA) with fewer training epochs and a classifier with fewer trainable parameters.
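The per-class sampling described above can be illustrated with a minimal Python sketch. It is not the authors' implementation: the function name `plan_stylized_samples`, the parameters `p_minority` and `p_majority`, and the rule that a class counts as "majority" when it has at least the mean number of samples are all assumptions made for illustration. The sketch only plans which (content, style) pairs to stylize; the style transformation network itself is out of scope.

```python
import random
from collections import defaultdict


def plan_stylized_samples(labels, style_pool_size,
                          p_minority=1.0, p_majority=0.1, seed=0):
    """Plan (content_index, style_index, label) triples for augmentation.

    Assumptions (hypothetical, not from the paper):
    - a class is "majority" if its size is >= the mean class size;
    - each class receives round(proportion * class_size) stylized samples;
    - content images are drawn uniformly from the class itself, and style
      images uniformly from a pool of `style_pool_size` images.
    """
    rng = random.Random(seed)

    # Group dataset indices by class label.
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    mean_count = len(labels) / len(by_class)
    plan = []
    for label, indices in sorted(by_class.items()):
        is_majority = len(indices) >= mean_count
        proportion = p_majority if is_majority else p_minority
        n_augmented = round(proportion * len(indices))
        for _ in range(n_augmented):
            content_idx = rng.choice(indices)            # uniform over class
            style_idx = rng.randrange(style_pool_size)   # uniform over styles
            plan.append((content_idx, style_idx, label))
    return plan


if __name__ == "__main__":
    # Toy imbalanced dataset: 8 samples of class "a", 2 of class "b".
    toy_labels = ["a"] * 8 + ["b"] * 2
    for triple in plan_stylized_samples(toy_labels, style_pool_size=3,
                                        p_minority=1.0, p_majority=0.25):
        print(triple)
```

Raising `p_minority` relative to `p_majority` shifts the augmented set toward under-represented classes. Changing these two values between training runs or epochs is one simple way to realize the "dynamically varying" proportion mentioned in the abstract, under the assumptions stated above.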
