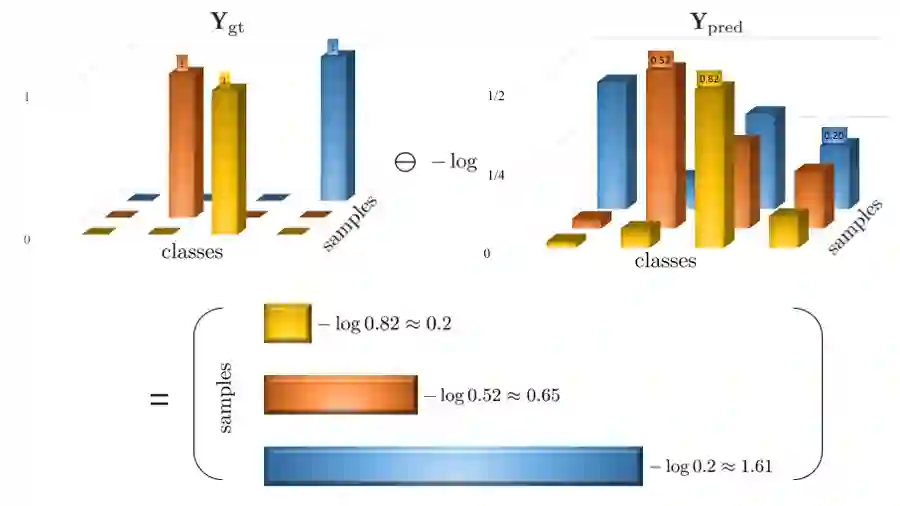

The goal of this document is to provide a pedagogical introduction to the main concepts underpinning the training of deep neural networks using gradient descent, with the gradients computed by a process known as backpropagation. Although we focus on a very influential class of architectures called "convolutional neural networks" (CNNs), the approach is generic and useful to the machine learning community as a whole. Motivated by the observation that derivations of backpropagation are often obscured by clumsy, index-heavy narratives that appear somewhat mathemagical, we aim to offer a conceptually clear, vectorized description that clearly articulates the higher-level logic. Following the principle that "writing is nature's way of letting you know how sloppy your thinking is", we try to make the calculations meticulous and self-contained, yet as intuitive as possible. Taking nothing for granted, we provide ample illustrations as visual guides and an extensive bibliography for further exploration. (For the sake of clarity, long mathematical derivations and visualizations have been broken up into short "summarized views" and longer "detailed views", encoded into the PDF as optional content groups. Some figures contain animations designed to illustrate important concepts in a more engaging style. For these reasons, we advise downloading the document and opening it locally with Adobe Acrobat Reader. Other viewers were not tested and may not render the detailed views or animations correctly.)
