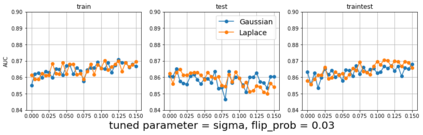

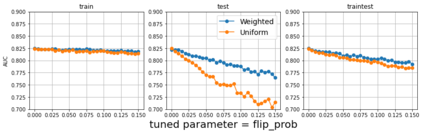

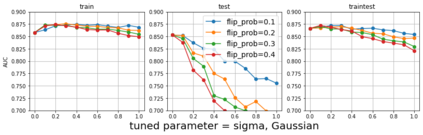

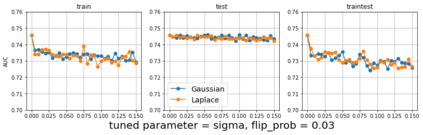

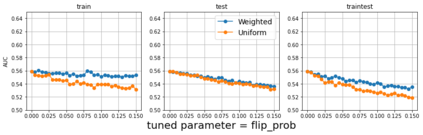

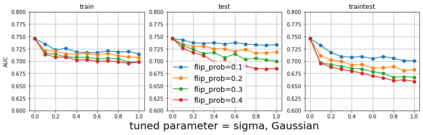

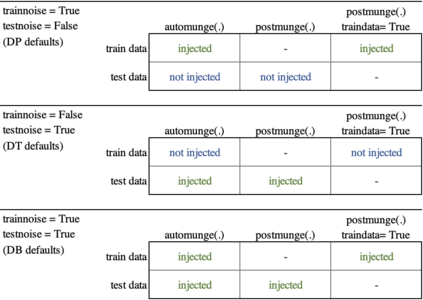

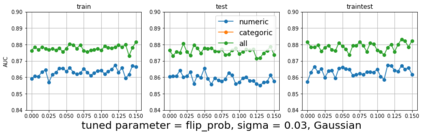

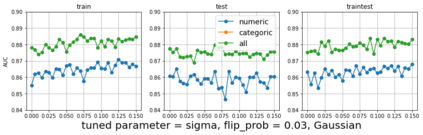

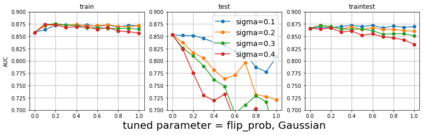

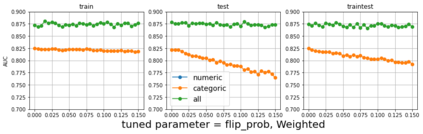

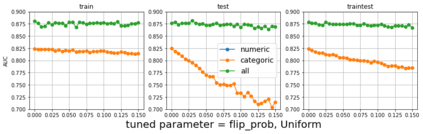

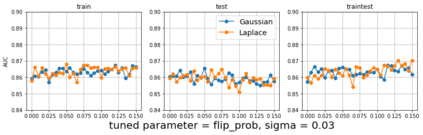

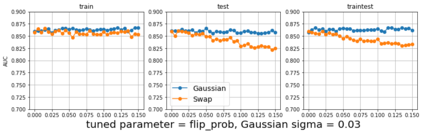

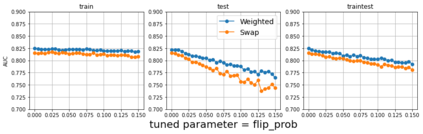

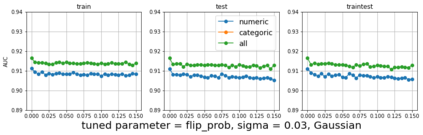

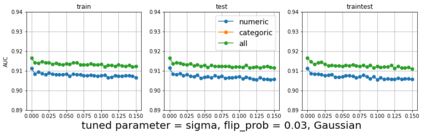

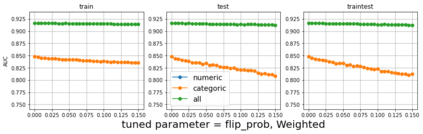

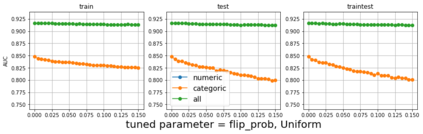

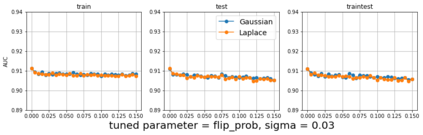

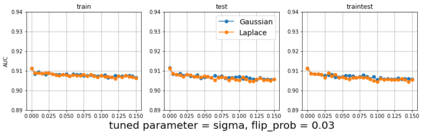

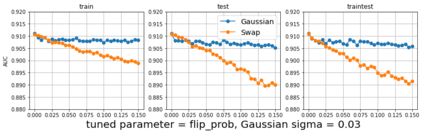

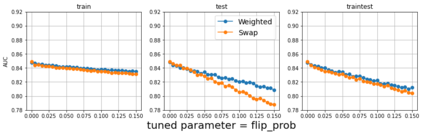

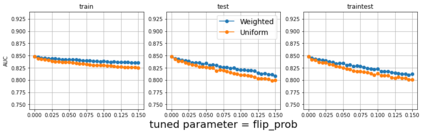

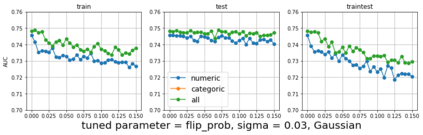

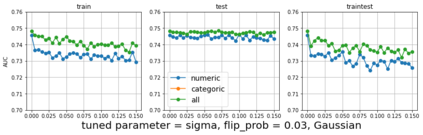

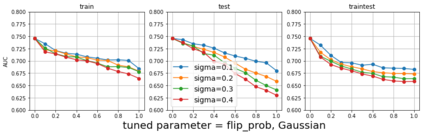

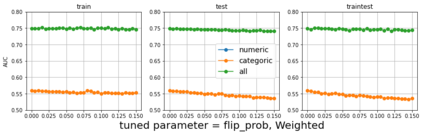

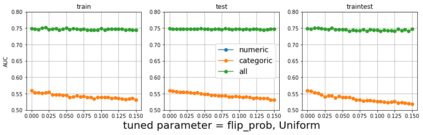

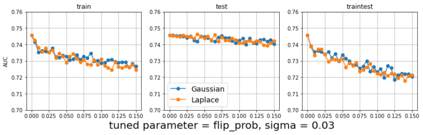

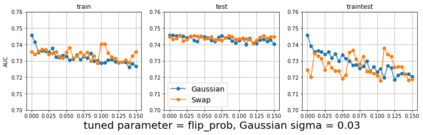

Injecting Gaussian noise into training features is well known to have regularization properties. This paper considers noise injections to numeric or categorical tabular features as passed to inference, which makes inference outcomes non-deterministic and may be relevant to fairness considerations, adversarial example protection, or other use cases that benefit from non-determinism. We offer the Automunge library for tabular preprocessing as a resource for the practice. The library includes options to integrate random sampling or entropy seeding with the support of quantum circuits, for an improved randomness profile in comparison to pseudorandom number generators. Benchmarking shows that neural networks may demonstrate improved performance when a known noise profile is mitigated with corresponding injections to both training and inference. It also shows that gradient boosting appears to be robust to a mild noise profile in inference, suggesting that stochastic perturbations could be integrated into existing data pipelines for previously trained gradient boosting models.
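As a rough illustration of the practice described above, the following is a minimal NumPy sketch of injecting noise into numeric and categorical features before they are passed to inference. It does not use the Automunge API. The function names, the `sigma` and `flip_prob` parameters, and their default values are hypothetical and chosen only for illustration. The numeric sketch assumes features have already been normalized, so that a single noise scale is meaningful across columns.

```python
import numpy as np


def inject_gaussian_noise(features, sigma=0.05, flip_prob=1.0, rng=None):
    """Return a noisy copy of a numeric feature matrix.

    Hypothetical helper: each entry receives zero-mean Gaussian noise with
    standard deviation `sigma`, with probability `flip_prob`.
    """
    rng = np.random.default_rng() if rng is None else rng
    features = np.asarray(features, dtype=float)
    noise = rng.normal(loc=0.0, scale=sigma, size=features.shape)
    # Boolean mask selecting which entries are actually perturbed.
    mask = rng.random(features.shape) < flip_prob
    return features + noise * mask


def inject_categorical_noise(labels, categories, flip_prob=0.03, rng=None):
    """Return a copy of a categorical column with some entries resampled.

    Hypothetical helper: with probability `flip_prob`, an entry is replaced
    by a uniform draw from `categories` (which may equal the original value).
    """
    rng = np.random.default_rng() if rng is None else rng
    labels = np.asarray(labels)
    categories = np.asarray(categories)
    mask = rng.random(labels.shape) < flip_prob
    replacements = rng.choice(categories, size=labels.shape)
    return np.where(mask, replacements, labels)


# Usage: perturb a small batch before calling a trained model's predict method.
# The seed here is fixed for reproducibility; in practice it could instead be
# drawn from an external entropy source (an assumption, not shown here).
rng = np.random.default_rng(seed=42)
numeric_batch = np.array([[0.1, -1.2], [0.7, 0.3]])
noisy_numeric = inject_gaussian_noise(numeric_batch, sigma=0.05, rng=rng)
noisy_colors = inject_categorical_noise(
    ["red", "blue", "red"], ["red", "blue", "green"], flip_prob=0.1, rng=rng
)
```

Passing the generator in explicitly keeps the sampling source swappable, so a pseudorandom generator could be replaced by one seeded from another entropy source without changing the injection logic.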
