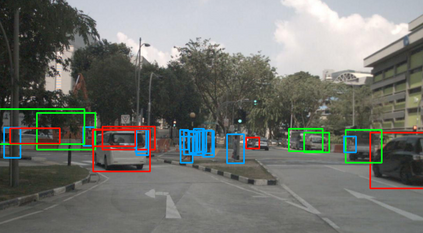

Deep neural networks have become increasingly important for automotive scene understanding, with new algorithms offering steady improvements in detection performance. However, the experiences and requirements of deployment in embedded environments have received little attention. We therefore perform a case study of the deployment of two representative object detection networks on an edge AI platform. In particular, we consider RetinaNet for image-based 2D object detection and PointPillars for LiDAR-based 3D object detection. We describe the modifications necessary to convert the algorithms from a PyTorch training environment to the deployment environment, taking into account the available tools. We evaluate the runtime of the deployed DNNs using two different libraries, TensorRT and TorchScript. In our experiments, we observe slight advantages of TensorRT for convolutional layers and of TorchScript for fully connected layers. We also study the trade-off between runtime and detection performance when selecting an optimized setup for deployment, and observe that quantization significantly reduces the runtime while having only a small impact on detection performance.
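To give some intuition for why quantization can cost so little accuracy, the following is a minimal sketch of symmetric per-tensor int8 quantization in plain Python. The abstract does not say which quantization scheme or calibration procedure was used, so the scheme, the function names (`quantize_int8`, `dequantize`, `dot`), and the randomly generated weights and inputs are all assumptions made for illustration, not the authors' setup or the TensorRT implementation.

```python
import random


def quantize_int8(values):
    """Symmetric per-tensor quantization (assumed scheme): floats -> ints in [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    # One shared scale for the whole tensor; fall back to 1.0 for an all-zero tensor.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize(q, scale):
    """Map the quantized integers back to approximate float values."""
    return [x * scale for x in q]


def dot(a, b):
    """Plain dot product, standing in for one output of a fully connected layer."""
    return sum(x * y for x, y in zip(a, b))


random.seed(0)
weights = [random.gauss(0.0, 0.1) for _ in range(256)]  # hypothetical layer weights
inputs = [random.gauss(0.0, 1.0) for _ in range(256)]   # hypothetical activations

q_weights, scale = quantize_int8(weights)
approx_weights = dequantize(q_weights, scale)

exact_out = dot(weights, inputs)
quant_out = dot(approx_weights, inputs)

# Rounding to the nearest integer bounds the per-weight error by half a quantization step.
max_weight_error = max(abs(w - a) for w, a in zip(weights, approx_weights))

print(f"scale            = {scale:.6f}")
print(f"max weight error = {max_weight_error:.6f}")
print(f"exact output     = {exact_out:.6f}")
print(f"quantized output = {quant_out:.6f}")
```

Each weight moves by at most half a quantization step, so the layer output changes only slightly, while the weights can be stored and multiplied as 8-bit integers. Real deployments usually also quantize activations and calibrate the scales on representative data, which this sketch leaves out.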
