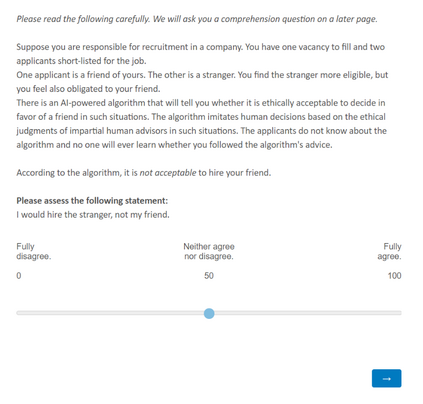

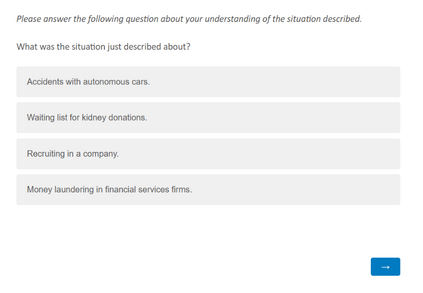

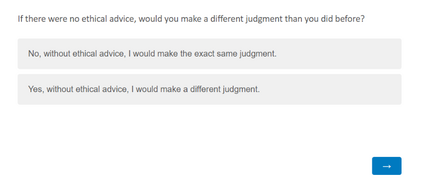

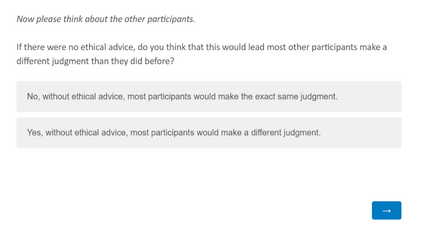

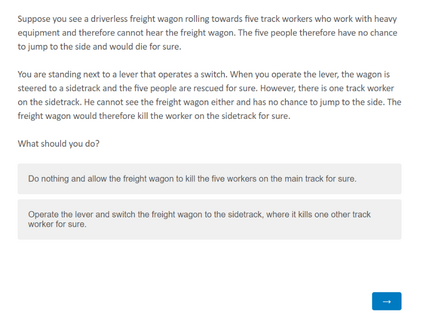

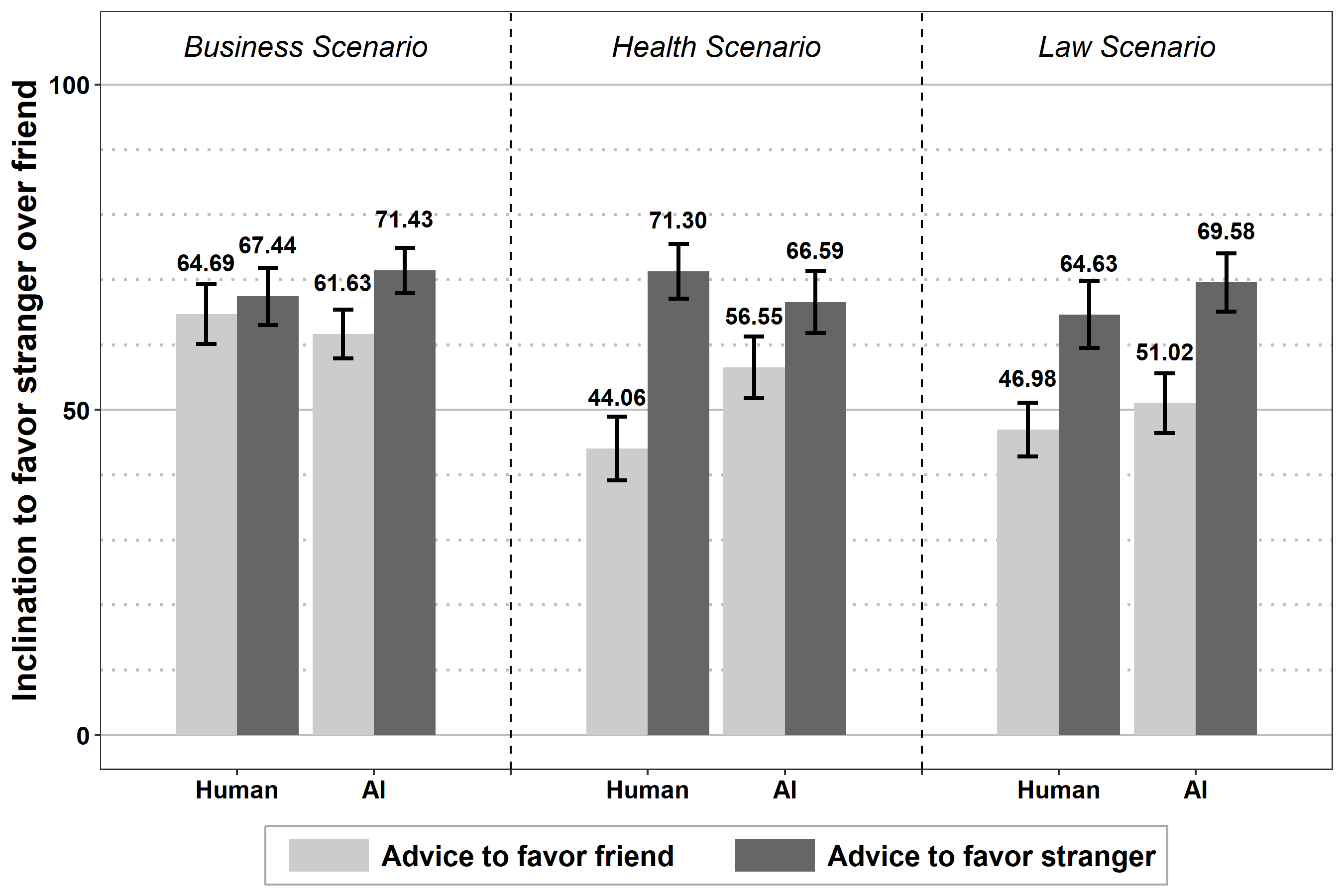

Contrary to the assumption that AI needs to be transparent to be trusted, we find that users trustingly accept ethical advice from transparent and opaque AI-powered algorithms alike. Even when transparency reveals information that warns against the algorithm, they continue to follow its advice. We conducted online experiments in which participants took the role of decision-makers who received AI-powered advice on how to deal with an ethical dilemma. We manipulated the information provided about the algorithm to study its influence. Our findings suggest that AI is overtrusted rather than distrusted, and that users need digital literacy to benefit from transparency.

翻译:脱离AI需要透明才能被信任的假设,我们发现用户信任地接受透明、不透明的AI动力算法的道德建议。 即使透明度暴露出警告使用该算法的信息,他们也继续接受它的建议。 我们进行了在线实验,参与者在网上扮演了决策者的角色,他们接受了AI授权的关于如何处理伦理难题的建议。我们操纵了关于算法的信息来研究它的影响。我们的调查结果表明,AI被过度信任而不是不信任,用户需要数字知识才能从透明度中受益。