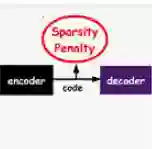

Sparse document representations have been widely used to retrieve relevant documents via exact lexical matching. Owing to the pre-computed inverted index, they support fast ad-hoc search but suffer from the vocabulary mismatch problem. Although recent neural ranking models using pre-trained language models can address this problem, they usually incur expensive query inference costs, implying a trade-off between effectiveness and efficiency. To tackle this trade-off, we propose a novel uni-encoder ranking model, Sparse retriever using a Dual document Encoder (SpaDE), which learns document representations via a dual encoder. Each encoder plays a central role in one of two tasks: (i) adjusting the importance of terms to improve lexical matching and (ii) expanding documents with additional terms to support semantic matching. Furthermore, our co-training strategy trains the dual encoder effectively and avoids unnecessary interference between the two encoders during training. Experimental results on several benchmarks show that SpaDE outperforms existing uni-encoder ranking models.
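
To make the uni-encoder idea concrete, here is a minimal sketch, not SpaDE's implementation, of how a sparse document retriever with two document-side encoders can be served from an inverted index. Everything in it is an illustrative assumption: `term_weighting_encoder` and `term_expansion_encoder` are hypothetical toy stand-ins (raw term frequency and a hand-written expansion table `TOY_EXPANSIONS`) for the learned encoders, and merging their outputs by a per-term sum is assumed, since the abstract does not say how the two are combined. Because only documents are encoded, the query side is just a lookup of its tokens in the index, which is where the efficiency of sparse retrieval comes from.

```python
from collections import defaultdict

# HYPOTHETICAL toy stand-ins for SpaDE's two learned document encoders.
# The real encoders are neural models; these exist only so the sketch runs.
TOY_EXPANSIONS = {
    "car": {"automobile": 0.5, "vehicle": 0.25},
    "cheap": {"inexpensive": 0.75},
}


def term_weighting_encoder(doc_tokens):
    """(i) Weight terms that occur in the document (toy: raw term frequency)."""
    weights = defaultdict(float)
    for tok in doc_tokens:
        weights[tok] += 1.0
    return dict(weights)


def term_expansion_encoder(doc_tokens):
    """(ii) Add related terms that do not occur in the document (toy lookup table)."""
    present = set(doc_tokens)
    added = defaultdict(float)
    for tok in doc_tokens:
        for new_tok, w in TOY_EXPANSIONS.get(tok, {}).items():
            if new_tok not in present:
                added[new_tok] = max(added[new_tok], w)
    return dict(added)


def encode_document(doc_tokens):
    """Merge both encoder outputs into one sparse vector (ASSUMED: per-term sum)."""
    vec = defaultdict(float, term_weighting_encoder(doc_tokens))
    for tok, w in term_expansion_encoder(doc_tokens).items():
        vec[tok] += w
    return dict(vec)


def build_inverted_index(docs):
    """Offline step: map each term to a postings list of (doc_id, weight)."""
    index = defaultdict(list)
    for doc_id, tokens in docs.items():
        for term, w in encode_document(tokens).items():
            index[term].append((doc_id, w))
    return index


def search(index, query_tokens, k=3):
    """Online step: no query encoder; sum the posting weights of the query terms."""
    scores = defaultdict(float)
    for term in set(query_tokens):
        for doc_id, w in index.get(term, []):
            scores[doc_id] += w
    # Highest score first; ties broken by doc_id for a deterministic order.
    return sorted(scores.items(), key=lambda item: (-item[1], item[0]))[:k]


docs = {
    "d1": ["cheap", "car", "rental"],
    "d2": ["luxury", "hotel", "rental"],
}
index = build_inverted_index(docs)
# Neither query term occurs in d1, yet document-side expansion lets d1 match.
print(search(index, ["inexpensive", "automobile"]))  # → [('d1', 1.25)]
```

Replacing the toy functions with learned encoders would change only the weights stored in the postings lists; the query path stays a cheap dictionary lookup, which is the effectiveness/efficiency balance the abstract describes.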