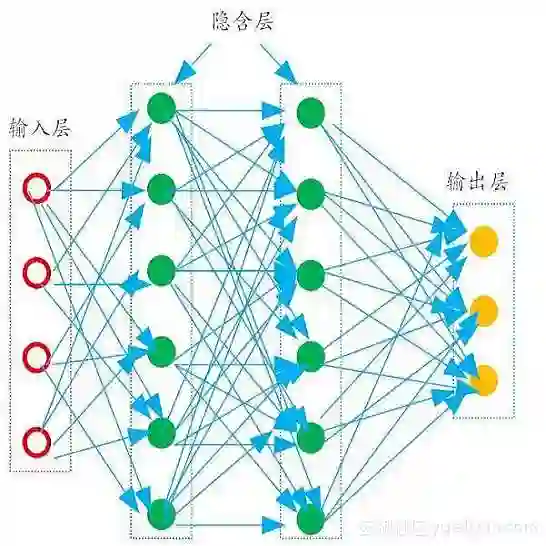

There has been growing interest in the generalization performance of large multilayer neural networks that can be trained to achieve zero training error while still generalizing well on test data. This regime is known as the 'second descent', and it appears to contradict the conventional view that optimal model complexity should reflect an optimal balance between underfitting and overfitting, i.e., the bias-variance trade-off. This paper presents a VC-theoretical analysis of double descent and shows that it can be fully explained by classical VC generalization bounds. We illustrate an application of analytic VC bounds to modeling double descent in classification problems, using empirical results for several learning methods, such as SVM, Least Squares, and Multilayer Perceptron classifiers. In addition, we discuss several possible reasons for the misinterpretation of VC-theoretical results in the machine learning community.
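
The abstract does not state the explicit form of the analytic VC bound it uses. As a minimal sketch, assuming the classical VC bound for binary classification (0/1 loss) in the form given by Vapnik, with worst-case constants `a1 = 4`, `a2 = 2` and confidence level 1 - `eta`, the function below turns a training error rate, a VC dimension `h`, and a sample size `n` into an upper bound on test error. The names `vc_bound`, `a1`, `a2`, and `eta` are illustrative assumptions, not taken from the paper.

```python
import math


def vc_bound(emp_risk: float, h: float, n: int, eta: float = 0.05,
             a1: float = 4.0, a2: float = 2.0) -> float:
    """Classical VC upper bound on expected 0/1 risk (sketch, assumed form).

    Holds with probability at least 1 - eta.
    emp_risk: training (empirical) error rate in [0, 1]
    h:        VC dimension of the model class, assumed 0 < h < n
    n:        number of training samples
    a1, a2:   bound constants; 4 and 2 are the worst-case values (assumption)
    """
    if not (0 < h < n):
        raise ValueError("bound is only meaningful for 0 < h < n")
    # Confidence term: grows with h and shrinks with n.
    eps = a1 * (h * (math.log(a2 * n / h) + 1) - math.log(eta / 4)) / n
    # Bound for classification with bounded loss (B = 1).
    return emp_risk + (eps / 2) * (1 + math.sqrt(1 + 4 * emp_risk / eps))


if __name__ == "__main__":
    # Zero training error, fixed n, increasing (hypothetical) VC dimension.
    n = 10_000
    for h in (50, 200, 1000):
        print(h, round(vc_bound(0.0, h, n), 3))
```

With zero training error the bound reduces to the confidence term `eps`, so it is governed by the ratio of `h` to `n`; for large `h` the value can exceed 1, in which case the bound is vacuous. How `h` should be estimated for large networks is not specified here and is left as an assumption.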
