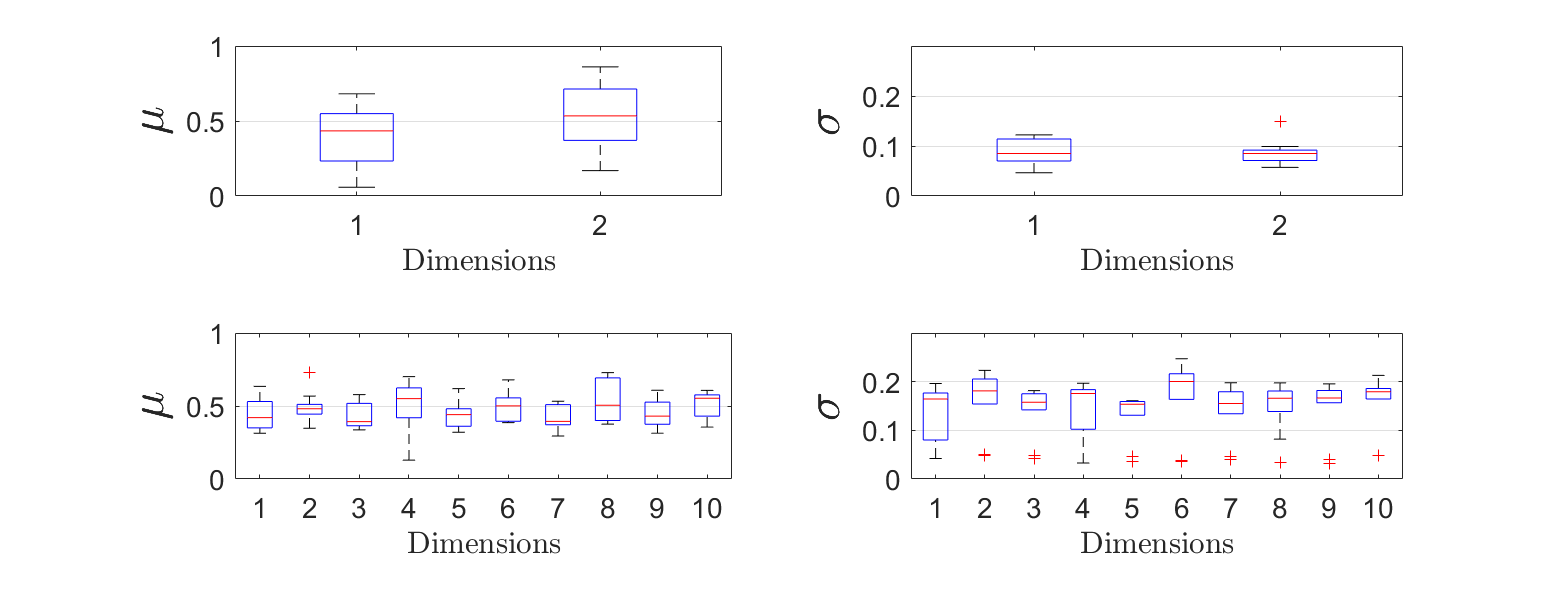

Current advances in Pervasive Computing (PC) rely on the large infrastructures of the Internet of Things (IoT) and Edge Computing (EC). Both IoT and EC can support innovative applications that run close to end users and facilitate their activities. Such applications are built on the collected data and on the processing that users demand in the form of requests. To limit latency, the research community has proposed a number of models for managing data at the edge instead of relying on the Cloud for storage and processing. Requests, usually defined as tasks or queries, demand the processing of specific data. A model is therefore needed that pre-processes the data, preparing them and extracting their statistics before requests arrive. In this paper, we propose an easy-to-implement probabilistic scheme for selecting the appropriate host for incoming data. Our aim is to store similar data in the same distributed datasets so that their statistics are known beforehand, while keeping the solidity of each dataset high. By solidity we mean a limited statistical deviation of the data; the scheme thus supports storing highly correlated data in the same dataset. Additionally, we propose an aggregation mechanism for outlier detection that is applied as soon as data arrive. Outliers are transferred to the Cloud for further processing. When data are accepted for local storage, we propose a model for selecting the datasets in which they will be replicated, in order to build a fault-tolerant system. We describe our model analytically and evaluate it through extensive simulations that reveal its strengths and weaknesses.
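
The pipeline summarised above (outlier check on arrival, probabilistic host selection, replica selection) can be illustrated with a minimal sketch. It is not the authors' model, which the abstract does not specify. It assumes scalar data, a Gaussian likelihood as the probabilistic score, a z-score threshold `z_max` for outliers, and the minimum z-score as the aggregation rule. All names (`DatasetStats`, `host_probabilities`, `place`) are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class DatasetStats:
    """Running statistics of one edge dataset (Welford's online algorithm)."""
    n: int = 0
    mean: float = 0.0
    m2: float = 0.0  # sum of squared deviations from the running mean

    def add(self, x: float) -> None:
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)

    @property
    def std(self) -> float:
        # Sample standard deviation; the floor avoids division by zero.
        raw = math.sqrt(self.m2 / (self.n - 1)) if self.n > 1 else 0.0
        return max(raw, 1e-9)


def host_probabilities(x, datasets):
    """Assumed score: Gaussian likelihood of x per dataset, normalised to sum to 1."""
    scores = [math.exp(-0.5 * ((x - d.mean) / d.std) ** 2) / d.std for d in datasets]
    total = sum(scores)
    if total == 0.0:  # every likelihood underflowed; fall back to uniform
        return [1.0 / len(datasets)] * len(datasets)
    return [s / total for s in scores]


def place(x, datasets, replicas=1, z_max=3.0):
    """Return (host_index, replica_indices), or (None, []) to forward x to the Cloud."""
    # Assumed aggregation rule: x is an outlier if it fits no dataset,
    # i.e. even its smallest z-score exceeds the threshold.
    z_scores = [abs(x - d.mean) / d.std for d in datasets]
    if min(z_scores) > z_max:
        return None, []
    probs = host_probabilities(x, datasets)
    ranking = sorted(range(len(datasets)), key=lambda i: probs[i], reverse=True)
    # Most probable dataset hosts x; the next best ones hold the replicas.
    return ranking[0], ranking[1:1 + replicas]
```

Keeping only a count, mean, and sum of squared deviations per dataset means the statistics are available before any request arrives, without rescanning the stored data. A real deployment would also need multivariate data and a policy for datasets that are still empty, neither of which this sketch covers.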
