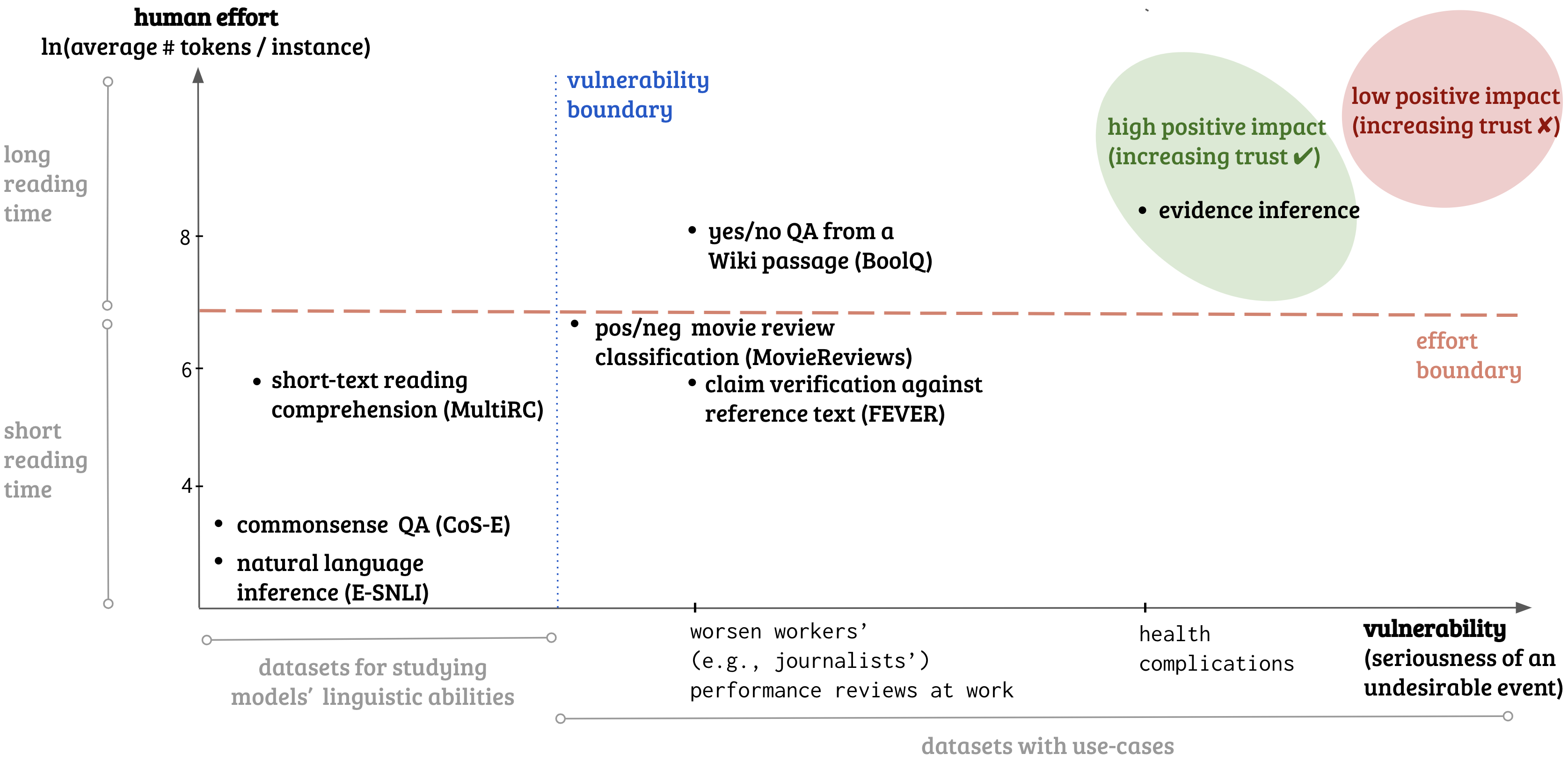

Trust is a central component of the interaction between people and AI, in that 'incorrect' levels of trust may cause misuse, abuse or disuse of the technology. But what, precisely, is the nature of trust in AI? What are the prerequisites and goals of the cognitive mechanism of trust, and how can we promote them, or assess whether they are being satisfied in a given interaction? This work aims to answer these questions. We discuss a model of trust inspired by, but not identical to, sociology's interpersonal trust (i.e., trust between people). This model rests on two key properties: the vulnerability of the user, and the ability to anticipate the impact of the AI model's decisions. We incorporate a formalization of 'contractual trust', under which trust between a user and an AI is trust that some implicit or explicit contract will hold. We also formalize 'trustworthiness' (which diverges from the notion of trustworthiness in sociology), and with it the concepts of 'warranted' and 'unwarranted' trust. We then present the possible causes of warranted trust as intrinsic reasoning and extrinsic behavior, and discuss how to design trustworthy AI, how to evaluate whether trust has manifested, and whether it is warranted. Finally, we elucidate the connection between trust and XAI using our formalization.

翻译:信任是人与AI之间互动的一个核心组成部分, 即“ 不正确的”信任水平可能导致技术的滥用、 滥用或不使用。 但是, 信任在AI中的性质究竟是什么? 信任的认知机制的先决条件和目标是什么? 信任的认知机制的前提和目标是什么, 我们怎样才能促进这些先决条件和目标? 这项工作的目的是解答这些问题。 我们讨论的是受社会学人际信任( 即人与人之间的信任)启发但并不完全相同的信任模式。 这个模式取决于用户脆弱性的两大特性, 以及预测AI模式决定影响的能力。 我们把“ 合同信任” 正式化了, 用户与AI之间的信任就是某种隐含或明确的合同能够维持的信任, 以及“ 信任性” ( 与社会学中的信任性概念脱钩 ) 以及“ 战争” 和“ 不信任性” 概念。 然后我们提出信任的可能原因, 作为内在的推理和终结行为, 以及预测AI 模式决定影响的能力。 我们把“ 合同性信任” 正式化, 用户与AI 之间的信任性是什么样的解释。