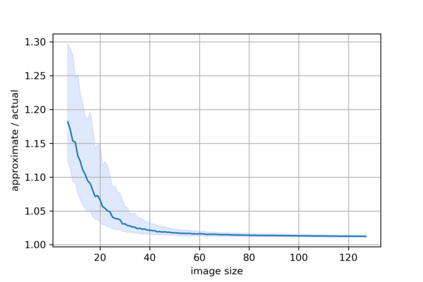

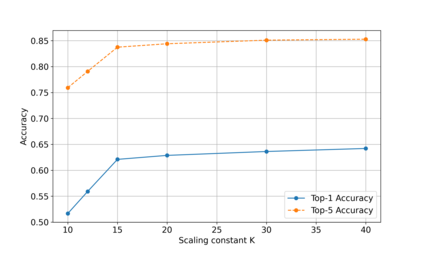

A growing number of models require control over the spectral norm of a neural network's convolutional layers. Many methods exist for estimating and enforcing upper bounds on these norms during training, but they are typically costly in memory or time. In this work, we introduce a very simple method for spectral normalization of depthwise separable convolutions that adds negligible computational and memory overhead. We demonstrate its effectiveness on image classification tasks using standard architectures such as MobileNetV2.
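To make the quantity being controlled concrete, here is a minimal sketch of a generic baseline; it is not the method introduced in this work, which the abstract does not describe. It relies on the fact that the pointwise (1x1) stage of a depthwise separable convolution applies the same channel-mixing matrix at every spatial position, so its spectral norm equals the largest singular value of that matrix. The function names `spectral_norm_power_iteration` and `normalize_pointwise`, and the parameters `num_iters` and `max_norm`, are hypothetical and chosen only for illustration.

```python
import numpy as np


def spectral_norm_power_iteration(weight, num_iters=100, seed=0):
    """Estimate the largest singular value of a 2-D matrix by power iteration.

    `weight` is assumed to be the (out_channels, in_channels) matrix of a
    1x1 pointwise convolution. This is a generic estimator, not the
    paper's method.
    """
    rng = np.random.default_rng(seed)
    v = rng.standard_normal(weight.shape[1])
    v /= np.linalg.norm(v)
    eps = 1e-12  # guards against division by zero for an all-zero matrix
    for _ in range(num_iters):
        u = weight @ v
        u /= np.linalg.norm(u) + eps
        v = weight.T @ u
        v /= np.linalg.norm(v) + eps
    # With converged unit vectors u and v, u^T W v approximates sigma_max.
    return float(u @ weight @ v)


def normalize_pointwise(weight, max_norm=1.0, num_iters=100):
    """Rescale `weight` so its estimated spectral norm is at most `max_norm`."""
    sigma = spectral_norm_power_iteration(weight, num_iters)
    # Only shrink; matrices already within the bound are left unchanged.
    return weight / max(1.0, sigma / max_norm)


if __name__ == "__main__":
    w = np.diag([3.0, 1.0, 0.5])
    print(spectral_norm_power_iteration(w))
    print(np.linalg.norm(normalize_pointwise(w), 2))
```

Running power iteration at every training step is one example of the time cost that the abstract attributes to existing approaches. The depthwise stage is not covered by this sketch.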

