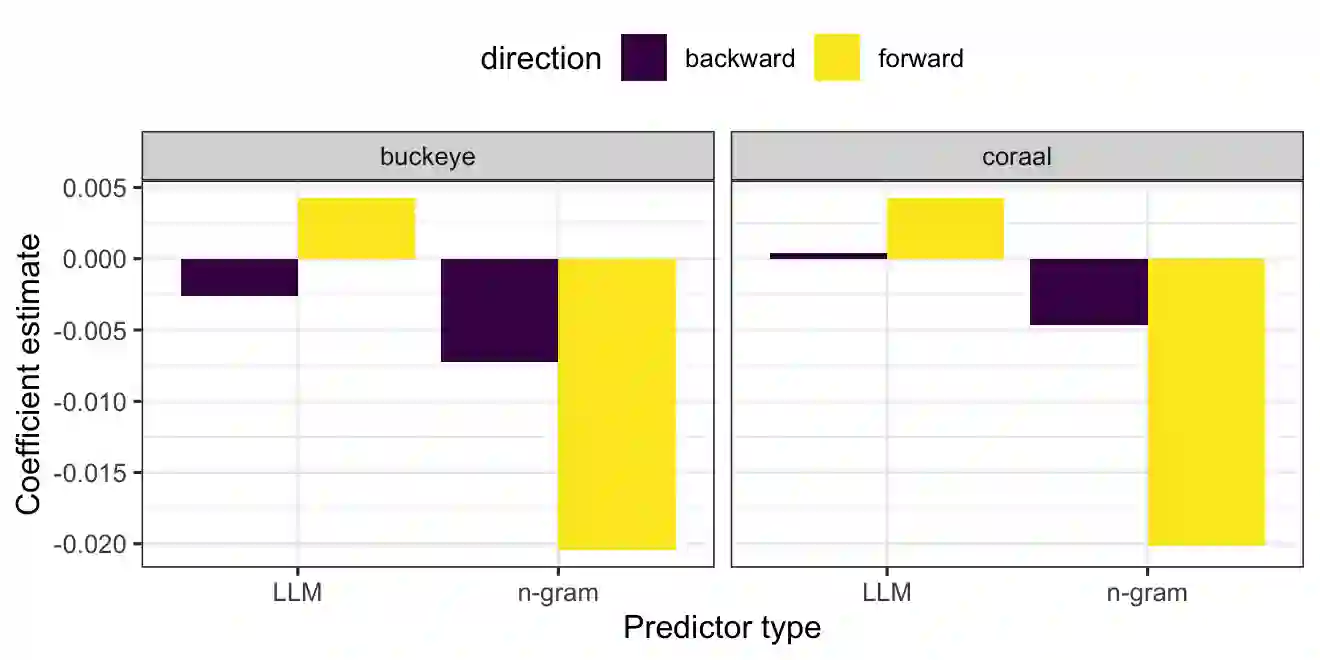

The primary research questions of this paper center on defining how much context is necessary and/or appropriate when investigating the relationship between language model probabilities and cognitive phenomena. We investigate whether whole utterances are necessary to observe probabilistic reduction, and demonstrate that n-gram representations suffice as cognitive units of planning.
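The abstract treats context size as a variable: a word's probability can be conditioned on the whole preceding utterance or only on the previous n-1 words. As a minimal illustrative sketch of what an n-gram-sized context means (not the authors' models, which this excerpt does not describe), the hypothetical function `ngram_surprisal` below estimates per-word surprisal, -log2 P(w_i | w_{i-n+1} ... w_{i-1}), from unsmoothed maximum-likelihood counts over a toy token list. The corpus, the `<s>` padding symbol, and the function name are assumptions made for illustration.

```python
import math
from collections import Counter


def ngram_surprisal(tokens, n):
    """Hypothetical helper: surprisal (in bits) of each token given its n-1
    preceding tokens, using unsmoothed maximum-likelihood counts estimated
    from the same token list (so every observed n-gram has nonzero count)."""
    # Pad the start so every token has a full context of n-1 items.
    padded = ["<s>"] * (n - 1) + tokens
    # Count each n-gram ending at a real token, and each (n-1)-token context.
    ngrams = Counter(tuple(padded[i:i + n]) for i in range(len(tokens)))
    contexts = Counter(tuple(padded[i:i + n - 1]) for i in range(len(tokens)))
    surprisals = []
    for i in range(len(tokens)):
        gram = tuple(padded[i:i + n])
        # P(w_i | context) = count(context, w_i) / count(context)
        prob = ngrams[gram] / contexts[gram[:-1]]
        surprisals.append(-math.log2(prob))
    return surprisals


if __name__ == "__main__":
    # Toy corpus (assumption, for illustration only).
    tokens = "the cat sat on the mat the cat ran".split()
    for n in (1, 2, 3):
        rounded = [round(s, 2) for s in ngram_surprisal(tokens, n)]
        print(f"n={n}:", list(zip(tokens, rounded)))
```

On this toy input, the second word "cat" has a unigram surprisal of log2(9/2) ≈ 2.17 bits but a bigram surprisal of log2(3/2) ≈ 0.58 bits, showing how even one word of context can make a word more predictable. Real studies of probabilistic reduction would use held-out estimates with smoothing or a neural language model rather than in-sample counts.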
