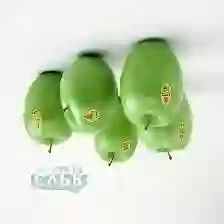

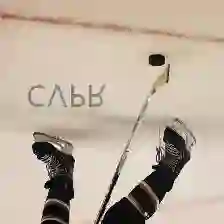

Recent research has demonstrated that adding imperceptible perturbations to original images can fool deep learning models. However, current adversarial perturbations usually take the form of noise and thus have no practical meaning. Image watermarking is a technique widely used for copyright protection. An image watermark can be regarded as a kind of meaningful noise: adding it to the original image neither affects people's understanding of the image content nor arouses suspicion. It is therefore interesting to generate adversarial examples using watermarks. In this paper, we propose a novel watermark perturbation for adversarial examples (Adv-watermark), which combines image watermarking techniques with adversarial example algorithms. Adding a meaningful watermark to clean images is enough to attack DNN models. Specifically, we propose a novel optimization algorithm, called Basin Hopping Evolution (BHE), to generate adversarial watermarks in the black-box attack mode. Thanks to BHE, Adv-watermark requires only a few queries to the threat models to complete an attack. A series of experiments conducted on the ImageNet and CASIA-WebFace datasets shows that the proposed method can efficiently generate adversarial examples and outperforms state-of-the-art attack methods. Moreover, Adv-watermark is more robust against image transformation defense methods.
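To make the idea of a watermark perturbation concrete, the following is a minimal sketch, not the authors' implementation. It assumes the watermark is alpha-blended onto the image and that the attacker searches over its position and transparency using only model queries. The abstract does not describe how BHE works, so plain random search is used here as a stand-in; the names `apply_watermark`, `random_search_attack`, and `true_prob` are hypothetical.

```python
import numpy as np


def apply_watermark(image, watermark, x, y, alpha):
    """Alpha-blend `watermark` onto `image` with its top-left corner at (x, y)."""
    out = image.astype(np.float64).copy()
    h, w = watermark.shape[:2]
    region = out[y:y + h, x:x + w]
    out[y:y + h, x:x + w] = (1.0 - alpha) * region + alpha * watermark
    return out


def random_search_attack(image, watermark, true_prob, n_queries=50, seed=0):
    """Query-limited random search over watermark position and transparency.

    `true_prob` is a hypothetical black-box callable returning the model's
    confidence in the correct label; lower is better for the attacker.
    This is plain random search, a stand-in for BHE, not BHE itself.
    """
    rng = np.random.default_rng(seed)
    img_h, img_w = image.shape[:2]
    wm_h, wm_w = watermark.shape[:2]
    best_params, best_score = None, float("inf")
    for _ in range(n_queries):
        # Sample a placement that keeps the watermark fully inside the image.
        x = int(rng.integers(0, img_w - wm_w + 1))
        y = int(rng.integers(0, img_h - wm_h + 1))
        # The transparency range is an assumption chosen for illustration.
        alpha = float(rng.uniform(0.1, 0.5))
        score = true_prob(apply_watermark(image, watermark, x, y, alpha))
        if score < best_score:
            best_params, best_score = (x, y, alpha), score
    return best_params, best_score
```

In use, `true_prob` would wrap a call to the target classifier, and each loop iteration costs exactly one query, which is the budget the abstract refers to when it says the attack needs only a few queries.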
