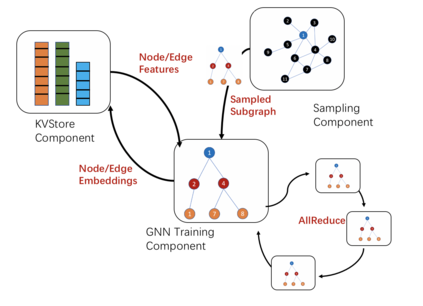

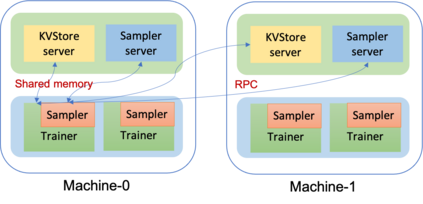

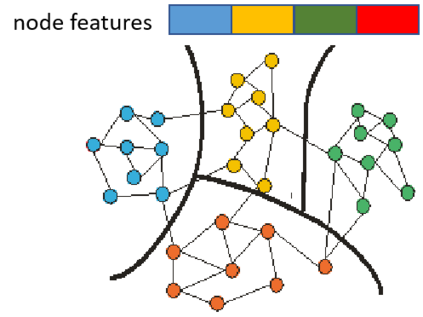

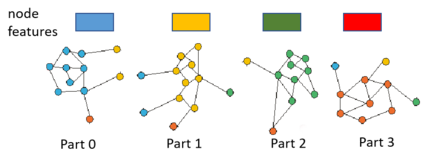

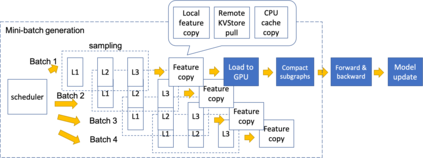

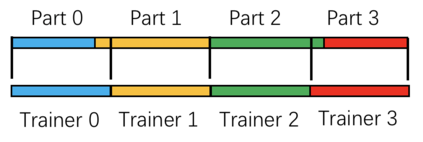

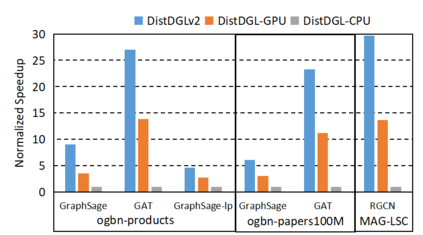

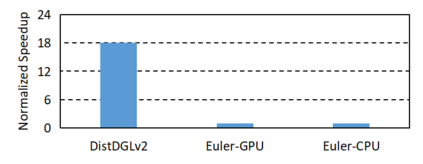

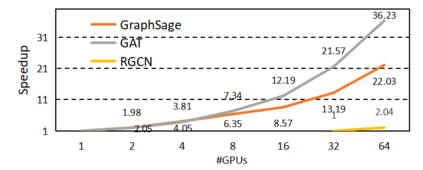

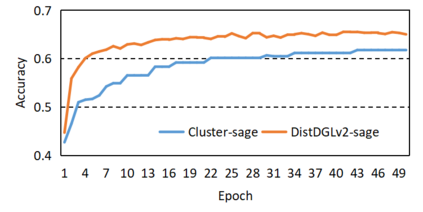

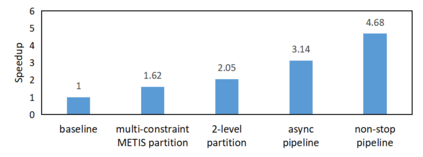

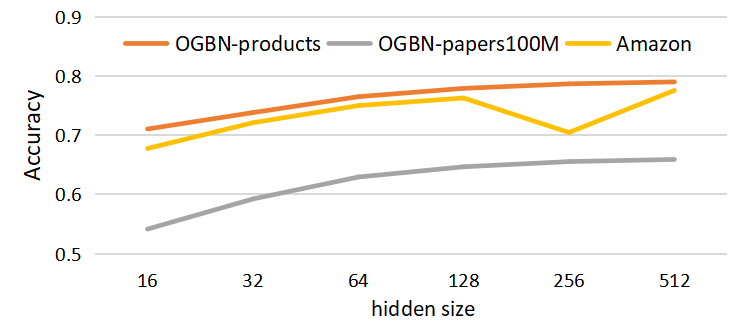

Graph neural networks (GNNs) have shown great success in learning from graph-structured data. They are widely used in applications such as recommendation, fraud detection, and search. In these domains, the graphs are typically large, containing hundreds of millions or billions of nodes. To tackle this challenge, we develop DistDGLv2, a system that extends DistDGL for training GNNs in a mini-batch fashion, using distributed hybrid CPU/GPU training to scale to large graphs. DistDGLv2 places graph data in distributed CPU memory and performs mini-batch computation on GPUs. DistDGLv2 distributes the graph and its associated data (initial features) across the machines and uses this distribution to derive a computational decomposition by following an owner-compute rule. DistDGLv2 follows a synchronous training approach and allows the ego-networks that form mini-batches to include non-local nodes. To minimize the overheads associated with distributed computation, DistDGLv2 uses a multi-level graph partitioning algorithm with min-edge cut along with multiple balancing constraints. This localizes computation at both the machine level and the GPU level and statically balances the computation. DistDGLv2 deploys an asynchronous mini-batch generation pipeline that makes all computation and data access asynchronous in order to fully utilize all hardware (CPU, GPU, network, PCIe). This combination allows DistDGLv2 to train high-quality models while achieving high parallel efficiency and memory scalability. We demonstrate DistDGLv2 on various GNN workloads. Our results show that DistDGLv2 achieves a 2-3X speedup over DistDGL and an 18X speedup over Euler. It takes only 5-10 seconds to complete an epoch on graphs with hundreds of millions of nodes on a cluster with 64 GPUs.
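The owner-compute rule and the asynchronous mini-batch pipeline described above can be illustrated with a minimal Python sketch. This is not DistDGLv2 code. The function names (`owner_of`, `sample_ego_network`, `train_partition`), the modulo partition map standing in for the multi-level min-edge-cut partitioner, and the deterministic neighbor truncation standing in for sampling are all assumptions made for illustration. A background thread prepares mini-batches and hands them over through a bounded queue, so batch generation can overlap with the consumer's work.

```python
import queue
import threading


def owner_of(node_id, num_parts):
    """Hypothetical partition map: node i belongs to partition i % num_parts.
    (A stand-in for a real min-edge-cut partitioner.)"""
    return node_id % num_parts


def sample_ego_network(seed, adjacency, fanout):
    """Return the seed plus its first `fanout` neighbors.
    Deterministic truncation replaces random sampling for clarity."""
    return [seed] + adjacency.get(seed, [])[:fanout]


def minibatch_producer(seeds, adjacency, batch_size, fanout, out_queue):
    """Build mini-batches in the background and push them to a bounded queue."""
    for start in range(0, len(seeds), batch_size):
        batch_seeds = seeds[start:start + batch_size]
        nodes = sorted({n for s in batch_seeds
                        for n in sample_ego_network(s, adjacency, fanout)})
        out_queue.put((batch_seeds, nodes))  # blocks when the queue is full
    out_queue.put(None)  # sentinel: no more batches


def train_partition(part_id, num_parts, adjacency,
                    batch_size=2, fanout=2, prefetch=2):
    """Consume mini-batches whose seed nodes are owned by `part_id`."""
    # Owner-compute rule: a trainer only draws seeds from its own partition.
    seeds = [n for n in sorted(adjacency) if owner_of(n, num_parts) == part_id]
    batches = queue.Queue(maxsize=prefetch)
    producer = threading.Thread(
        target=minibatch_producer,
        args=(seeds, adjacency, batch_size, fanout, batches),
        daemon=True,
    )
    producer.start()

    processed = []
    while True:
        item = batches.get()
        if item is None:
            break
        batch_seeds, nodes = item
        # Ego-networks may reach nodes owned by other partitions; in a real
        # system their features would be fetched over the network.
        non_local = [n for n in nodes if owner_of(n, num_parts) != part_id]
        processed.append((batch_seeds, nodes, non_local))
    producer.join()
    return processed


if __name__ == "__main__":
    toy_graph = {0: [1, 2], 1: [0, 3], 2: [0], 3: [1]}
    for part in range(2):
        print(part, train_partition(part, 2, toy_graph))
```

In this sketch the bounded queue (`prefetch`) caps how far the producer can run ahead of the consumer, which is one simple way to keep memory use predictable while still hiding batch-generation latency.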
