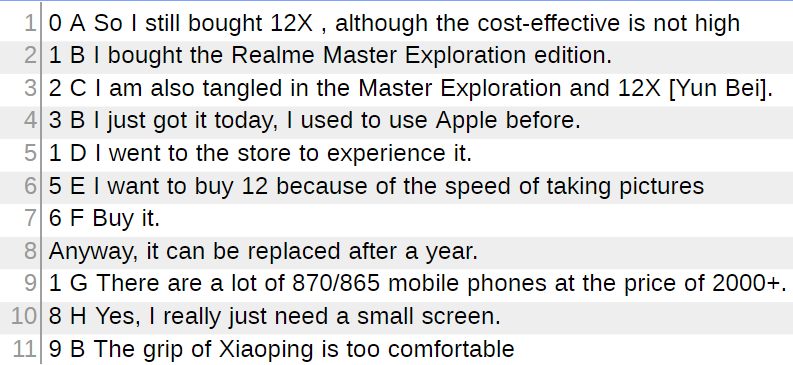

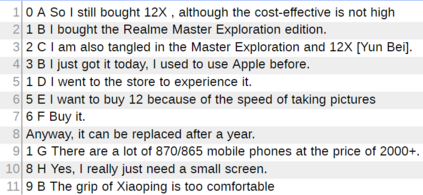

Aspect-based sentiment analysis (ABSA) has developed rapidly in recent decades and shows great potential for real-world applications. Existing ABSA work, however, is mostly limited to single pieces of text, leaving dialogue contexts unexplored. In this work, we introduce a novel task, conversational aspect-based sentiment quadruple analysis (DiaASQ), which aims to detect target-aspect-opinion-sentiment quadruples in a dialogue. DiaASQ bridges the gap between fine-grained sentiment analysis and conversational opinion mining. We manually construct a large-scale, high-quality Chinese dataset and obtain an English version via manual translation. We also propose a neural model to benchmark the task. It performs end-to-end quadruple prediction and incorporates rich dialogue-specific and discourse feature representations for better cross-utterance quadruple extraction. Finally, we point out several directions for future work to facilitate follow-up research on this new task. The DiaASQ data is available at https://github.com/unikcc/DiaASQ
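To make the task output concrete, the following is a minimal sketch of how a target-aspect-opinion-sentiment quadruple might be represented, and how one could check whether its elements are spread across several utterances. The class name, field names, helper functions, sentiment label strings, and the toy dialogue are all illustrative assumptions; they are not the released DiaASQ data format or the authors' model.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Quadruple:
    """Hypothetical container for one sentiment quadruple."""
    target: str     # the entity being discussed
    aspect: str     # the facet of the target being evaluated
    opinion: str    # the expression conveying the evaluation
    sentiment: str  # polarity label; the label set here is an assumption


def utterance_index(span: str, dialogue: List[str]) -> int:
    """Return the index of the first utterance containing span, or -1."""
    for i, utterance in enumerate(dialogue):
        if span in utterance:
            return i
    return -1


def is_cross_utterance(quad: Quadruple, dialogue: List[str]) -> bool:
    """True if target, aspect, and opinion do not all sit in one utterance."""
    indices = {
        utterance_index(span, dialogue)
        for span in (quad.target, quad.aspect, quad.opinion)
    }
    return len(indices) > 1


# Toy dialogue, invented for illustration only.
dialogue = [
    "I just bought the Phone X.",
    "How is the battery so far?",
    "It lasts two days, really durable.",
    "My old Phone Y had a dim screen.",
]

cross = Quadruple("Phone X", "battery", "durable", "positive")
local = Quadruple("Phone Y", "screen", "dim", "negative")

print(is_cross_utterance(cross, dialogue))
print(is_cross_utterance(local, dialogue))
```

In the first quadruple the target, aspect, and opinion each appear in a different utterance, which is the cross-utterance case the abstract highlights. The simple substring matching used here is only a stand-in for real span annotations.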
