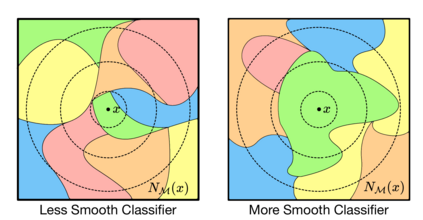

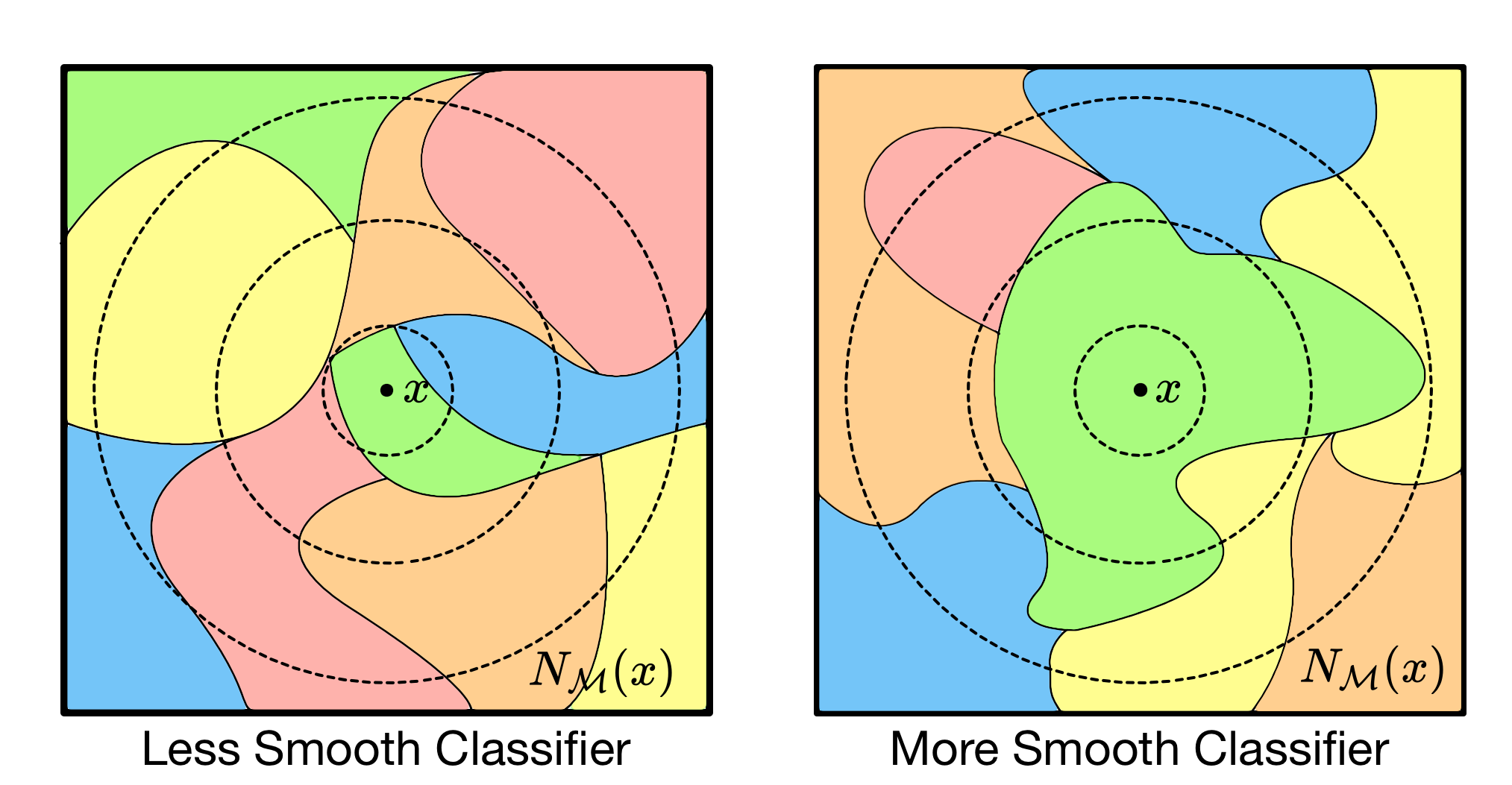

Understanding how machine learning models generalize to new environments is a critical part of their safe deployment. Recent work has proposed a variety of complexity measures that directly predict or theoretically bound the generalization capacity of a model. However, these methods rely on strong assumptions that are not always satisfied in practice. Motivated by the limited settings in which existing measures can be applied, we propose a novel complexity measure based on the local manifold smoothness of a classifier. We define local manifold smoothness as a classifier's output sensitivity to perturbations in the manifold neighborhood around a given test point. Intuitively, a classifier that is less sensitive to these perturbations should generalize better. To estimate smoothness, we sample points using data augmentation and measure the fraction of these points classified into the majority class. Our method only requires selecting a data augmentation method and makes no other assumptions about the model or data distributions, meaning it can be applied even in out-of-domain (OOD) settings where existing methods cannot. In experiments on robustness benchmarks in image classification, sentiment analysis, and natural language inference, we demonstrate a strong and robust correlation between our manifold smoothness measure and actual OOD generalization on over 3,000 models evaluated on over 100 train/test domain pairs.
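
The estimation step described above (sample augmented neighbors of a test point, then take the fraction that fall into the majority class) can be illustrated with a minimal sketch. This is not the authors' implementation: the names `local_smoothness`, `mean_smoothness`, `classify`, `augment`, and `n_samples` are hypothetical, and averaging the per-point scores over a test set is an assumption about how the scores might be aggregated.

```python
import random
from collections import Counter
from typing import Callable, Hashable, Sequence, TypeVar

X = TypeVar("X")


def local_smoothness(
    classify: Callable[[X], Hashable],
    augment: Callable[[X], X],
    x: X,
    n_samples: int = 20,
) -> float:
    """Fraction of augmented copies of x assigned to the majority class.

    `classify` and `augment` are placeholders for any classifier and any
    data augmentation routine; they are not part of the original text.
    """
    # Sample the neighborhood of x by augmenting it repeatedly and
    # recording the predicted label of each augmented copy.
    labels = [classify(augment(x)) for _ in range(n_samples)]
    # Count how many samples received the most frequent label.
    majority_count = Counter(labels).most_common(1)[0][1]
    return majority_count / n_samples


def mean_smoothness(
    classify: Callable[[X], Hashable],
    augment: Callable[[X], X],
    test_points: Sequence[X],
    n_samples: int = 20,
) -> float:
    """Average the per-point scores over a test set (an assumed aggregation)."""
    scores = [local_smoothness(classify, augment, x, n_samples) for x in test_points]
    return sum(scores) / len(scores)


if __name__ == "__main__":
    # Toy setup: a 1-D threshold classifier with Gaussian noise as the
    # "augmentation". Points near the decision boundary should score lower.
    rng = random.Random(0)
    classify = lambda v: v > 0.0
    augment = lambda v: v + rng.gauss(0.0, 0.5)

    print("far from boundary:", local_smoothness(classify, augment, 3.0, 200))
    print("near boundary:   ", local_smoothness(classify, augment, 0.05, 200))
```

With two classes the score lies between 0.5 and 1.0; a value of 1.0 means every sampled neighbor received the same label. Nothing in the sketch touches training data or ground-truth labels, which reflects the abstract's claim that only an augmentation method needs to be chosen.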
