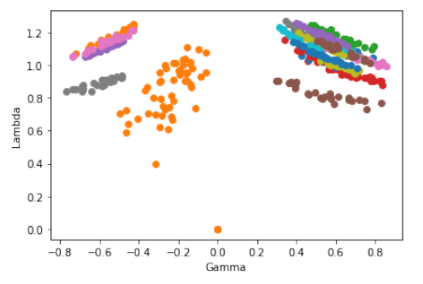

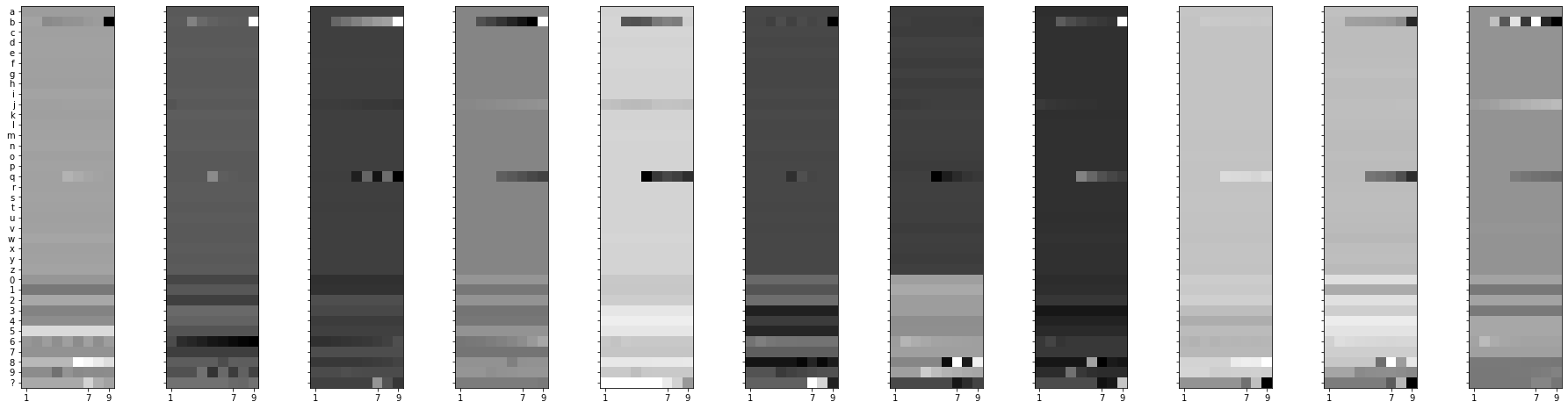

Short-term plasticity (STP) is a mechanism that stores decaying memories in synapses of the cerebral cortex. In computing practice, STP has been used, but mostly in the niche of spiking neurons, even though theory predicts that it is the optimal solution to certain dynamic tasks. Here we present a new type of recurrent neural unit, the STP Neuron (STPN), which indeed turns out to be strikingly powerful. Its key mechanism is that synapses have a state, propagated through time by a self-recurrent connection within the synapse. This formulation enables training the plasticity with backpropagation through time, resulting in a form of learning to learn and forget in the short term. The STPN outperforms all tested alternatives, i.e. RNNs, LSTMs, other models with fast weights, and differentiable plasticity. We confirm this in both supervised and reinforcement learning (RL), and in tasks such as Associative Retrieval, Maze Exploration, Atari video games, and MuJoCo robotics. Moreover, we calculate that, in neuromorphic or biological circuits, the STPN minimizes energy consumption across models, as it depresses individual synapses dynamically. Based on these results, biological STP may have been a strong evolutionary attractor that maximizes both efficiency and computational power. The STPN now brings these neuromorphic advantages also to a broad spectrum of machine learning practice. Code is available at https://github.com/NeuromorphicComputing/stpn
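To make the idea of a stateful synapse concrete, the following is a minimal forward-pass sketch in plain Python. It is not the authors' implementation: the Hebbian form of the update, the `tanh` nonlinearity, and all names (`stp_step`, `W`, `F`, `lam`, `gamma`) are assumptions chosen for illustration. Each synapse has a fixed weight `W[i][j]` plus a short-term state `F[i][j]` that decays by a per-synapse factor `lam[i][j]` (the self-recurrent connection within the synapse) and is driven by pre- and postsynaptic activity scaled by `gamma[i][j]`.

```python
import math


def stp_step(x, h_prev, W, F, lam, gamma):
    """One time step of a toy recurrent layer with short-term plastic synapses.

    Illustrative sketch only; the update rule is an assumption, not the
    published STPN equations.

    x      : list of n_in input values
    h_prev : list of n_out outputs from the previous step
    W      : fixed (long-term) weights, n_out x (n_in + n_out)
    F      : short-term synaptic state, same shape as W
    lam    : per-synapse decay factors, same shape as W
    gamma  : per-synapse plasticity gains, same shape as W
    """
    # Presynaptic activity: current input concatenated with previous output.
    pre = list(x) + list(h_prev)
    n_out, n_pre = len(W), len(pre)

    # Effective weight of each synapse is long-term weight plus short-term state.
    h = [
        math.tanh(sum((W[i][j] + F[i][j]) * pre[j] for j in range(n_pre)))
        for i in range(n_out)
    ]

    # The synaptic state feeds back onto itself (decay) and receives a
    # Hebbian-style drive from post- and presynaptic activity.
    F_next = [
        [lam[i][j] * F[i][j] + gamma[i][j] * h[i] * pre[j] for j in range(n_pre)]
        for i in range(n_out)
    ]
    return h, F_next


if __name__ == "__main__":
    # One input, one unit: the synaptic state builds up on the first step,
    # then decays while the input is silent.
    W = [[1.0, 0.0]]
    F = [[0.0, 0.0]]
    lam = [[0.5, 0.5]]
    gamma = [[1.0, 1.0]]
    h = [0.0]
    for x_t in ([1.0], [0.0], [0.0]):
        h, F = stp_step(x_t, h, W, F, lam, gamma)
        print(h, F)
```

In this sketch `lam` and `gamma` are ordinary parameters. Learning them by backpropagation through time, as the abstract describes, would require writing the same step in an automatic-differentiation framework and unrolling it over the sequence.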
