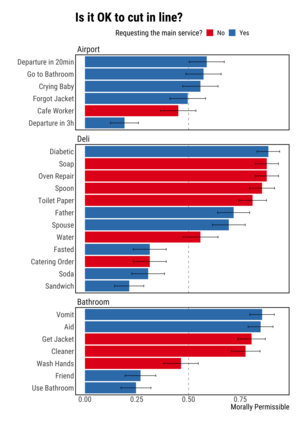

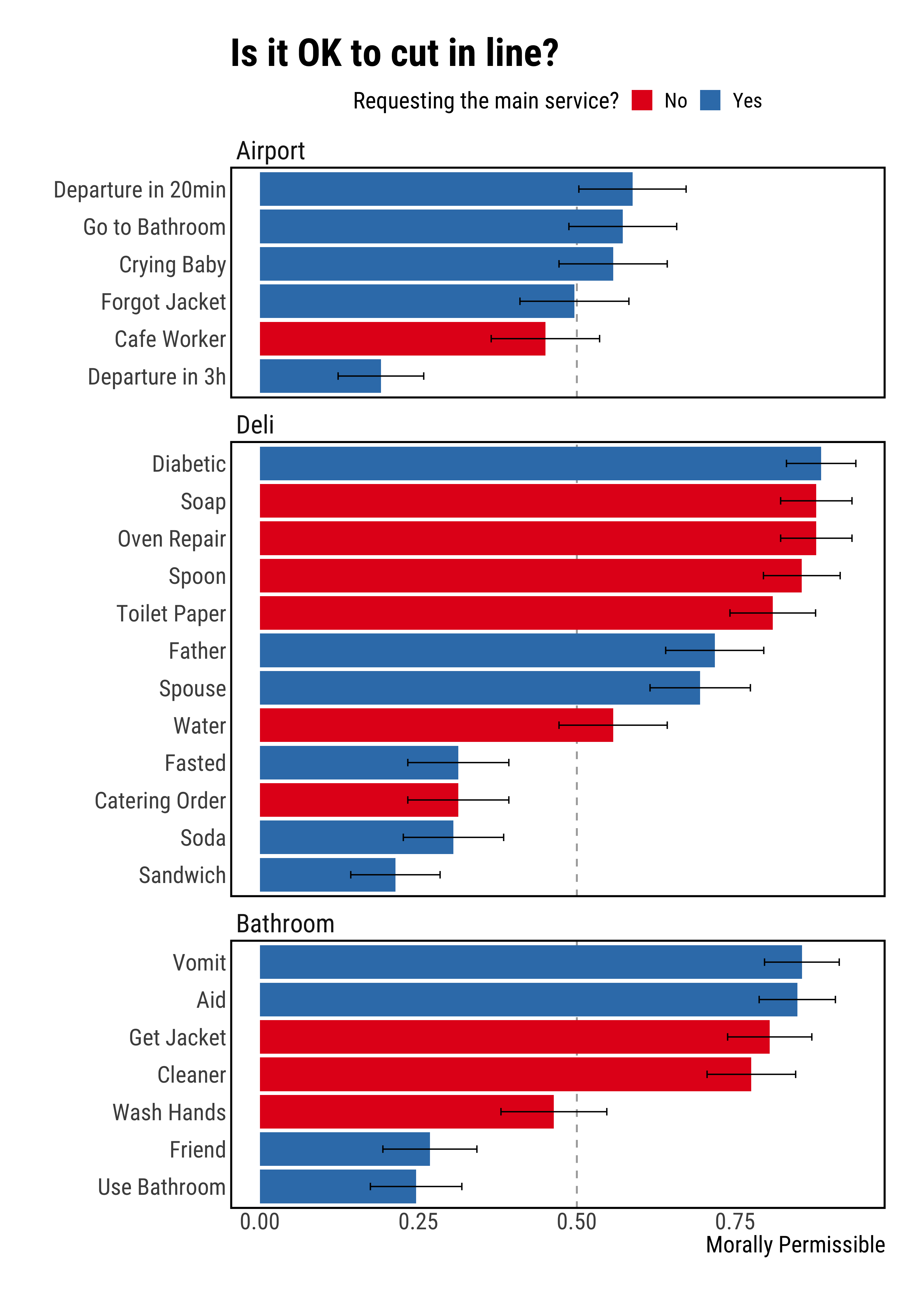

One of the most remarkable things about the human moral mind is its flexibility. We can make moral judgments about cases we have never seen before. We can decide that pre-established rules should be broken. We can invent novel rules on the fly. Capturing this flexibility is one of the central challenges in developing AI systems that can interpret and produce human-like moral judgment. This paper details the results of a study of real-world decision makers who judge whether it is acceptable to break a well-established norm: "no cutting in line." We gather data on how human participants judge the acceptability of line-cutting in a range of scenarios. Then, in order to effectively embed these reasoning capabilities into a machine, we propose a method for modeling them using a preference-based structure, which captures a novel modification to standard "dual process" theories of moral judgment.
