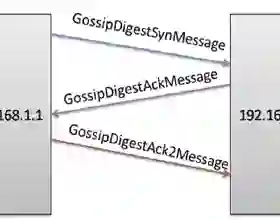

This paper studies the decentralized nonconvex optimization problem $\min_{x\in{\mathbb R}^d} f(x)\triangleq \frac{1}{m}\sum_{i=1}^m f_i(x)$, where $f_i(x)\triangleq \frac{1}{n}\sum_{j=1}^n f_{i,j}(x)$ is the local function on the $i$-th agent of the network. We propose a novel stochastic algorithm called DEcentralized probAbilistic Recursive gradiEnt deScenT (DEAREST), which integrates the techniques of variance reduction, gradient tracking and multi-consensus. For the convergence analysis, we construct a Lyapunov function that simultaneously characterizes the function value, the gradient estimation error and the consensus error. Based on this measure, we provide a concise proof that DEAREST requires at most ${\mathcal O}(mn+\sqrt{mn}L\varepsilon^{-2})$ incremental first-order oracle (IFO) calls and ${\mathcal O}({L\varepsilon^{-2}}/{\sqrt{1-\lambda_2(W)}})$ communication rounds to find an $\varepsilon$-stationary point in expectation, where $L$ is the smoothness parameter and $\lambda_2(W)$ is the second-largest eigenvalue of the gossip matrix $W$. We verify that both the IFO complexity and the communication complexity match the lower bounds. To the best of our knowledge, DEAREST is the first optimal algorithm for decentralized nonconvex finite-sum optimization.
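
To make the role of the gossip matrix $W$ and of repeated communication concrete, the following is a minimal sketch of plain gossip averaging, in which each agent repeatedly mixes its value with its neighbours' values. It does not attempt to reproduce DEAREST's own multi-consensus step. The ring topology, the uniform $1/3$ weights, scalar local values, and all function names are assumptions made only for illustration.

```python
# Illustrative sketch only: plain repeated gossip averaging on a ring.
# The ring topology, the 1/3 weights, and all names here are assumptions;
# this is not the multi-consensus procedure used by DEAREST.

def ring_gossip_matrix(m):
    """Symmetric, doubly stochastic W: each agent averages itself and its two ring neighbours."""
    W = [[0.0] * m for _ in range(m)]
    for i in range(m):
        for j in (i - 1, i, i + 1):
            W[i][j % m] += 1.0 / 3.0
    return W

def gossip_round(W, x):
    """One communication round: agent i replaces x[i] by sum_j W[i][j] * x[j]."""
    m = len(x)
    return [sum(W[i][j] * x[j] for j in range(m)) for i in range(m)]

def multi_round_gossip(W, x, rounds):
    """Apply several gossip rounds in a row."""
    for _ in range(rounds):
        x = gossip_round(W, x)
    return x

def consensus_error(x):
    """Squared distance of the local values from their network-wide mean."""
    mean = sum(x) / len(x)
    return sum((xi - mean) ** 2 for xi in x)

# Usage: 8 agents, each starting from a different scalar value.
W = ring_gossip_matrix(8)
x0 = [float(i) for i in range(8)]
x20 = multi_round_gossip(W, x0, rounds=20)
print(consensus_error(x0), consensus_error(x20))
```

Because this $W$ is doubly stochastic, every round preserves the network-wide mean while shrinking the consensus error. The shrinkage is slower when $\lambda_2(W)$ is close to $1$, which is why the spectral gap $1-\lambda_2(W)$ appears in the communication complexity.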
