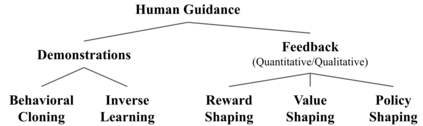

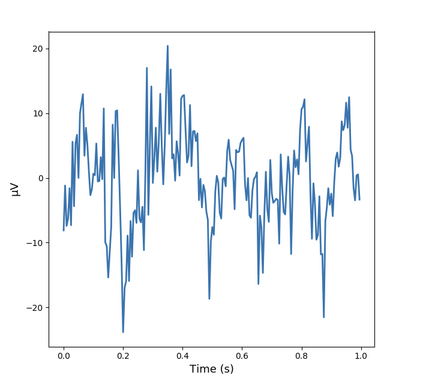

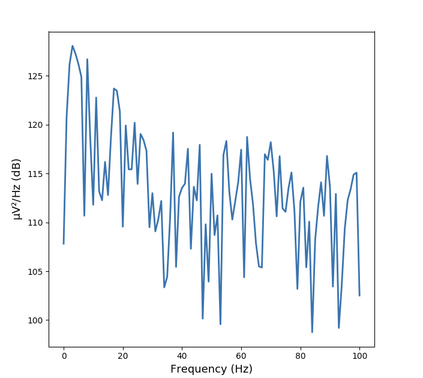

Reinforcement learning (RL) and brain-computer interfaces (BCI) are two fields that have been growing over the past decade. Until recently, these fields operated independently of one another. With the rising interest in human-in-the-loop (HITL) applications, RL algorithms have been adapted to account for human guidance, giving rise to the sub-field of interactive reinforcement learning (IRL). In parallel, BCI research has long been interested in extracting intrinsic feedback from neural activity during human-computer interactions. These two ideas have set RL and BCI on a collision course through the integration of BCI into the IRL framework, where intrinsic feedback can be used to help train an agent. This intersection has created a new, emerging paradigm denoted intrinsic IRL. To help facilitate deeper integration of BCI and IRL, we provide a tutorial and review of intrinsic IRL so far, with an emphasis on its parent field of feedback-driven IRL, along with discussions of validity, challenges, and open problems.
