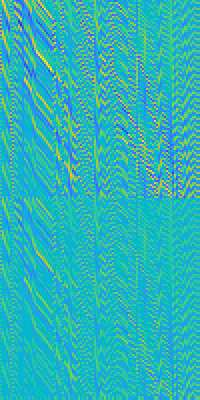

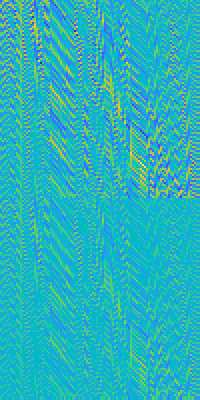

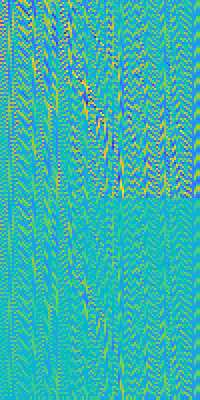

Parameterized state space models in the form of recurrent networks are often used in machine learning to learn from data streams exhibiting temporal dependencies. To break the black-box nature of such models, it is important to understand the dynamical features of the input-driving time series that are formed in the state space. We propose a framework for rigorous analysis of such state representations in vanishing memory state space models such as echo state networks (ESN). In particular, we view the state space as a temporal feature space and the readout mapping from the state space as a kernel machine operating in that feature space. We show that: (1) the usual ESN strategy of randomly generating the input-to-state coupling, as well as the state coupling, leads to shallow-memory time series representations, corresponding to a cross-correlation operator with fast exponentially decaying coefficients; (2) imposing symmetry on the dynamic coupling yields a constrained dynamic kernel that matches the input time series with straightforward exponentially decaying motifs or exponentially decaying motifs of the highest frequency; (3) a simple cycle high-dimensional reservoir topology specified through only two free parameters can implement deep-memory dynamic kernels with a rich variety of matching motifs. We quantify the richness of feature representations imposed by dynamic kernels and demonstrate that, for the dynamic kernel associated with the cycle reservoir topology, the kernel richness undergoes a phase transition close to the edge of stability.
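To make the cycle reservoir of point (3) concrete, the following is a minimal sketch, not the authors' implementation. It assumes a linear state update x(t) = W x(t-1) + w u(t), a unidirectional ring in which every connection has the same weight `r`, and input weights of common magnitude `v` whose signs are drawn pseudo-randomly with a fixed seed. The names `cycle_reservoir`, `run_linear_reservoir`, `r`, `v`, and the chosen values are all hypothetical and introduced only for illustration.

```python
import numpy as np

def cycle_reservoir(n, r):
    """Coupling matrix of a unidirectional ring: unit i feeds unit i+1 with weight r."""
    W = np.zeros((n, n))
    for i in range(n):
        W[(i + 1) % n, i] = r
    return W

def run_linear_reservoir(W, w_in, u):
    """Iterate the assumed linear update x(t) = W x(t-1) + w_in * u(t) from a zero state."""
    x = np.zeros(W.shape[0])
    states = []
    for u_t in u:
        x = W @ x + w_in * u_t
        states.append(x.copy())
    return np.array(states)

# Two free parameters (illustrative values): cycle weight r and input weight magnitude v.
n, r, v = 50, 0.95, 0.5
rng = np.random.default_rng(0)

# Assumption: input weight signs are pseudo-random; only the magnitude v is a free parameter.
w_in = v * rng.choice([-1.0, 1.0], size=n)
W = cycle_reservoir(n, r)

# Drive the reservoir with a random input stream and collect the states (temporal features).
u = rng.standard_normal(200)
states = run_linear_reservoir(W, w_in, u)

# The eigenvalues of W are r times the n-th roots of unity, so the spectral radius equals r.
spectral_radius = np.max(np.abs(np.linalg.eigvals(W)))

# Impulse response: the state norm after k steps is r**k * v * sqrt(n),
# so the trace of a past input fades more slowly as r approaches 1.
impulse = np.zeros(10)
impulse[0] = 1.0
impulse_norms = np.linalg.norm(run_linear_reservoir(W, w_in, impulse), axis=1)
```

In this sketch, choosing `r` close to 1 places the reservoir near the edge of stability, where the impulse response decays slowly. A linear readout trained on `states` would then play the role of the kernel machine operating in the temporal feature space.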
