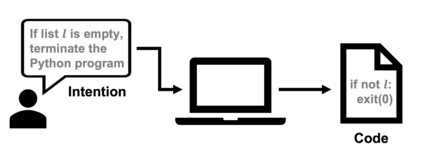

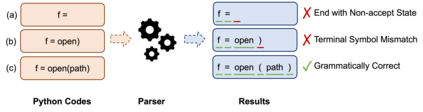

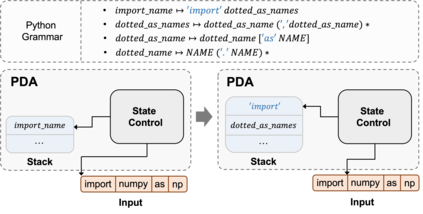

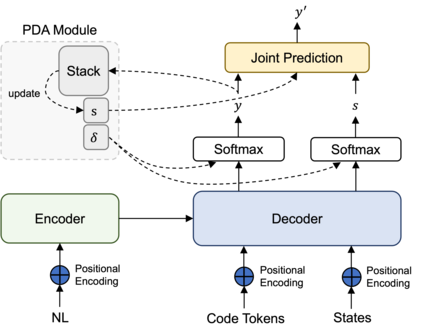

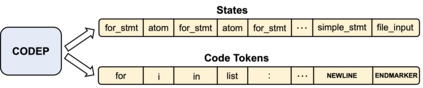

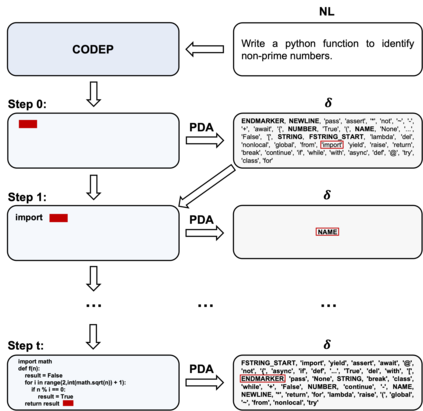

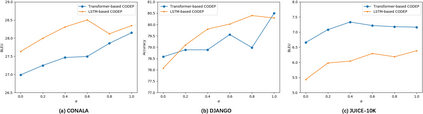

General-purpose code generation (GPCG) aims to automatically convert natural language descriptions into source code in a general-purpose language (GPL) such as Python. Intrinsically, code generation is a particular type of text generation that produces grammatically defined text, namely code. However, existing sequence-to-sequence (Seq2Seq) approaches neglect grammar rules when generating GPL code. In this paper, we make the first attempt to consider grammatical Seq2Seq (GSS) models for GPCG and propose CODEP, a GSS code generation framework equipped with a pushdown automaton (PDA) module. The PDA module (PDAM) contains a PDA and an algorithm that restricts the model's prediction at each generation step to a valid set, thereby ensuring the grammatical correctness of the generated code. During training, CODEP additionally incorporates a state representation and a state prediction task, which leverage PDA states to help CODEP comprehend the parsing process of the PDA. During inference, our method outputs code that satisfies the grammatical constraints by combining PDAM with the joint prediction of PDA states. Furthermore, PDAM can be applied directly to Seq2Seq models, i.e., without any need for training. To evaluate the effectiveness of the proposed method, we construct a PDA for Python, the most popular GPL, and conduct extensive experiments on four benchmark datasets. Experimental results demonstrate the superiority of CODEP over state-of-the-art approaches without pre-training, and PDAM also achieves significant improvements over pre-trained models.
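
The abstract describes PDAM only at a high level: at each generation step, the PDA's current configuration (state plus stack) determines which tokens keep the output grammatical, and the model's prediction is restricted to that set. The following is a minimal sketch of that idea under stated assumptions. It uses a hypothetical toy expression grammar, not the Python PDA the paper constructs, and greedy decoding stands in for whatever search the paper actually uses. `ToyPDA`, `constrained_greedy_decode`, `toy_scores`, and the token vocabulary are all illustrative names, and CODEP's state representation and state prediction task are not modeled.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Set

# Hypothetical toy vocabulary for the illustrative grammar
#   E -> T (("+" | "*") T)*
#   T -> NAME | NUM | "(" E ")"
VOCAB = ["NAME", "NUM", "+", "*", "(", ")", "<eos>"]


@dataclass
class ToyPDA:
    """Deterministic PDA for the toy grammar above (assumption: not a Python PDA)."""
    state: str = "operand"  # "operand": a T is expected; "operator": a T just ended
    stack: List[str] = field(default_factory=list)  # one "(" per unclosed paren

    def valid_next(self) -> Set[str]:
        """Tokens that keep the prefix completable to a sentence of the grammar."""
        if self.state == "accept":
            return set()
        if self.state == "operand":
            return {"NAME", "NUM", "("}
        valid = {"+", "*"}
        # ")" needs a matching "(" on the stack; "<eos>" needs an empty stack
        valid.add(")" if self.stack else "<eos>")
        return valid

    def step(self, tok: str) -> None:
        """Consume one token, updating state and stack; reject invalid tokens."""
        if tok not in self.valid_next():
            raise ValueError(f"token {tok!r} not allowed in state {self.state!r}")
        if tok == "(":
            self.stack.append("(")  # push; still expecting an operand
        elif tok == ")":
            self.stack.pop()  # pop the matching "("; the bracketed T is complete
            self.state = "operator"
        elif tok in ("NAME", "NUM"):
            self.state = "operator"
        elif tok in ("+", "*"):
            self.state = "operand"
        else:  # "<eos>"
            self.state = "accept"


ScoreFn = Callable[[List[str]], Dict[str, float]]


def constrained_greedy_decode(score_fn: ScoreFn, max_len: int = 20) -> List[str]:
    """Greedy decoding where each step's argmax is restricted to the PDA's valid set."""
    pda = ToyPDA()
    out: List[str] = []
    for _ in range(max_len):
        scores = score_fn(out)
        allowed = pda.valid_next()
        # Iterate in VOCAB order so ties are broken deterministically
        candidates = [t for t in VOCAB if t in allowed]
        tok = max(candidates, key=lambda t: scores.get(t, float("-inf")))
        pda.step(tok)
        if tok == "<eos>":
            break
        out.append(tok)
    return out  # may be an incomplete prefix if max_len is reached first


def toy_scores(prefix: List[str]) -> Dict[str, float]:
    """Hypothetical stand-in for a Seq2Seq model's next-token logits."""
    scores = {")": 5.0, "+": 4.0, "NAME": 3.0, "(": 1.0, "NUM": 0.5, "*": 0.0}
    scores["<eos>"] = float(len(prefix))  # the "model" prefers stopping as output grows
    return scores


print(constrained_greedy_decode(toy_scores))
# ['NAME', '+', 'NAME', '+', 'NAME']
```

With these toy scores, an unconstrained argmax would emit `)` as the very first token, which cannot begin any expression. With the mask, the decoder produces `NAME + NAME + NAME` and is only allowed to stop once the stack is empty. Because the mask is applied outside the scoring function, this kind of constraint needs no retraining of the model, which is in the spirit of the abstract's remark that PDAM can be applied directly to Seq2Seq models.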

翻译:通用代码生成 (GPCG) 旨在将自然语言描述自动转换成像 Python 这样的通用语言源代码。 从本质上讲,代码生成是一种特殊的文本生成类型,它产生语法定义的文本,即代码。然而,现有的序列到序列序列(Seq2Seq) 方法在生成 GPL 代码时忽略了语法规则。 在本文件中,我们第一次尝试考虑GPCG 的语法Seq2Seq(GSS) 模型,并提议 CODEP(GD),这是一个配置了推降自动马通模块(PDA) 的 GDE 代码生成框架。 PDA 模块包含一个PDA 和算法,帮助模型产生以下的预测,每个生成步骤都有效,以确保生成的代码的语法正确性。 在培训中,CODEPDA 额外整合了国家代表性和状态预测任务, 利用PDA 来帮助COD 广泛理解 PDA 的解算法进程。 推算, 我们的方法输出到 SAD 4 数据预测算。