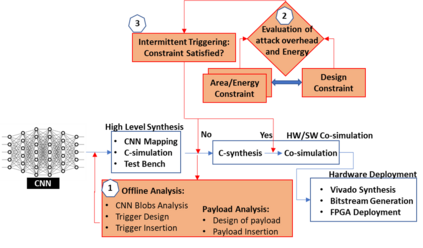

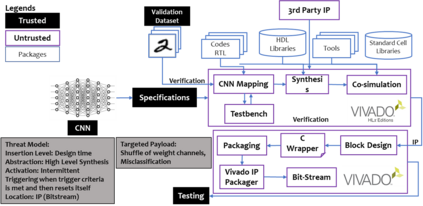

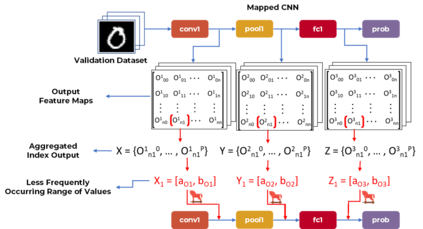

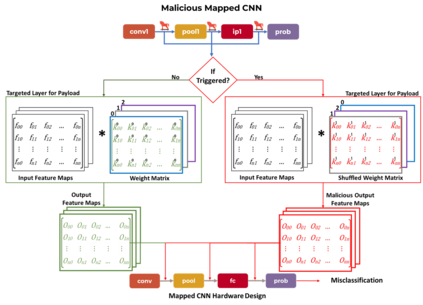

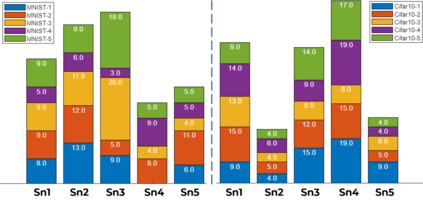

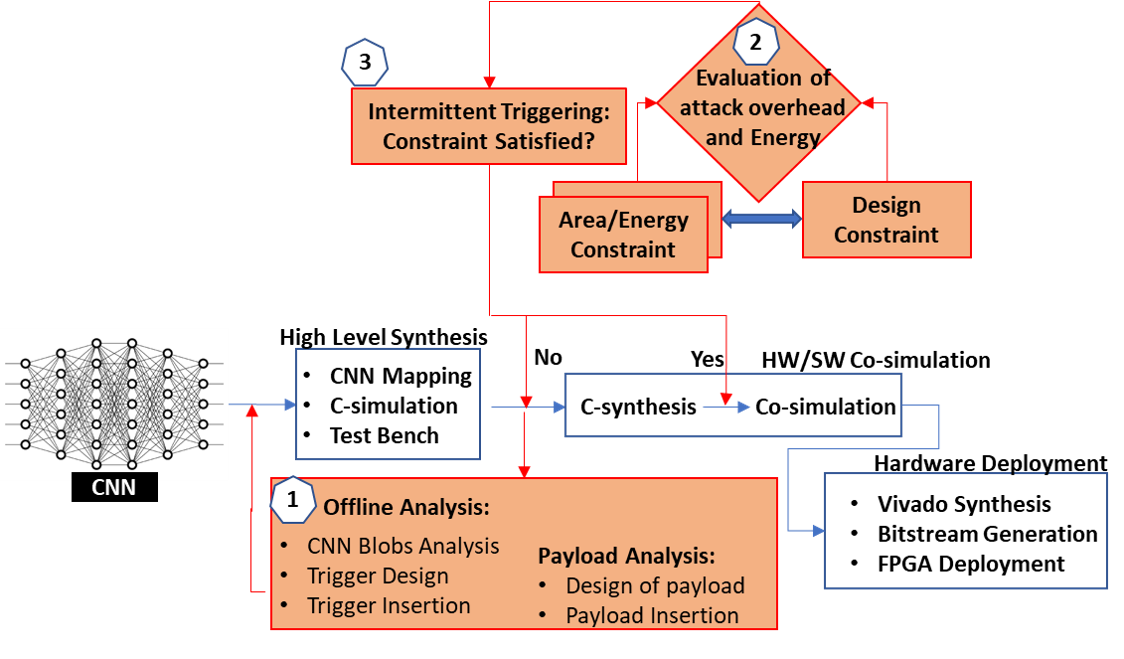

Securing the inference-phase deployment of convolutional neural networks (CNNs) on resource-constrained embedded systems (e.g., low-end FPGAs) is a growing research area. Under secure practices, third-party FPGA designers can be given no knowledge of the initial and final classification layers. In this work, we demonstrate that a hardware intrinsic attack (HIA) on such a "secure" design is still possible. The proposed HIA is inserted inside the mathematical operations of individual CNN layers and propagates erroneous operations through all subsequent layers, leading to misclassification. The attack is non-periodic and completely random, which makes it difficult to detect. Five attack scenarios, defined with respect to the individual CNN layers, are designed and evaluated in terms of resource overhead and triggering rate relative to the original implementation. Our results for two CNN architectures show that, in all attack scenarios, the additional latency is negligible (<0.61%) and the increase in DSP, LUT, and FF usage is less than 2.36%. Three attack scenarios require no additional BRAM resources; in the other two, BRAM usage increases, which is offset by a corresponding decrease in FF and LUT usage. To the best of the authors' knowledge, this work is the first to address a hardware intrinsic CNN attack in which the attacker does not have knowledge of the full CNN.
