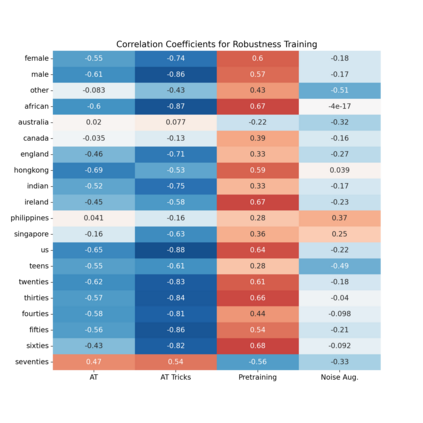

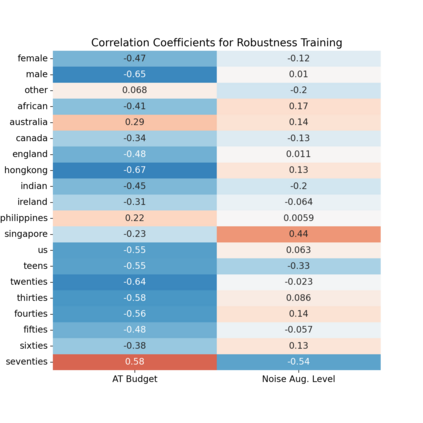

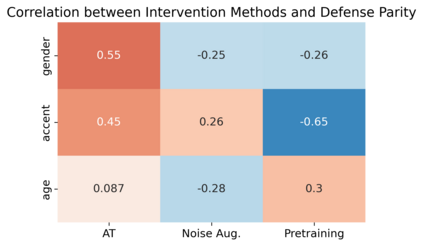

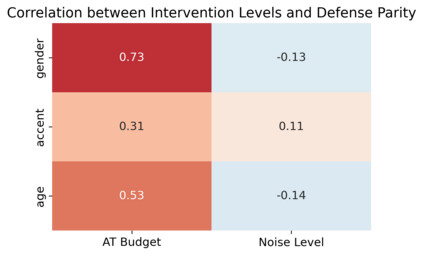

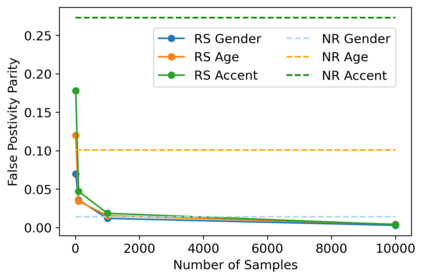

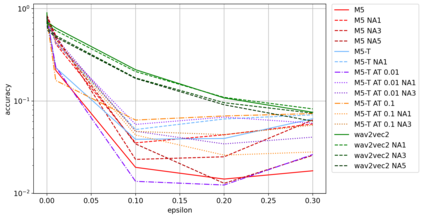

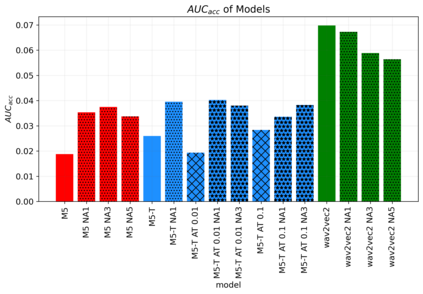

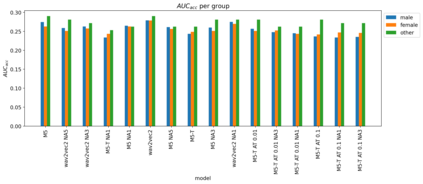

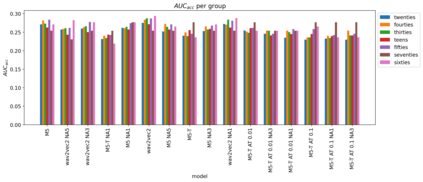

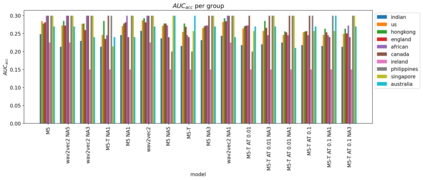

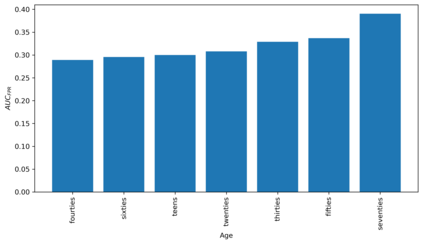

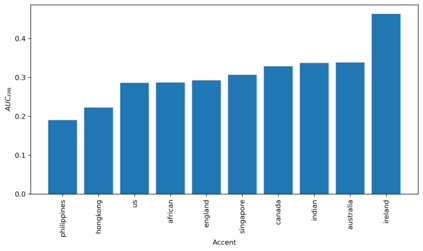

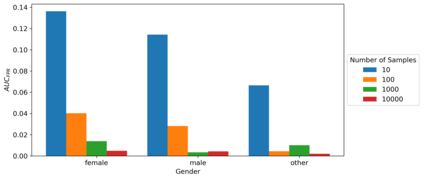

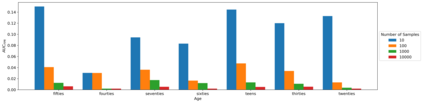

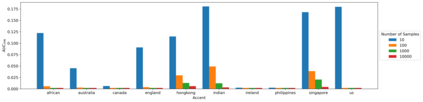

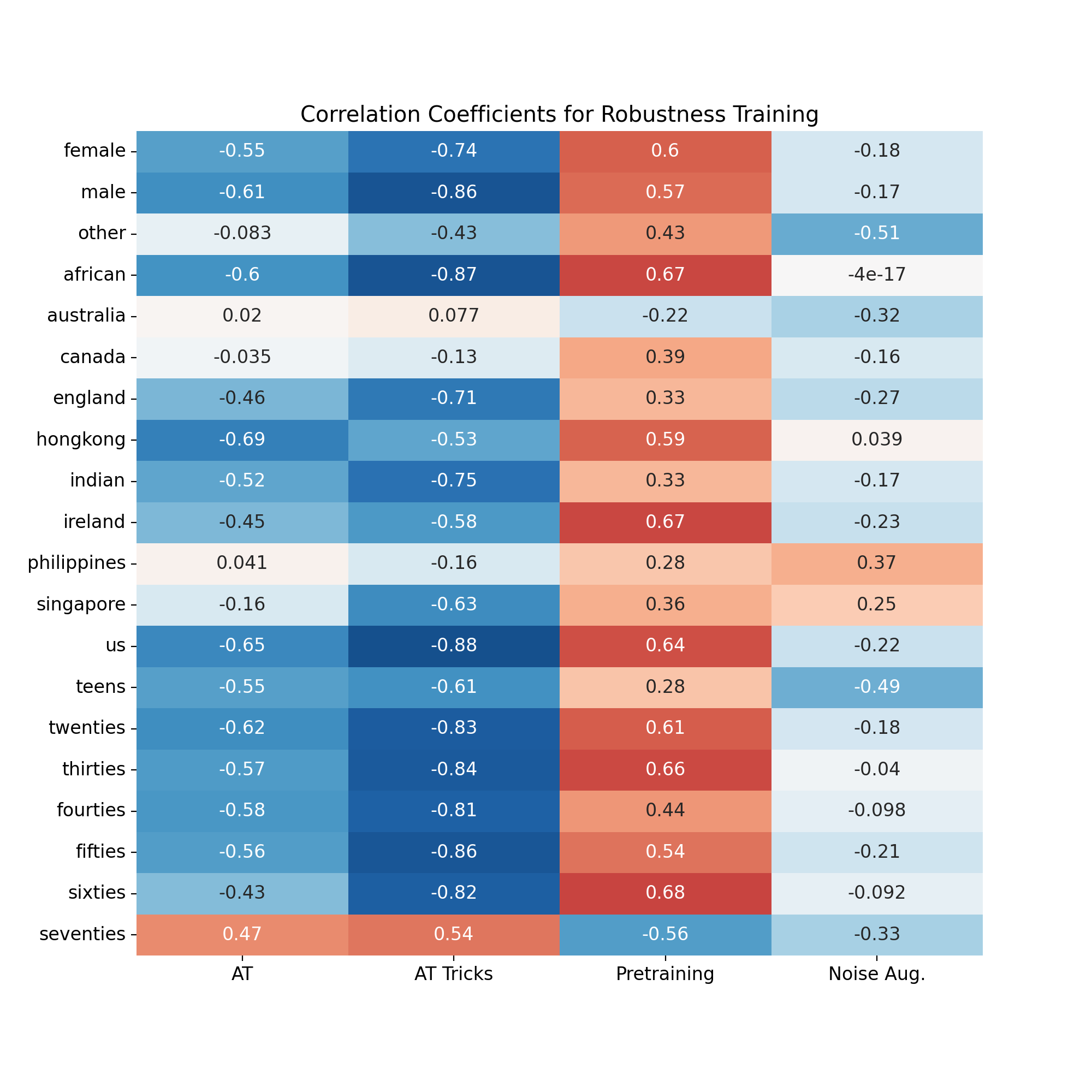

The machine learning security community has developed myriad defenses against evasion attacks over the past decade. An understudied question in that community is: whom do these defenses defend? In this work, we consider some common approaches to defending learned systems and ask whether those approaches introduce unexpected performance inequities when used by different sub-populations. We outline simple parity metrics and a framework for analysis that can begin to answer this question through empirical results on the fairness implications of machine learning security methods. Many proposed methods can cause direct harm, which we describe as biased vulnerability and biased rejection. Our framework and metrics can be applied to robustly trained models, preprocessing-based methods, and rejection methods to capture behavior over security budgets. We identify a realistic dataset with a reasonable computational cost suitable for measuring the equality of defenses. Through a case study in speech command recognition, we show that such defenses do not offer equal protection for social subgroups, demonstrate how to perform such analyses for robustness training, and present a comparison of fairness between two rejection-based defenses: randomized smoothing and neural rejection. We offer further analysis of factors that correlate with equitable defenses to stimulate future investigation of how to build such defenses. To the best of our knowledge, this is the first work that examines the fairness disparity in the accuracy-robustness trade-off in speech data and addresses fairness evaluation for rejection-based defenses.
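To make the idea of a parity metric measured over security budgets concrete, the following is a minimal sketch and not the metric defined in the paper. It assumes that, for each attack budget, we already have a per-example flag saying whether the defended model classified that example correctly, plus a subgroup label per example. The function names (`group_accuracy`, `parity_gap`, `parity_gap_curve`) and the choice of "maximum minus minimum subgroup accuracy" as the gap are illustrative assumptions.

```python
from collections import defaultdict


def group_accuracy(correct, groups):
    """Return {group: accuracy} from per-example correctness flags."""
    totals = defaultdict(int)
    hits = defaultdict(int)
    for is_correct, group in zip(correct, groups):
        totals[group] += 1
        hits[group] += int(bool(is_correct))
    return {g: hits[g] / totals[g] for g in totals}


def parity_gap(correct, groups):
    """Assumed parity measure: best subgroup accuracy minus worst."""
    acc = group_accuracy(correct, groups)
    return max(acc.values()) - min(acc.values())


def parity_gap_curve(correct_by_budget, groups):
    """Map each budget to the parity gap observed at that budget.

    correct_by_budget: {budget: [flag per example]}, where each list is
    aligned with `groups`. The budgets are hypothetical perturbation sizes.
    """
    return {
        budget: parity_gap(flags, groups)
        for budget, flags in sorted(correct_by_budget.items())
    }


# Toy data (fabricated purely for illustration, not results from the paper).
toy_groups = ["a", "a", "b", "b"]
toy_correct = {
    0.0: [1, 1, 1, 1],  # no attack: both subgroups fully correct
    0.1: [1, 1, 1, 0],  # small budget: subgroup "b" degrades first
    0.2: [1, 0, 0, 0],  # larger budget: both degrade, unevenly
}
toy_curve = parity_gap_curve(toy_correct, toy_groups)
```

A gap that grows with the budget would be one sign of what the abstract calls biased vulnerability. For rejection-based defenses, the same functions could be applied to per-example "was rejected" flags instead of correctness flags to compare rejection rates across subgroups.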
