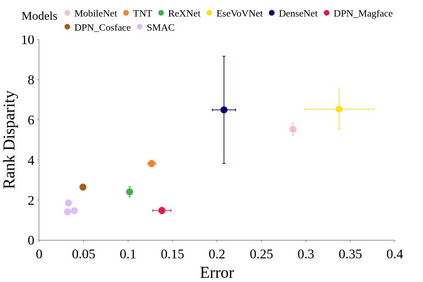

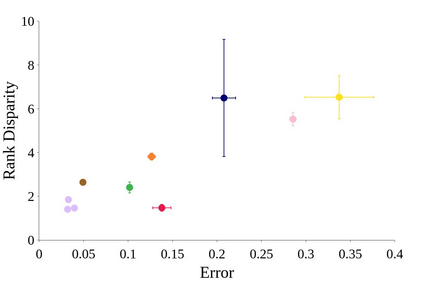

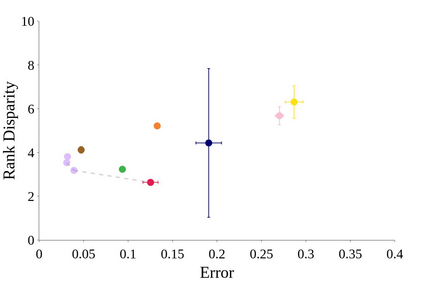

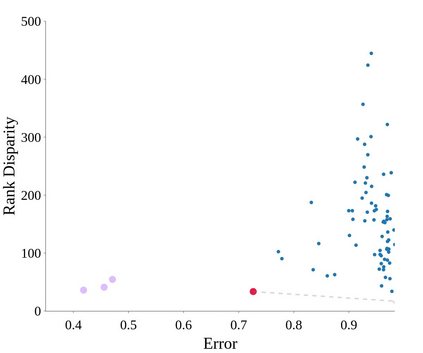

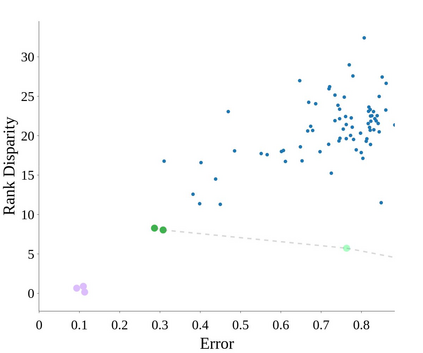

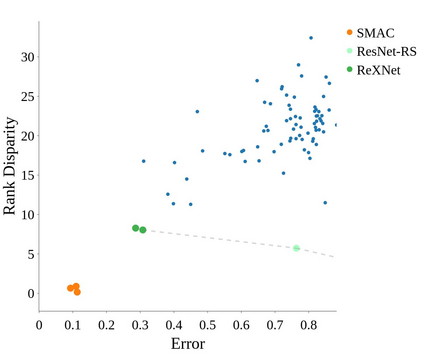

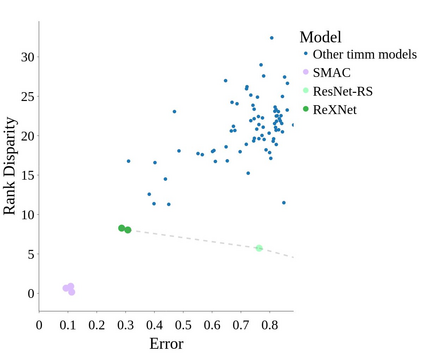

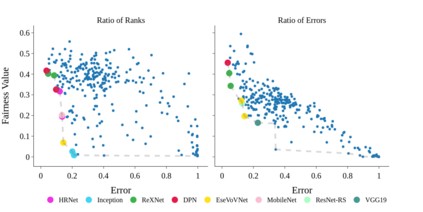

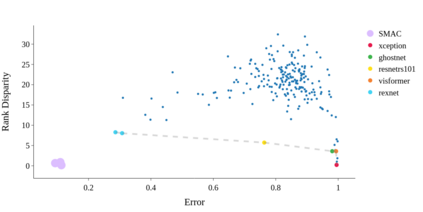

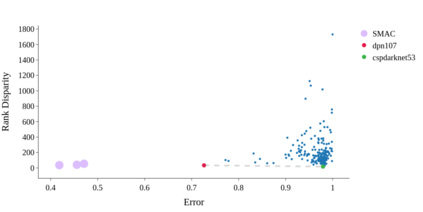

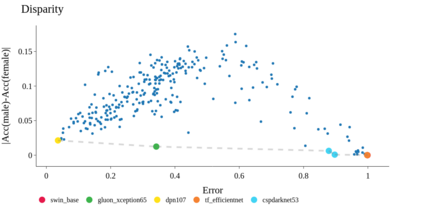

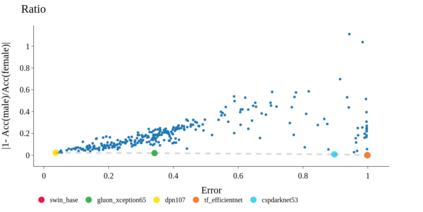

Face recognition systems are deployed across the world by government agencies and contractors for sensitive and impactful tasks, such as surveillance and database matching. Despite their widespread use, these systems are known to exhibit bias across a range of sociodemographic dimensions, such as gender and race. Nonetheless, an array of works proposing pre-processing, training, and post-processing methods have failed to close these gaps. Here, we take a very different approach to this problem, identifying that both architectures and hyperparameters of neural networks are instrumental in reducing bias. We first run a large-scale analysis of the impact of architectures and training hyperparameters on several common fairness metrics and show that the implicit convention of choosing high-accuracy architectures may be suboptimal for fairness. Motivated by our findings, we run the first neural architecture search for fairness, jointly with a search for hyperparameters. We output a suite of models which Pareto-dominate all other competitive architectures in terms of accuracy and fairness. Furthermore, we show that these models transfer well to other face recognition datasets with similar and distinct protected attributes. We release our code and raw result files so that researchers and practitioners can replace our fairness metrics with a bias measure of their choice.
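The claim that a suite of models Pareto-dominates other architectures in accuracy and fairness can be made concrete with a small sketch. The code below is illustrative only and is not the authors' released code: it assumes each model is summarized by two numbers to be minimized, a recognition error and a between-group disparity. The `Candidate` type, the architecture names, and all numeric values are hypothetical.

```python
from typing import NamedTuple


class Candidate(NamedTuple):
    name: str          # hypothetical model label
    error: float       # recognition error, lower is better
    disparity: float   # fairness gap between groups, lower is better


def dominates(a: Candidate, b: Candidate) -> bool:
    """True if a is no worse than b on both objectives and strictly better on one."""
    no_worse = a.error <= b.error and a.disparity <= b.disparity
    strictly_better = a.error < b.error or a.disparity < b.disparity
    return no_worse and strictly_better


def pareto_front(candidates: list[Candidate]) -> list[Candidate]:
    """Keep every candidate that no other candidate dominates."""
    return [
        c for c in candidates
        if not any(dominates(other, c) for other in candidates)
    ]


# Made-up numbers for illustration only; these are not results from the paper.
models = [
    Candidate("arch_a", error=0.05, disparity=0.08),
    Candidate("arch_b", error=0.04, disparity=0.03),
    Candidate("arch_c", error=0.06, disparity=0.02),
    Candidate("arch_d", error=0.07, disparity=0.05),
]

front = pareto_front(models)
print([c.name for c in front])  # ['arch_b', 'arch_c']
```

In this toy data, `arch_b` beats `arch_a` and `arch_d` on both objectives, while `arch_b` and `arch_c` trade error against disparity, so neither dominates the other. Swapping in a different bias measure only changes how the `disparity` field is computed; the dominance check stays the same.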
