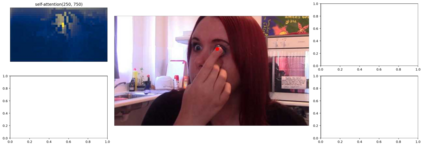

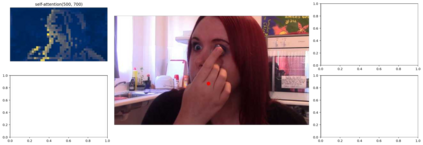

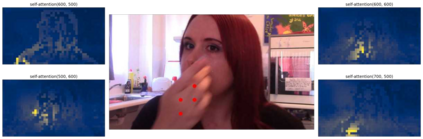

Video grounding aims to localize the temporal segment that corresponds to a sentence query in an untrimmed video. Almost all existing video grounding methods fall into one of two frameworks: 1) Top-down models, which predefine a set of segment candidates and then perform segment classification and regression. 2) Bottom-up models, which directly predict frame-wise probabilities of the referential segment boundaries. However, none of these methods is end-to-end, i.e., they all rely on time-consuming post-processing steps to refine their predictions. To this end, we reformulate video grounding as a set prediction task and propose a novel end-to-end multi-modal Transformer model, dubbed **GTR**. Specifically, GTR has two encoders, one for video and one for language, and a cross-modal decoder for grounding prediction. To facilitate end-to-end training, we use a Cubic Embedding layer to transform the raw videos into a set of visual tokens. To better fuse the two modalities in the decoder, we design a new Multi-head Cross-Modal Attention. The whole GTR is optimized via a Many-to-One matching loss. Furthermore, we conduct comprehensive studies to investigate different model design choices. Extensive results on three benchmarks validate the superiority of GTR. All three typical GTR variants achieve record-breaking performance on all datasets and metrics, with several times faster inference.
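To make "transform the raw videos into a set of visual tokens" concrete, here is a minimal sketch of cube-based tokenization. It assumes non-overlapping `kt x kh x kw` cubes followed by one shared linear projection, which is equivalent to a 3D convolution whose stride equals its kernel size. The abstract does not specify GTR's actual kernel, stride, or overlap settings, so this is an illustration under those assumptions, not the paper's layer. The names `cubic_tokens` and `linear_project` and the nested-list `video[t][y][x][c]` layout are hypothetical.

```python
from typing import List

# Hypothetical layout: a video is a nested list indexed as video[t][y][x][c].
Video = List[List[List[List[float]]]]


def cubic_tokens(video: Video, kt: int, kh: int, kw: int) -> List[List[float]]:
    """Split a (T, H, W, C) video into non-overlapping kt x kh x kw cubes and
    flatten each cube into one token of length kt * kh * kw * C."""
    T, H, W = len(video), len(video[0]), len(video[0][0])
    if T % kt or H % kh or W % kw:
        raise ValueError("video dimensions must be divisible by the cube size")
    tokens = []
    # Cubes are visited in time-major order, then rows, then columns.
    for t0 in range(0, T, kt):
        for y0 in range(0, H, kh):
            for x0 in range(0, W, kw):
                cube: List[float] = []
                for t in range(t0, t0 + kt):
                    for y in range(y0, y0 + kh):
                        for x in range(x0, x0 + kw):
                            cube.extend(video[t][y][x])  # append all C channels
                tokens.append(cube)
    return tokens


def linear_project(tokens: List[List[float]],
                   weight: List[List[float]],
                   bias: List[float]) -> List[List[float]]:
    """Map each flattened cube to d_model dims: out = token @ weight + bias,
    where weight has shape (cube_dim, d_model) and is shared by all cubes."""
    d_model = len(bias)
    return [
        [sum(v * weight[i][j] for i, v in enumerate(tok)) + bias[j]
         for j in range(d_model)]
        for tok in tokens
    ]


if __name__ == "__main__":
    # A toy 4-frame, 4x4, 3-channel video cut into 2x2x2 cubes.
    video = [[[[float(t * 1000 + y * 100 + x * 10 + c) for c in range(3)]
               for x in range(4)] for y in range(4)] for t in range(4)]
    toks = cubic_tokens(video, 2, 2, 2)
    print(len(toks), len(toks[0]))  # prints: 8 24
```

Because the projection weight is shared across cubes, the token count depends only on the video size and cube size, while every token lands in the same `d_model`-dimensional space expected by the video encoder.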

翻译:视频地面定位旨在将与未剪辑的视频中的句号查询相对应的时间段段本地化。 几乎所有现有的视频地面段方法都分为两个框架:(1) 上下模式: 它预先确定一组段候选人,然后进行分级分类和回归。 (2) 下调模式: 它直接预测宽段边界框架的概率。 但是, 所有这些方法都不是端端对端,\ie, 它们总是依赖一些耗时的后处理步骤来完善预测。 为此, 将视频地面部分重新配置为一套设定的预测任务, 并提出一个新的端对端多式变异器模型, 称为\ textbf{GTR}。 具体地说, GTR有两个视频和语言编码编码编码编码的编码器, 和一个跨模式解调器用于基础预测。 然而, 所有这些方法都不是端对端培训, 我们总是使用一个 Cubic 内嵌入层层层将原始视频转换成一套更快的视觉标记。 为了更好地将这两种模式结合在解析器中, 我们设计了一个新的多式TR- 跨式的跨式数据库, 我们设计了一个新的多式数据库到不同的版本数据库, 将一个G- 格式的模型到所有的模拟的模拟模拟模拟的模拟的模拟的模拟模拟的模拟的模拟的模拟的模拟的模拟的模拟的模拟的模拟的模拟的模拟的模拟的模拟的模拟的模拟的模拟的模拟的模拟的模拟的模拟的模拟的模拟的模拟的模拟的模拟的模拟的模拟的模拟的模拟的模拟的模拟的模拟的模拟的模拟的模拟的模拟的模拟的模拟的模拟的模拟的模拟的模拟的模拟的模拟的模拟的模拟的模拟的模拟的模拟的模拟的模拟的模拟的模拟的模拟的模拟的模拟的模拟的模拟的模拟的模拟的模拟结果。