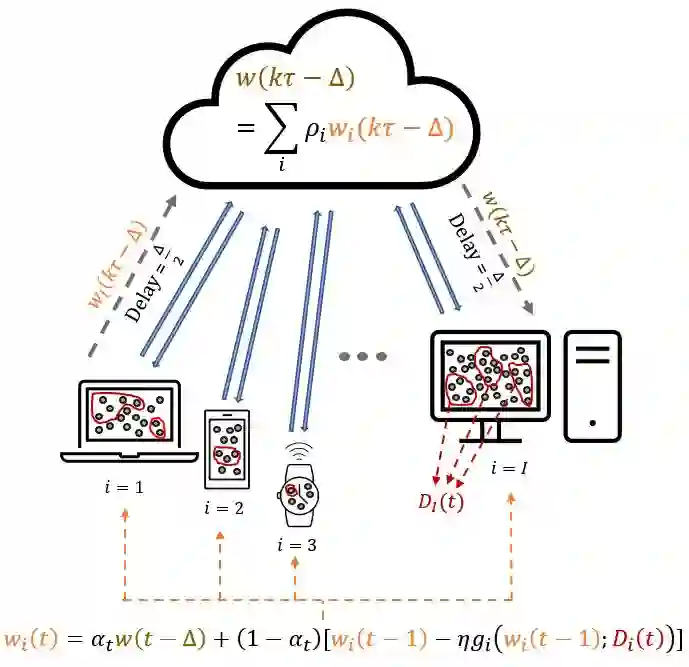

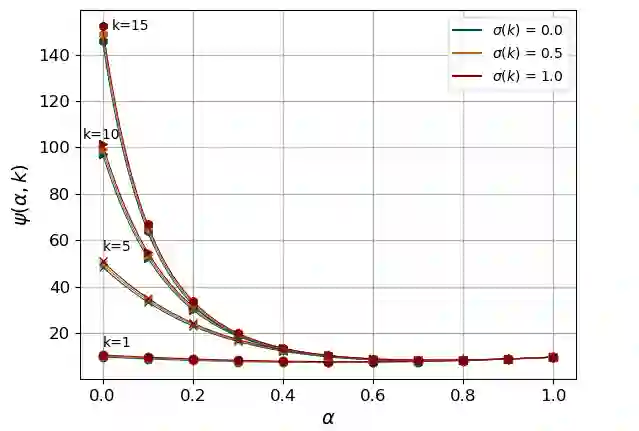

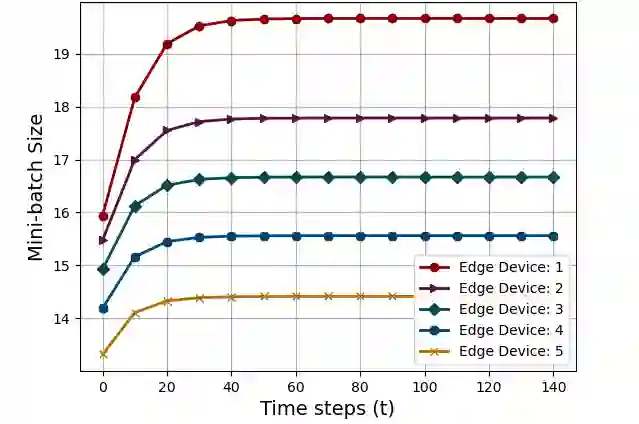

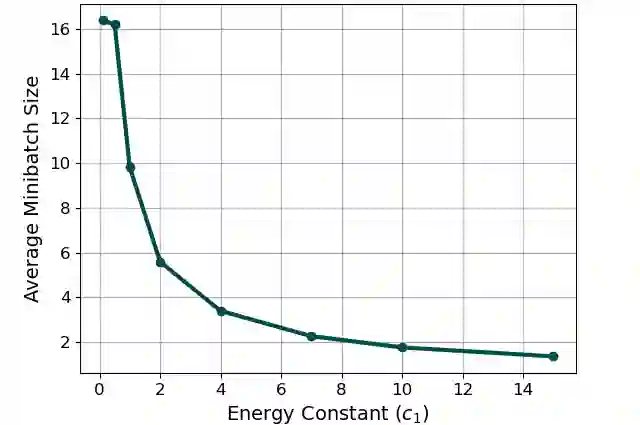

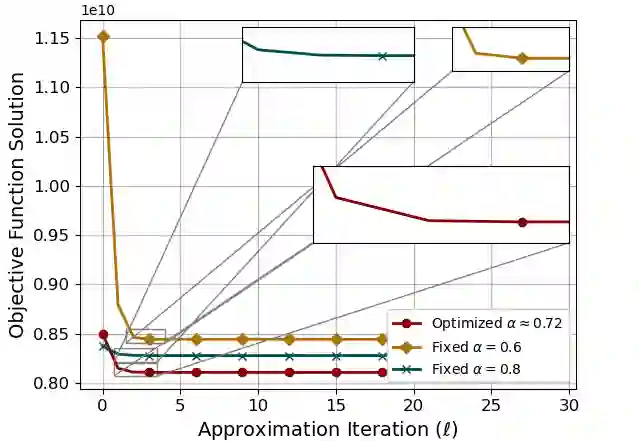

Federated learning (FL) has emerged as a popular technique for distributing machine learning across wireless edge devices. We examine FL under two salient properties of contemporary networks: device-server communication delays and device computation heterogeneity. Our proposed StoFedDelAv algorithm incorporates a local-global model combiner into the FL synchronization step. We theoretically characterize the convergence behavior of StoFedDelAv and obtain the optimal combiner weights, which account for the global model delay and the expected local gradient error at each device. We then formulate a network-aware optimization problem that tunes the devices' minibatch sizes to jointly minimize energy consumption and machine learning training loss, and we solve this non-convex problem through a series of convex approximations. Our simulations show that StoFedDelAv outperforms the current state of the art in FL, as evidenced by improvements in the optimization objective.
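The abstract does not give the combiner's formula. The Python below is a minimal sketch of a synchronization step that assumes the local-global combiner is a convex combination of a device's local model and the delayed global model it receives. The names `combine` and `heuristic_alpha` are hypothetical, and so is the weighting rule. That rule only mirrors the stated intuition that the weights depend on the global model delay and the expected local gradient error. It is not the optimal weighting derived in the paper.

```python
from typing import List


def combine(local_w: List[float], delayed_global_w: List[float], alpha: float) -> List[float]:
    """Mix a device's local model with a (possibly stale) global model.

    Assumption: the local-global combiner is a convex combination, where
    alpha in [0, 1] is the weight placed on the delayed global model.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    if len(local_w) != len(delayed_global_w):
        raise ValueError("parameter vectors must have the same length")
    return [alpha * g + (1.0 - alpha) * w for w, g in zip(local_w, delayed_global_w)]


def heuristic_alpha(delay: int, local_grad_err: float, staleness_cost: float = 1.0) -> float:
    """Hypothetical weighting rule, NOT the paper's optimal weights.

    - A staler global model (larger delay) receives less weight.
    - A noisier local gradient (larger expected error) shifts weight
      toward the global model.
    """
    if delay < 0 or local_grad_err < 0.0 or staleness_cost < 0.0:
        raise ValueError("inputs must be non-negative")
    penalty = staleness_cost * delay
    total = local_grad_err + penalty
    if total == 0.0:
        # Neither model is favored, so split the weight evenly.
        return 0.5
    return local_grad_err / total


if __name__ == "__main__":
    alpha = heuristic_alpha(delay=2, local_grad_err=1.0)
    print(alpha, combine([0.0, 3.0], [3.0, 0.0], alpha))
```

With a delay of 2 rounds and a local gradient error of 1.0, the hypothetical rule gives alpha = 1/3. Combining the local model [0.0, 3.0] with the stale global model [3.0, 0.0] then yields [1.0, 2.0]. A convex combination keeps the synchronized model on the segment between its two inputs. Weights obtained from a convergence analysis like the one described above would take the place of `heuristic_alpha`.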

翻译:联邦学习(FL) 已经成为在无线边缘设备之间分配机器学习的流行技术。 我们根据当代网络的两个突出特性( 设备- 服务器通信延迟和装置计算异质性)来检查FL。 我们提议的StoFedDelAv 算法将一个本地- 全球模型组合器纳入FL同步步骤。 我们理论上将StoFedDelAv 的趋同行为定性为最佳组合器重量,它考虑到全球模型延迟和每个设备预期的本地梯度错误。 然后我们设计一个网络敏化优化问题, 以调和设备的小型尺寸, 以联合尽量减少能源消耗和机器学习培训损失, 并通过一系列配置近似来解决非电离层问题。 我们的模拟显示StoFedDelAv 超越了当前FL 的艺术, 体现在优化目标的改进。