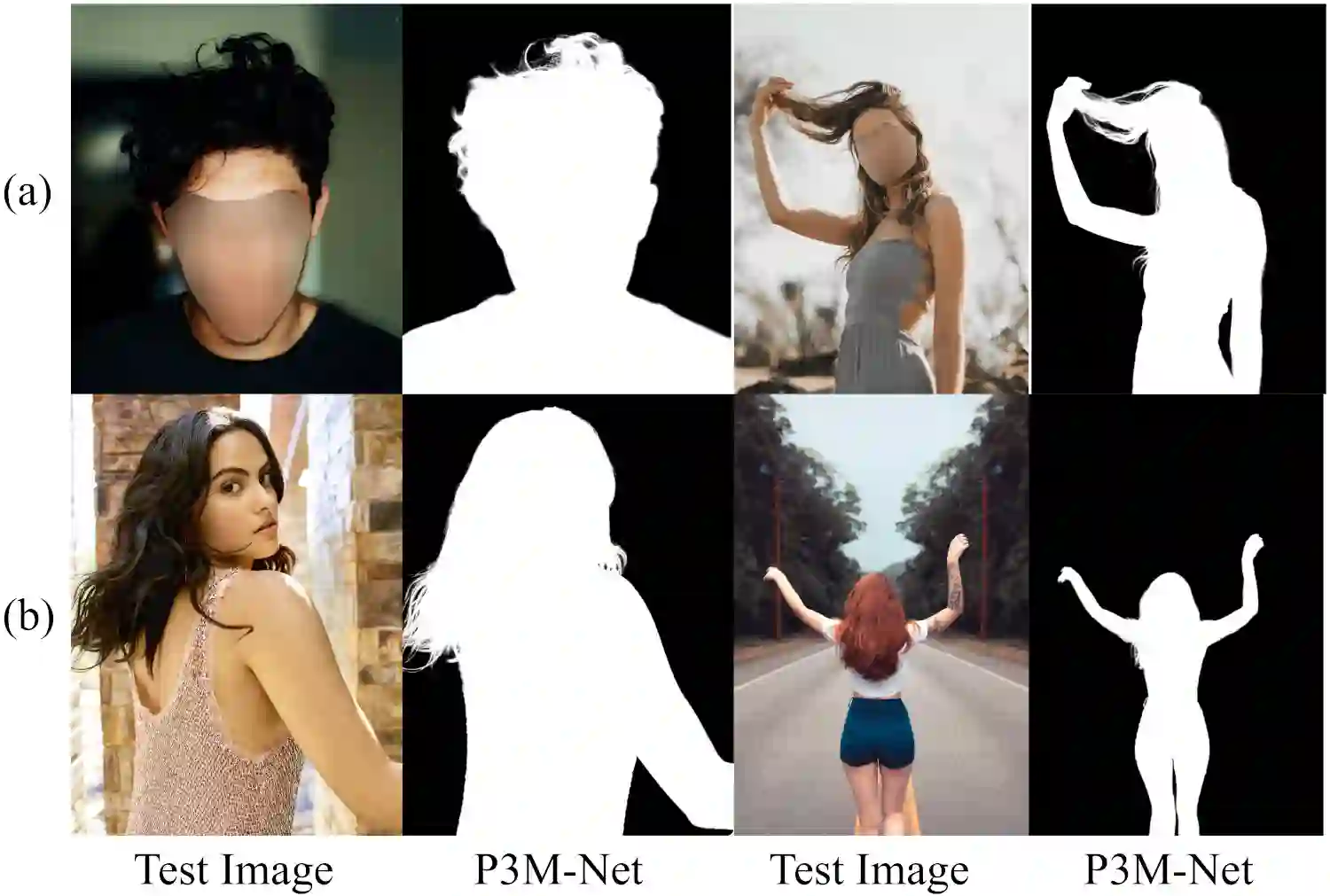

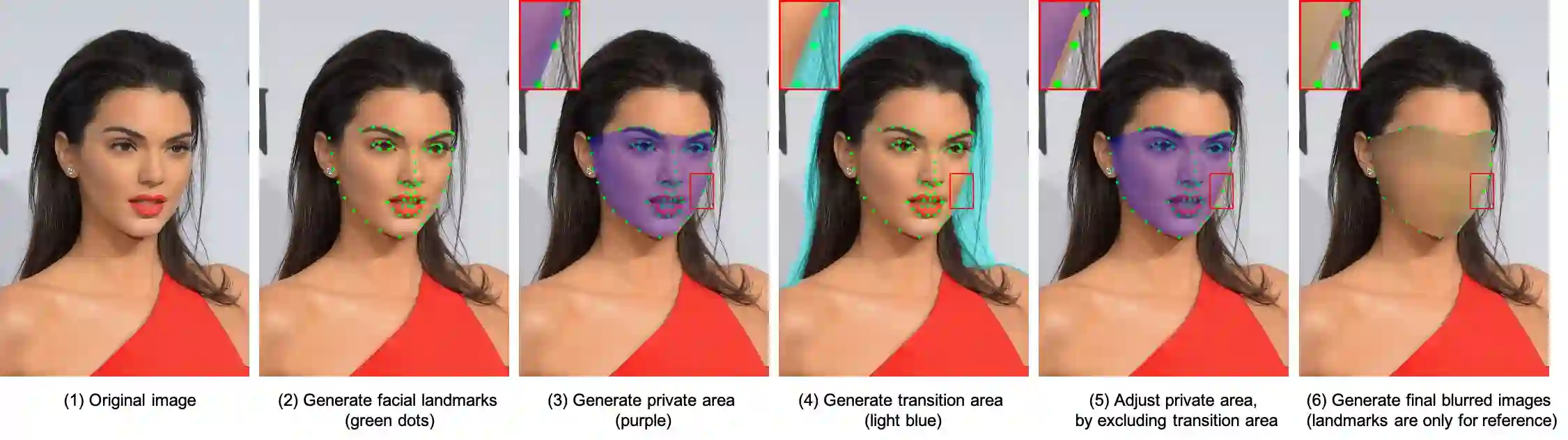

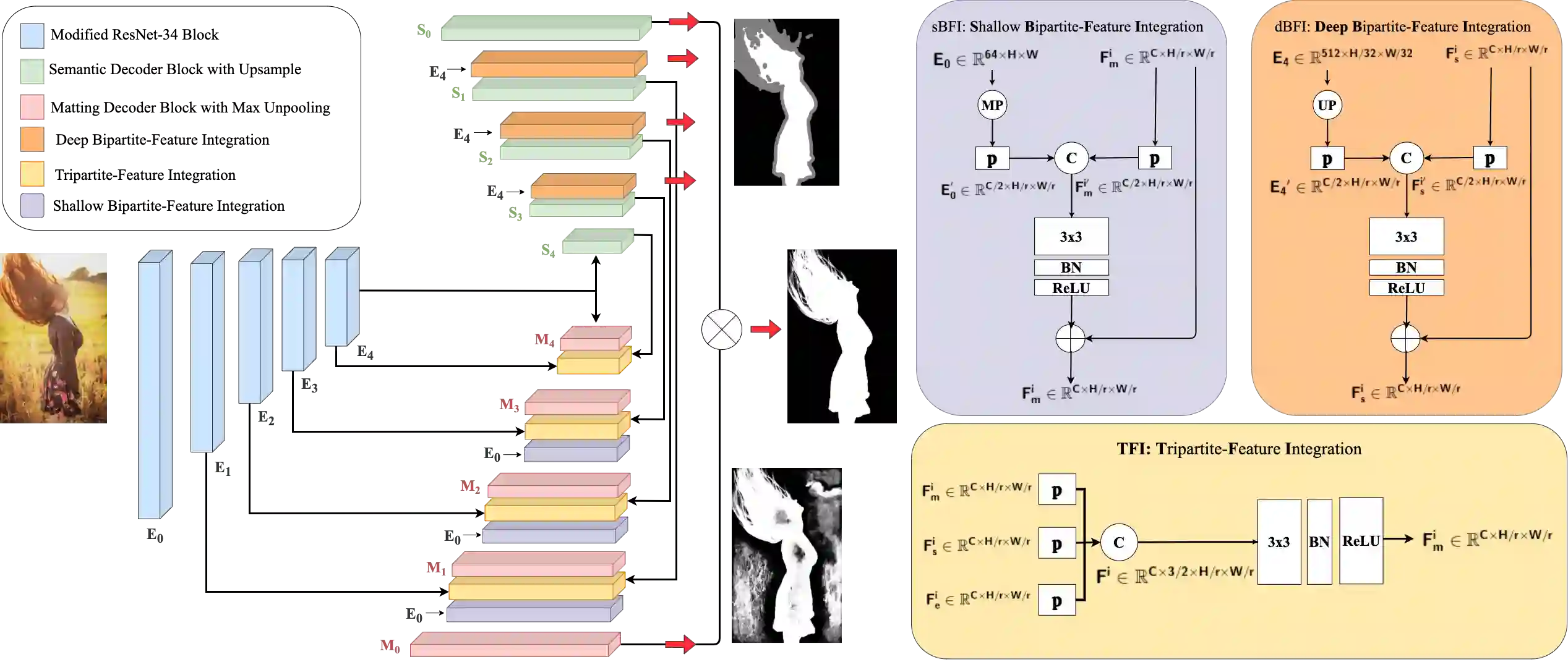

Concern is growing about the privacy issues raised by the use of personally identifiable information in machine learning. However, previous portrait matting methods were all based on identifiable portrait images. To fill this gap, we present P3M-10k, the first large-scale anonymized benchmark for Privacy-Preserving Portrait Matting. P3M-10k consists of 10,000 high-resolution face-blurred portrait images along with high-quality alpha mattes. We systematically evaluate both trimap-free and trimap-based matting methods on P3M-10k and find that existing matting methods show different generalization capabilities under the Privacy-Preserving Training (PPT) setting, i.e., "training on face-blurred images and testing on arbitrary images". To devise a better trimap-free portrait matting model, we propose P3M-Net, which uses a unified framework for both semantic perception and detail matting and specifically emphasizes the interaction between these two tasks and the encoder to facilitate the matting process. Extensive experiments on P3M-10k demonstrate that P3M-Net outperforms state-of-the-art methods in terms of both objective metrics and subjective visual quality. It also shows good generalization capacity under the PPT setting, confirming the value of P3M-10k for facilitating future research and enabling potential real-world applications. The source code and dataset will be made publicly available.
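To make the notion of a face-blurred training image concrete, the following is a minimal sketch of anonymizing a portrait by blurring a face region while leaving the rest of the image, and therefore the alpha matte labels, untouched. The abstract does not describe how P3M-10k was actually blurred, so everything here is an assumption for illustration: the function names `box_blur` and `blur_face`, the mean-filter blur, the blur radius, and the hand-specified face box (a real pipeline would obtain it from a face or landmark detector).

```python
import numpy as np


def box_blur(patch: np.ndarray, radius: int) -> np.ndarray:
    """Mean-filter an H x W x C patch with a (2*radius+1)^2 window.

    The patch is edge-padded so the output has the same shape as the input.
    """
    k = 2 * radius + 1
    padded = np.pad(
        patch.astype(np.float64),
        ((radius, radius), (radius, radius), (0, 0)),
        mode="edge",
    )
    out = np.zeros(patch.shape, dtype=np.float64)
    height, width = patch.shape[:2]
    # Sum the k*k shifted copies of the padded patch, then normalize.
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + height, dx:dx + width]
    return out / (k * k)


def blur_face(image: np.ndarray, box: tuple, radius: int = 8) -> np.ndarray:
    """Return a copy of `image` with the region `box` blurred.

    `box` is a hypothetical (top, left, bottom, right) face bounding box.
    """
    top, left, bottom, right = box
    result = image.astype(np.float64).copy()
    result[top:bottom, left:right] = box_blur(result[top:bottom, left:right], radius)
    return result


# Synthetic stand-in for a portrait: random noise, so blurring is easy to detect.
rng = np.random.default_rng(0)
portrait = rng.uniform(0.0, 255.0, size=(64, 64, 3))
face_box = (10, 20, 30, 44)  # assumed face location, for illustration only

blurred = blur_face(portrait, face_box)
```

Under the PPT setting described above, a model would be trained on images like `blurred` (paired with the unchanged alpha mattes) and then evaluated on arbitrary images, whether blurred or not.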
