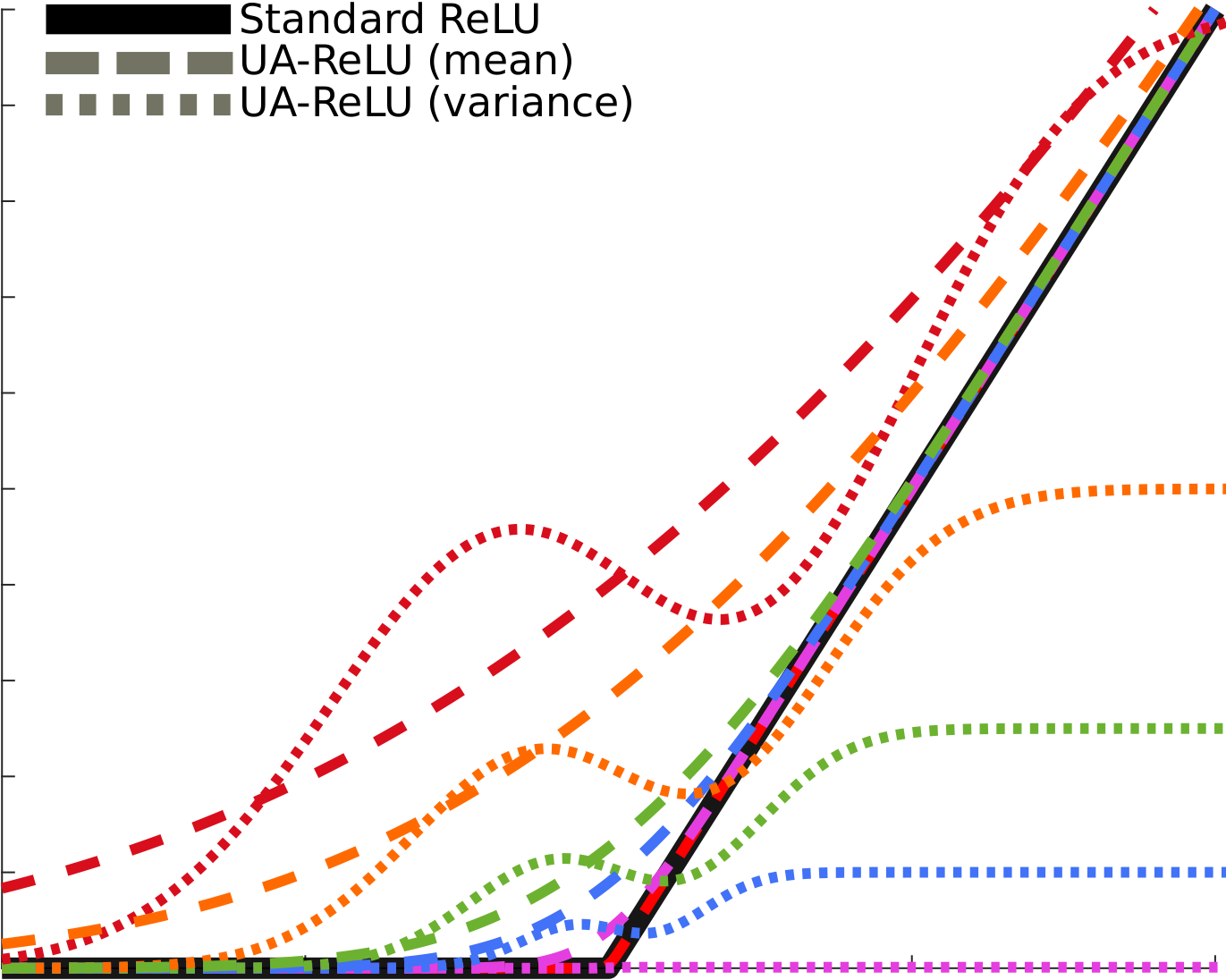

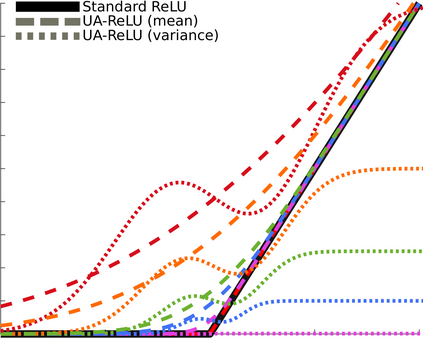

In this technical report, we study how uncertainty, expressed as the variances of given univariate normal random variables, propagates through the typical building blocks of a Convolutional Neural Network (CNN). These include layers that perform linear operations, such as 2D convolution, fully connected, and average pooling layers, as well as layers that act non-linearly on their input, such as the Rectified Linear Unit (ReLU). Finally, we discuss the sigmoid function, for which we give approximations of the first- and second-order moments, and the binary cross-entropy loss function, whose expected value we approximate under normal random inputs.
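To make the setting concrete, the following is a minimal sketch of moment propagation through one linear unit followed by a ReLU. It is not taken from the report: it assumes the inputs are mutually independent normal random variables and uses the standard closed-form moments of a rectified normal variable. The function names (`linear_moments`, `relu_moments`) are hypothetical and chosen only for illustration.

```python
import math


def normal_pdf(z):
    """Standard normal density."""
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)


def normal_cdf(z):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def linear_moments(weights, bias, means, variances):
    """Mean and variance of y = sum_i w_i * x_i + b.

    Assumption (not from the report): the x_i are independent, so the
    output variance is the sum of w_i^2 * var_i with no covariance terms.
    """
    mean = sum(w * m for w, m in zip(weights, means)) + bias
    var = sum(w * w * v for w, v in zip(weights, variances))
    return mean, var


def relu_moments(mean, var):
    """Mean and variance of max(0, X) for X ~ N(mean, var)."""
    if var <= 0.0:
        # Degenerate (deterministic) input: ReLU acts pointwise.
        return max(mean, 0.0), 0.0
    std = math.sqrt(var)
    z = mean / std
    # First moment: E[max(0, X)] = mean * Phi(z) + std * phi(z)
    m1 = mean * normal_cdf(z) + std * normal_pdf(z)
    # Second moment: E[max(0, X)^2] = (mean^2 + var) * Phi(z) + mean * std * phi(z)
    m2 = (mean * mean + var) * normal_cdf(z) + mean * std * normal_pdf(z)
    # Clamp to guard against tiny negative values from rounding.
    return m1, max(m2 - m1 * m1, 0.0)


if __name__ == "__main__":
    # Two independent normal inputs feeding one linear unit, then a ReLU.
    mu, var = linear_moments([1.0, -2.0], 0.5, [1.0, 1.0], [1.0, 0.25])
    print("linear:", mu, var)
    print("relu:  ", relu_moments(mu, var))
```

Average pooling and 2D convolution are also linear maps, so under the same independence assumption they can be handled by `linear_moments` with the appropriate weights (for example, equal weights of 1/k over a pooling window of k elements).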
