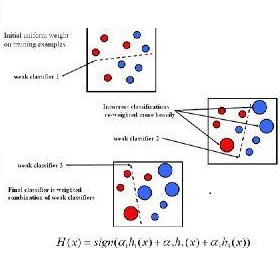

This paper studies binary classification in robust one-bit compressed sensing with adversarial errors. The model is assumed to be overparameterized, and the parameter of interest is assumed to be effectively sparse. AdaBoost is considered, and prediction error bounds are derived through its relation to the max-$\ell_1$-margin classifier. The developed theory is general and allows for heavy-tailed feature distributions, requiring only a weak moment assumption and an anti-concentration condition. Improved convergence rates are shown when the features satisfy a small deviation lower bound. In particular, the results explain why interpolating adversarial noise can be harmless for classification problems. Simulations illustrate the presented theory.
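
For orientation, the setting can be sketched in standard notation. The symbols below are assumptions, since the abstract itself fixes none: features $x_i \in \mathbb{R}^p$ and labels $y_i \in \{-1, +1\}$ for $i = 1, \dots, n$, with $p > n$, an effectively sparse parameter $\beta^*$, and a hypothetical index set $\mathcal{O}$ of labels flipped by the adversary. The paper's exact normalization and noise model may differ.

$$
y_i =
\begin{cases}
\operatorname{sign}(\langle x_i, \beta^* \rangle), & i \notin \mathcal{O}, \\
-\operatorname{sign}(\langle x_i, \beta^* \rangle), & i \in \mathcal{O},
\end{cases}
\qquad
\hat{\beta} \in \arg\max_{\|\beta\|_1 \le 1} \; \min_{1 \le i \le n} \; y_i \langle x_i, \beta \rangle .
$$

When the training data are linearly separable, which is typical in the overparameterized regime, the max-$\ell_1$-margin classifier $\hat{\beta}$ coincides, up to rescaling, with a minimizer of $\|\beta\|_1$ subject to $y_i \langle x_i, \beta \rangle \ge 1$ for all $i$. It therefore fits every training label, including the flipped ones, which is the sense in which the adversarial noise is interpolated.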
