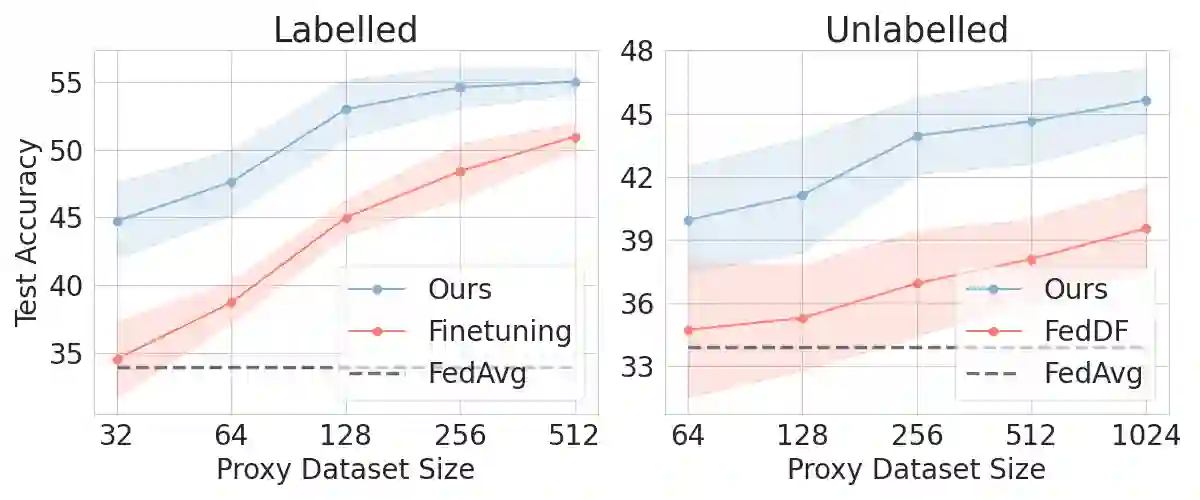

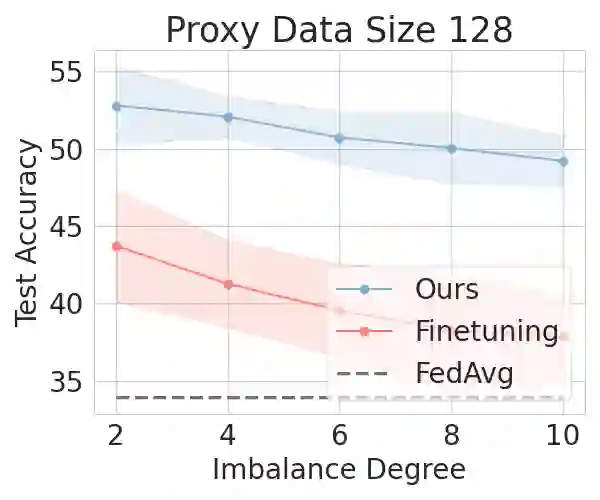

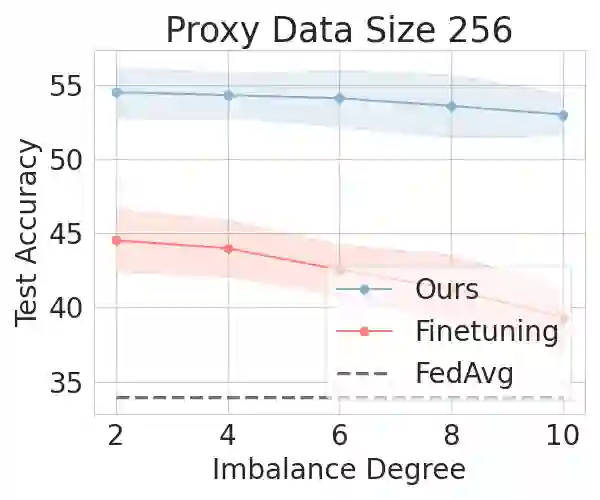

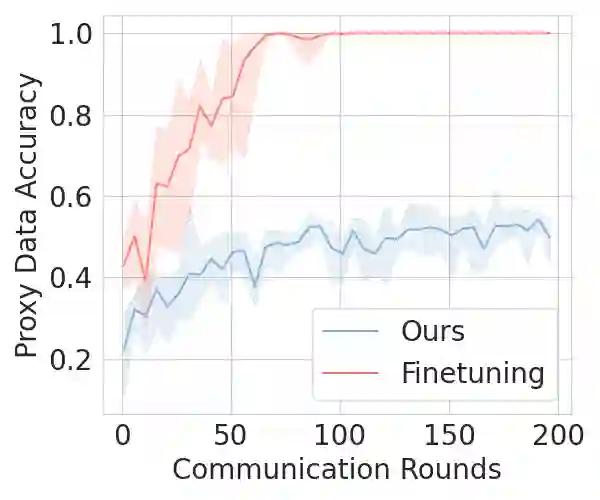

Non-IID data distribution across clients and poisoning attacks are two main challenges in real-world federated learning (FL) systems. Both have attracted considerable research interest and dedicated strategies, but no known solution addresses them in a unified framework. To overcome both challenges at once, we propose SmartFL, a generic approach that uses a subspace training technique to optimize the server-side aggregation process with a small amount of proxy data collected by the service provider itself. Specifically, the aggregation weight of each participating client at each round is optimized on the server-collected proxy data, which amounts to optimizing the global model within the convex hull of the client models. Since the number of tunable parameters optimized on the server side at each round equals the number of participating clients (and is thus independent of the model size), we are able to train a global model with a very large number of parameters using only a small amount of proxy data (e.g., around one hundred samples). With optimized aggregation, SmartFL ensures robustness against both heterogeneous and malicious clients, which is desirable in real-world FL, where either or both problems may occur. We provide theoretical analyses of the convergence and generalization capacity of SmartFL. Empirically, SmartFL achieves state-of-the-art performance both on FL with non-IID data distribution and on FL with malicious clients. The source code will be released.
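To make the idea of optimizing aggregation weights inside the convex hull of the client models concrete, here is a minimal sketch. It is not the authors' implementation. It assumes linear regression client models, a mean-squared-error loss on the proxy data, and a softmax parameterization that keeps the weights on the simplex. The names `optimize_aggregation` and `proxy_loss`, the learning rate, and the step count are all hypothetical choices made for illustration.

```python
import numpy as np

def softmax(z):
    """Map unconstrained logits to non-negative weights that sum to 1."""
    z = z - z.max()
    e = np.exp(z)
    return e / e.sum()

def proxy_loss(w, X, y):
    """Mean squared error of a linear model w on the proxy data (assumed loss)."""
    return float(np.mean((X @ w - y) ** 2))

def optimize_aggregation(client_models, X, y, steps=200, lr=0.1):
    """Learn one aggregation weight per client by gradient descent on proxy data.

    client_models: array of shape (K, d), one linear model per client.
    Only K logits are tuned, regardless of the model dimension d.
    """
    W = np.asarray(client_models, dtype=float)
    logits = np.zeros(W.shape[0])
    for _ in range(steps):
        alpha = softmax(logits)
        w = alpha @ W                              # global model in the convex hull
        grad_w = 2.0 * X.T @ (X @ w - y) / len(y)  # dLoss/dw
        grad_alpha = W @ grad_w                    # dLoss/dalpha (chain rule)
        # Back-propagate through the softmax to the logits.
        grad_logits = alpha * (grad_alpha - alpha @ grad_alpha)
        logits -= lr * grad_logits
    return softmax(logits)

# Toy setup (all values are illustrative assumptions): two honest clients
# near the true model and one poisoned client, with 100 proxy samples.
rng = np.random.default_rng(0)
w_true = np.array([1.0, -2.0, 0.5])
X = rng.normal(size=(100, 3))
y = X @ w_true + 0.01 * rng.normal(size=100)
clients = np.stack([
    w_true + 0.05 * rng.normal(size=3),
    w_true + 0.05 * rng.normal(size=3),
    -5.0 * w_true,                                 # poisoned update
])

alpha = optimize_aggregation(clients, X, y)
print("aggregation weights:", alpha)
print("optimized loss:", proxy_loss(alpha @ clients, X, y))
print("uniform-average loss:", proxy_loss(clients.mean(axis=0), X, y))
```

In this toy setting the server tunes only three numbers, one per client, and the weight of the poisoned client is driven toward zero because including it increases the proxy loss. Other ways of constraining the weights to the simplex, such as projected gradient descent, would serve the same purpose.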
