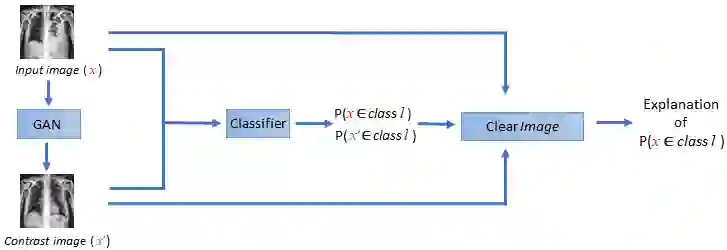

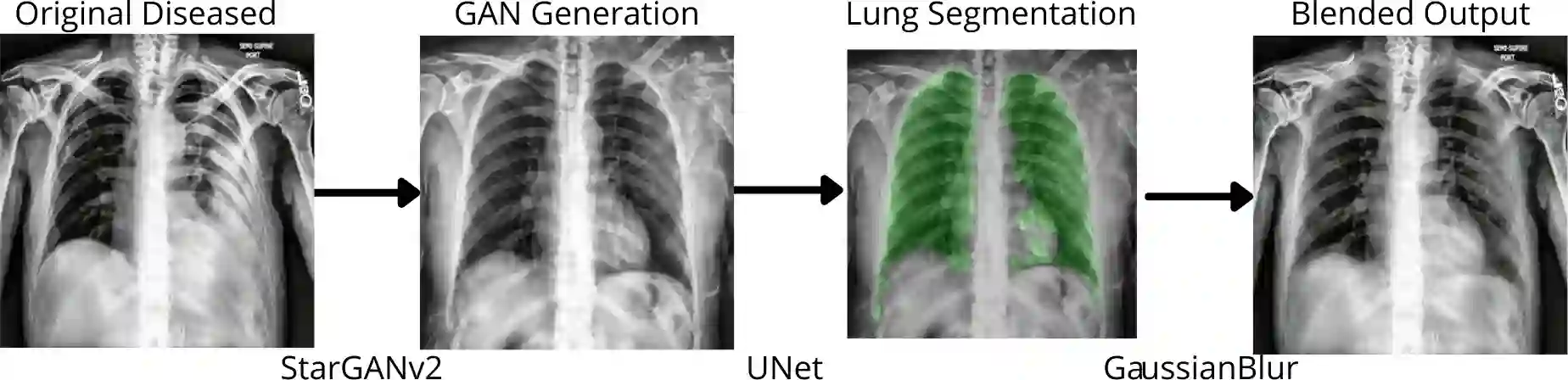

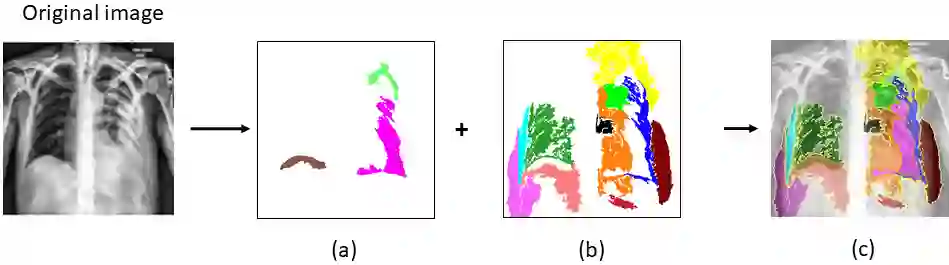

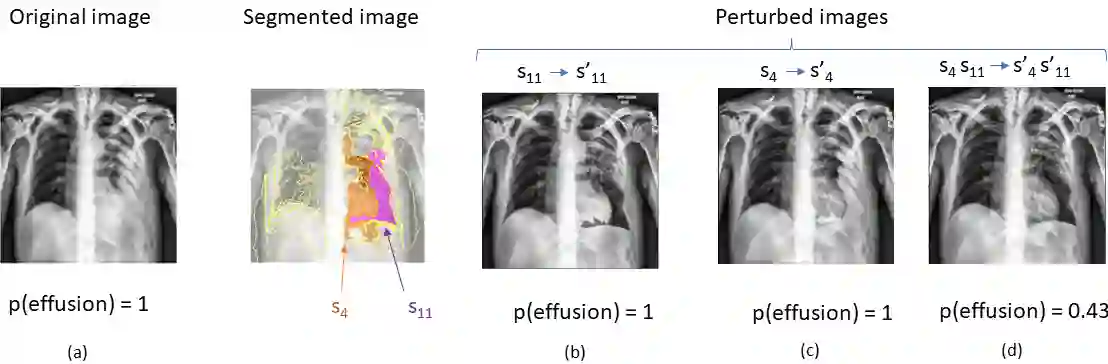

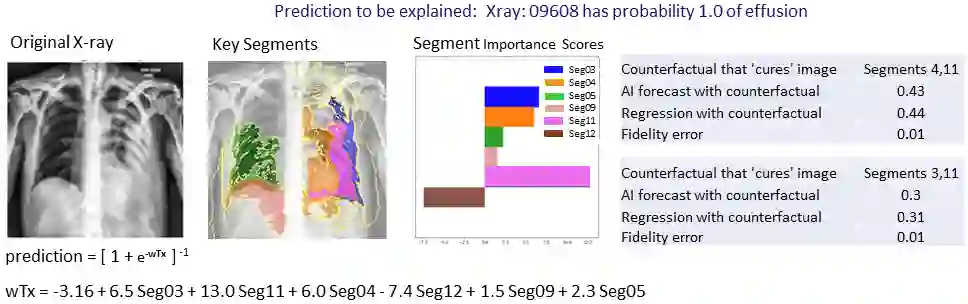

This paper introduces CLEAR Image, a novel explainable AI method. CLEAR Image is based on the view that a satisfactory explanation should be contrastive, counterfactual and measurable. It explains an image's classification probability by contrasting the image with a corresponding image generated automatically via adversarial learning. This enables both salient segmentation and perturbations that faithfully determine each segment's importance. CLEAR Image was applied to a medical imaging case study, where it outperformed methods such as Grad-CAM and LIME by an average of 27% as measured by a novel pointing game metric. CLEAR Image excels at identifying cases of "causal overdetermination", where an image contains multiple patches, any one of which is sufficient by itself to cause the classification probability to be close to one.
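To make the idea of contrast-based perturbation and causal overdetermination concrete, the following is a minimal toy sketch, not the authors' implementation. It assumes a hypothetical classifier `toy_predict`, a hand-made segmentation map, and an all-zero contrast image standing in for the adversarially generated one; the helper names `swap_segments`, `removal_drop`, and `sufficient_segments` are illustrative only.

```python
import numpy as np

def swap_segments(target, source, segments, ids):
    """Return a copy of `target` whose pixels in the given segment ids come from `source`."""
    out = target.copy()
    mask = np.isin(segments, list(ids))
    out[mask] = source[mask]
    return out

def removal_drop(image, contrast, segments, ids, predict):
    """Drop in class probability when the given segments are replaced by contrast pixels."""
    return predict(image) - predict(swap_segments(image, contrast, segments, ids))

def sufficient_segments(image, contrast, segments, predict, threshold=0.9):
    """Segments that, pasted alone into the contrast image, push the probability past `threshold`."""
    ids = [int(s) for s in np.unique(segments) if s != 0]  # 0 is treated as background
    return [s for s in ids
            if predict(swap_segments(contrast, image, segments, [s])) >= threshold]

def toy_predict(img):
    """Hypothetical classifier: probability is high if EITHER corner patch is bright."""
    return float(max(img[0:2, 0:2].mean(), img[2:4, 2:4].mean()))

# Toy 4x4 image with two bright patches; the contrast image has neither (assumption).
image = np.zeros((4, 4))
image[0:2, 0:2] = 1.0
image[2:4, 2:4] = 1.0
contrast = np.zeros((4, 4))

# Hand-made segmentation: segment 1 = top-left patch, segment 2 = bottom-right patch.
segments = np.zeros((4, 4), dtype=int)
segments[0:2, 0:2] = 1
segments[2:4, 2:4] = 2

drop_a = removal_drop(image, contrast, segments, [1], toy_predict)
drop_b = removal_drop(image, contrast, segments, [2], toy_predict)
drop_ab = removal_drop(image, contrast, segments, [1, 2], toy_predict)
sufficient = sufficient_segments(image, contrast, segments, toy_predict)

print(drop_a, drop_b, drop_ab)  # 0.0 0.0 1.0
print(sufficient)               # [1, 2]
```

In this toy setting, removing either patch alone leaves the probability unchanged, so a method that scores segments only by single removals would rate both as unimportant. Testing each segment for sufficiency against the contrast image exposes that both patches independently drive the classification, which is the overdetermination pattern described above.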
