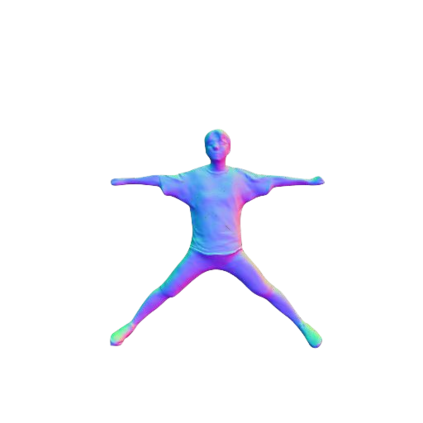

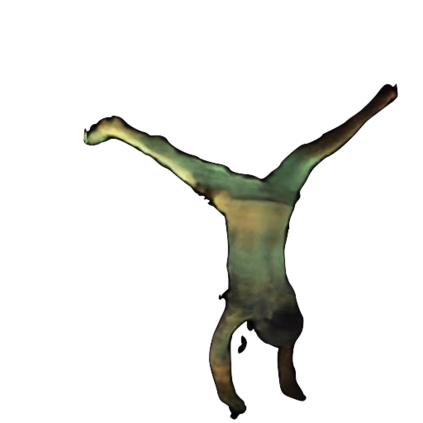

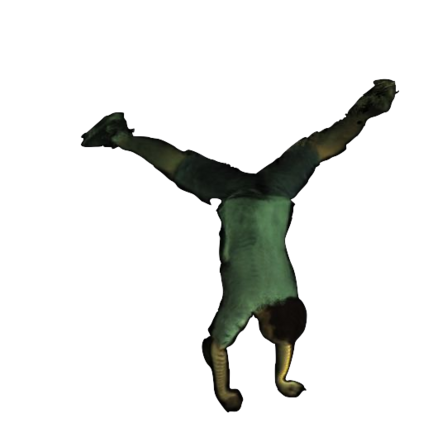

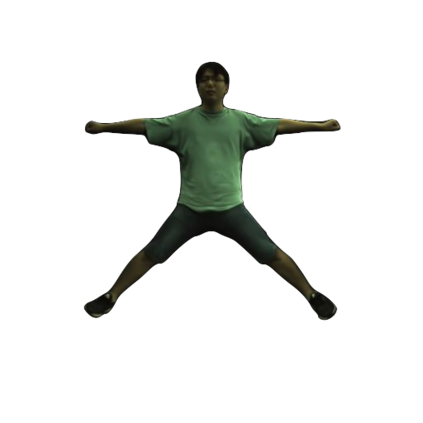

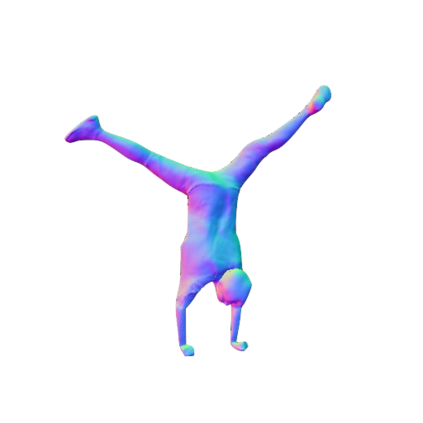

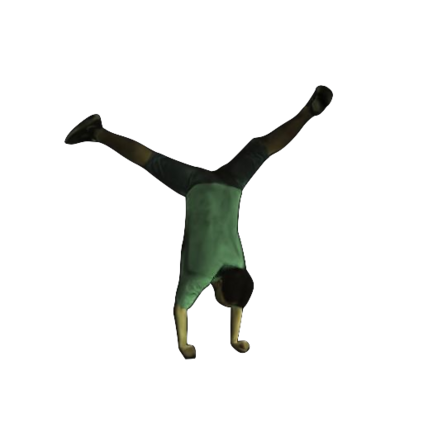

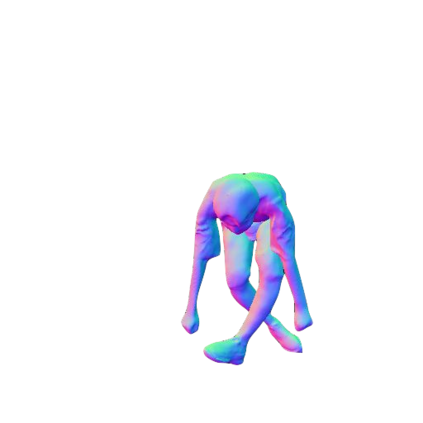

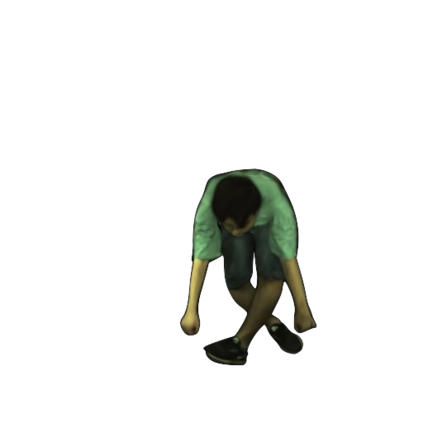

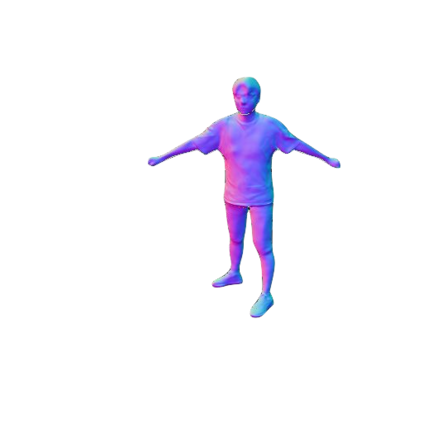

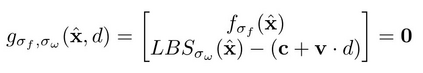

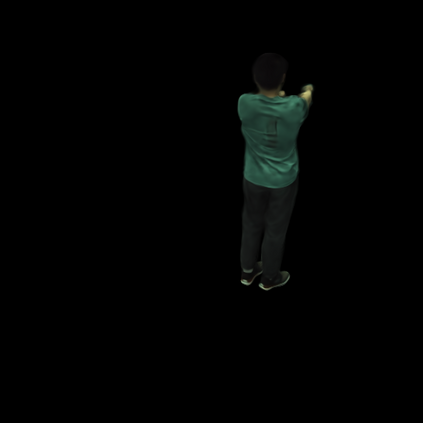

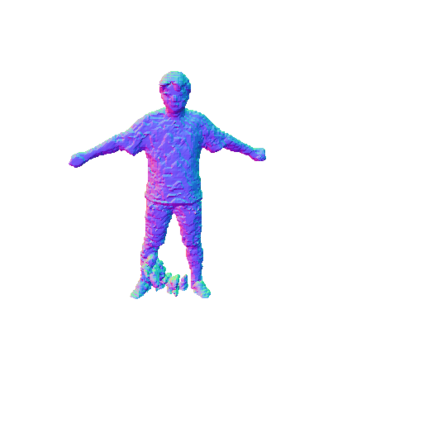

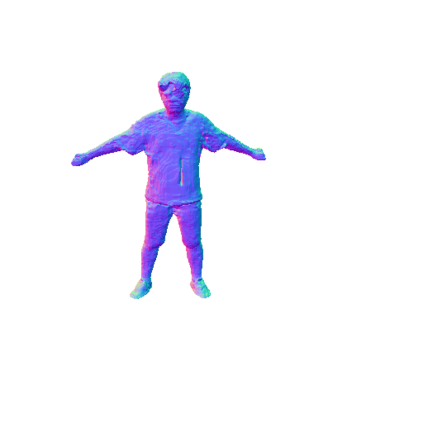

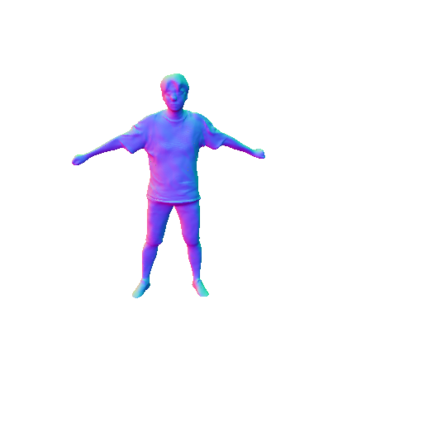

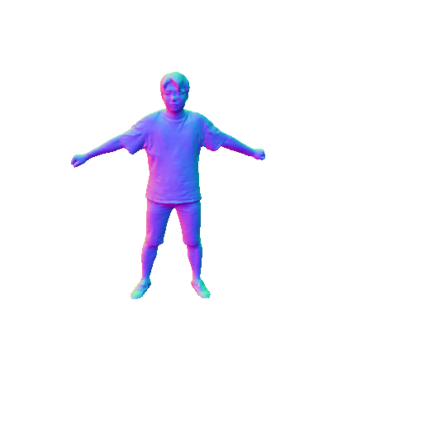

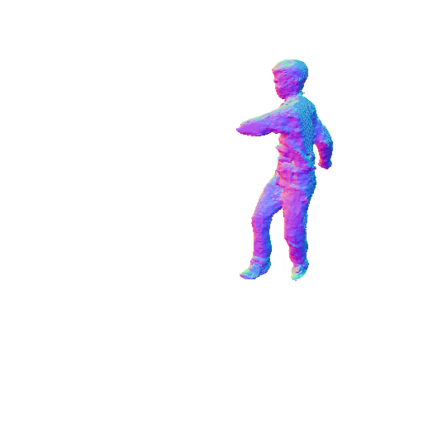

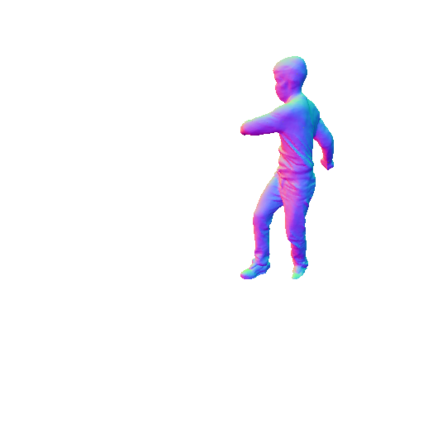

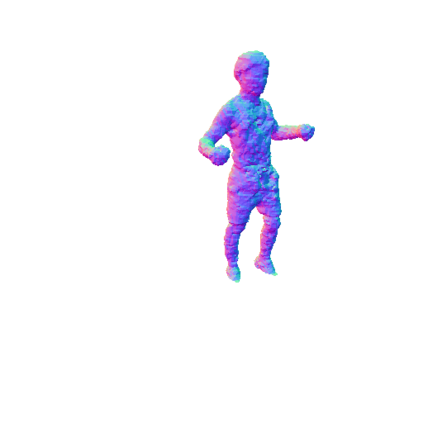

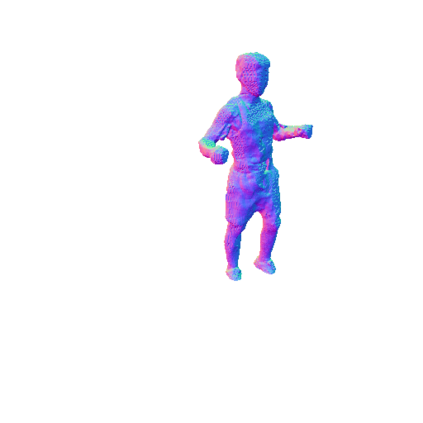

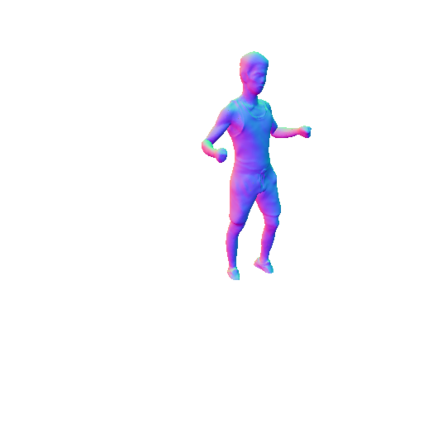

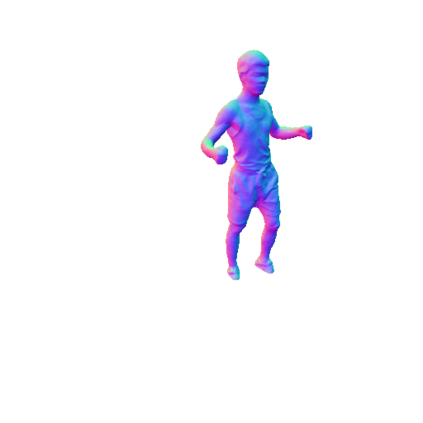

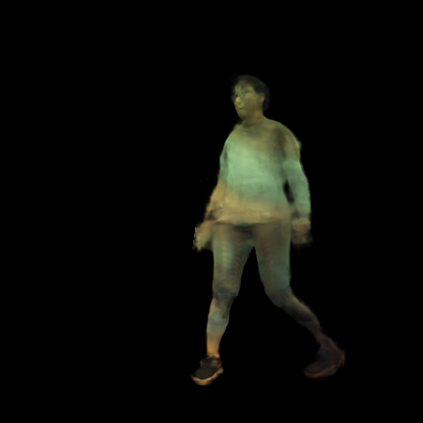

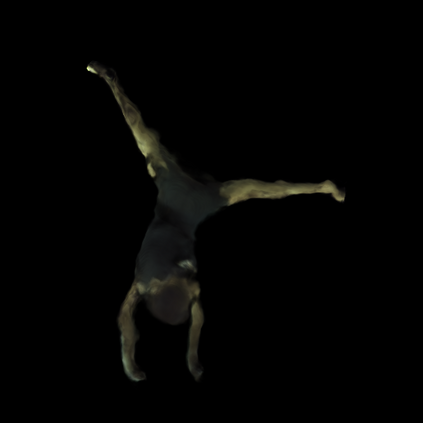

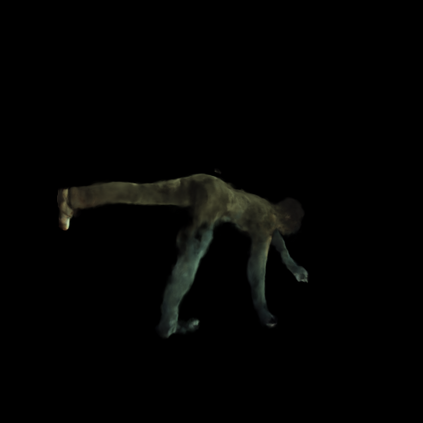

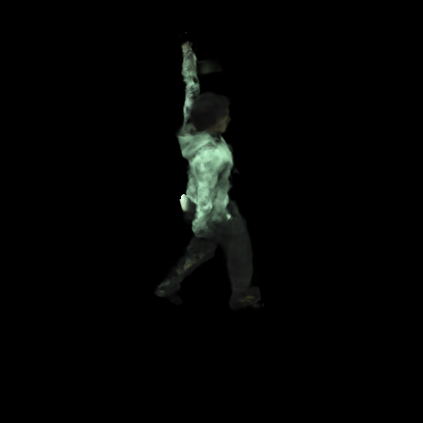

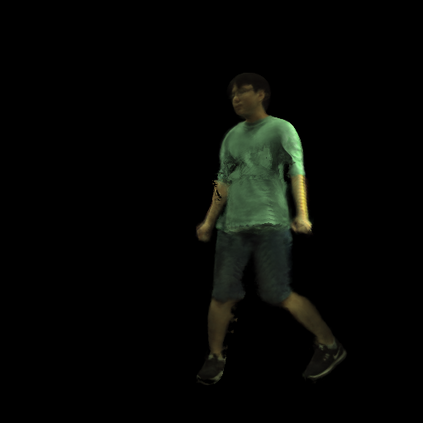

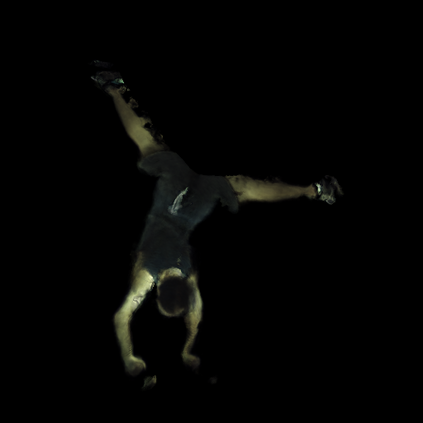

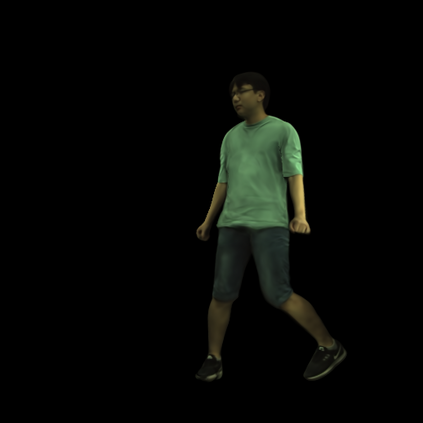

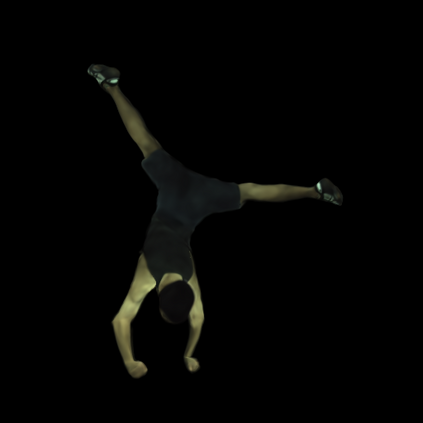

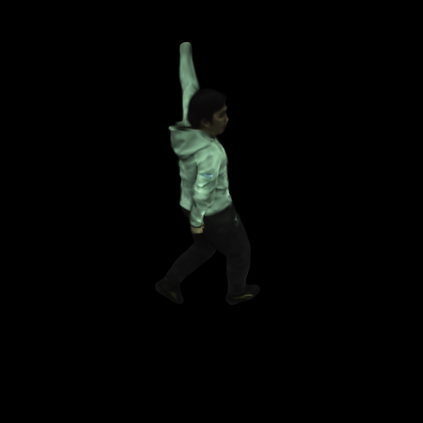

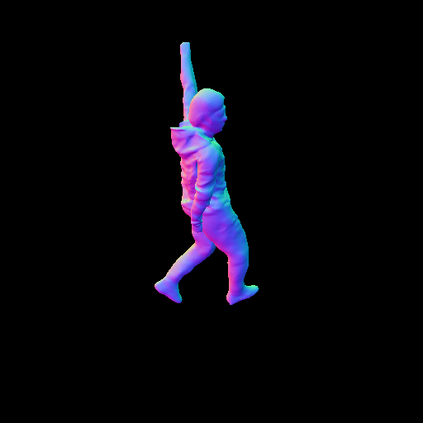

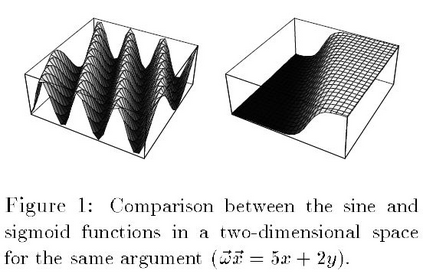

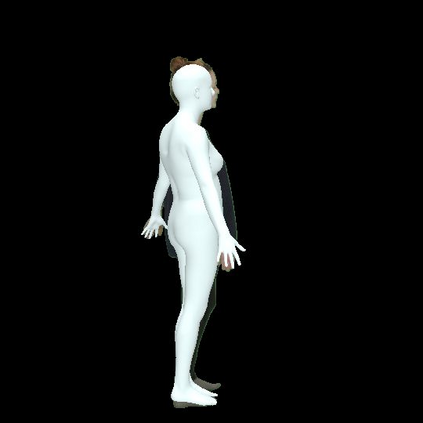

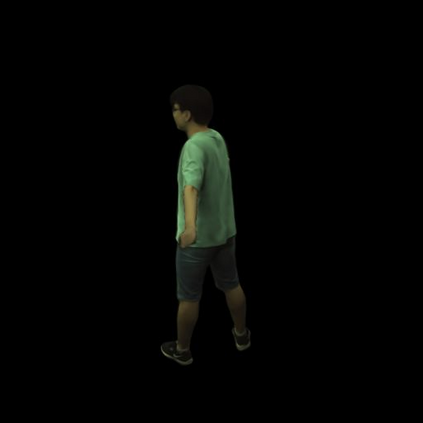

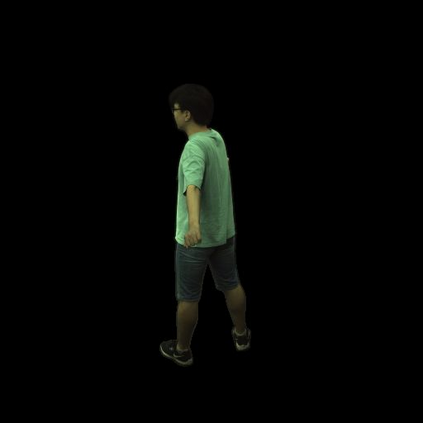

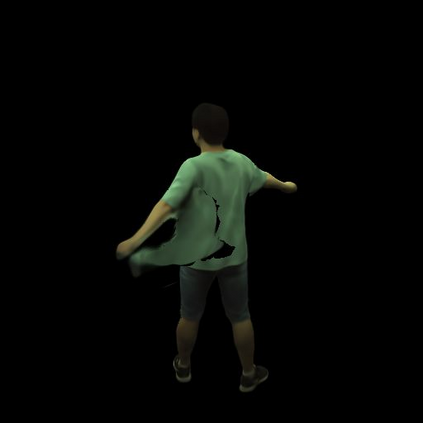

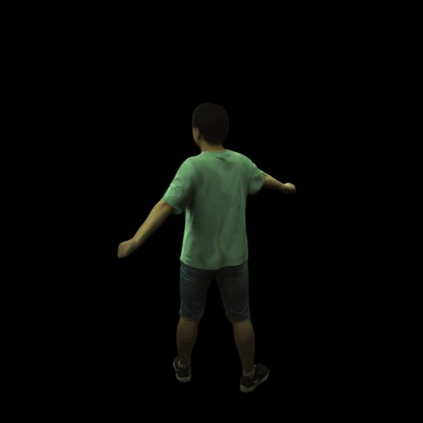

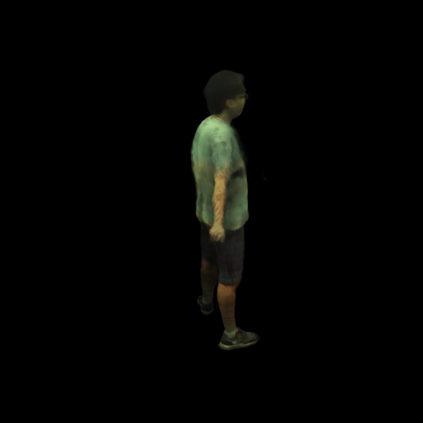

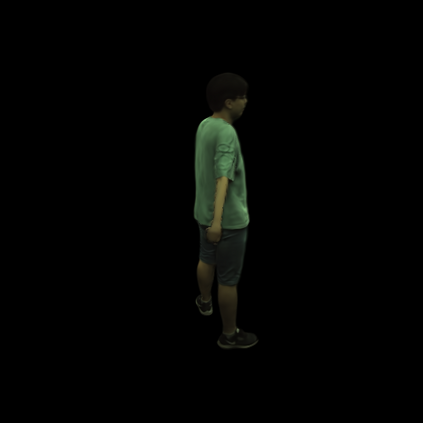

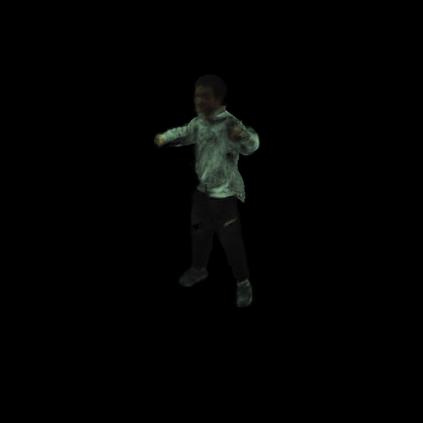

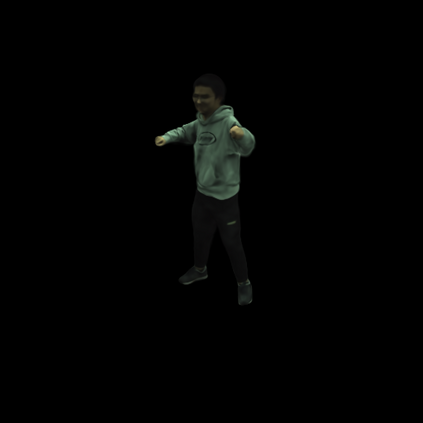

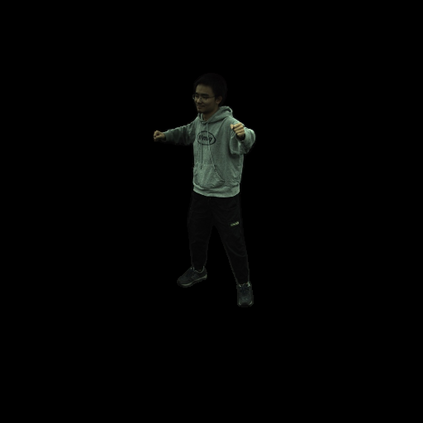

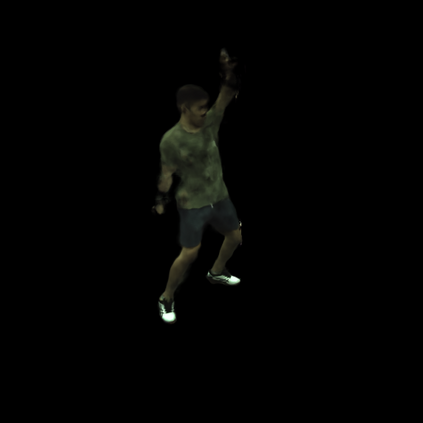

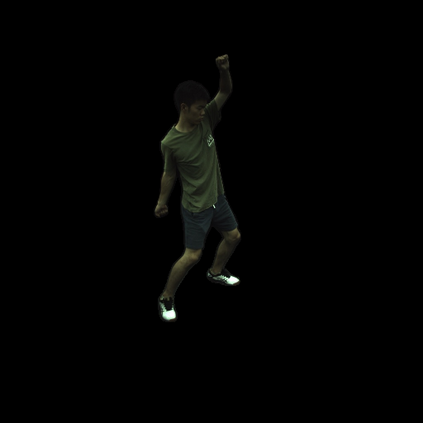

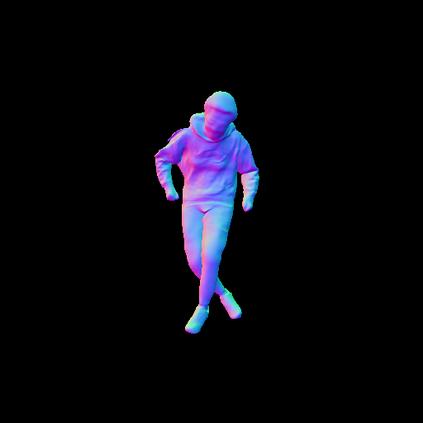

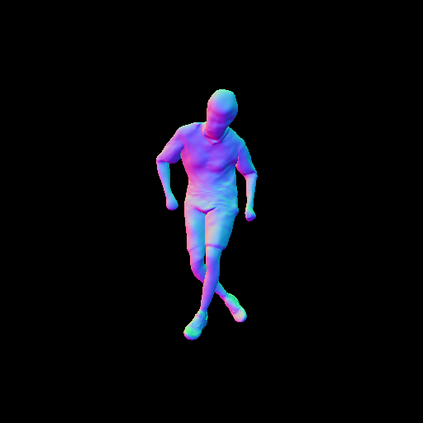

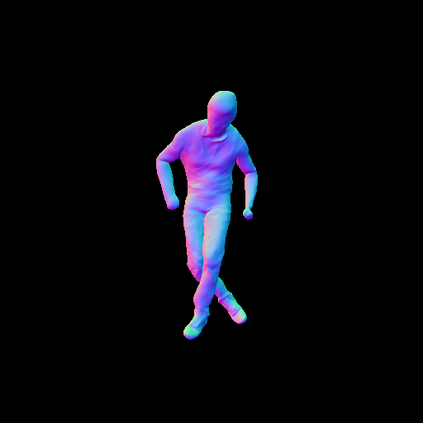

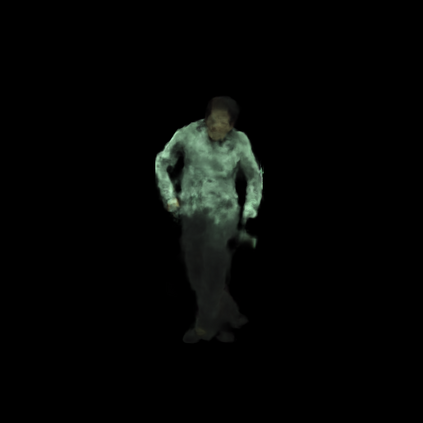

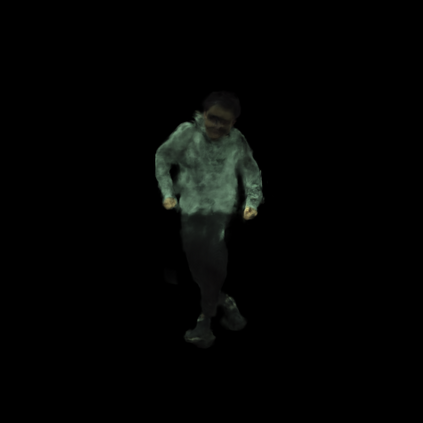

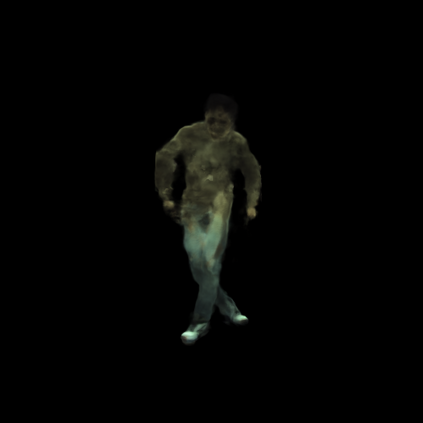

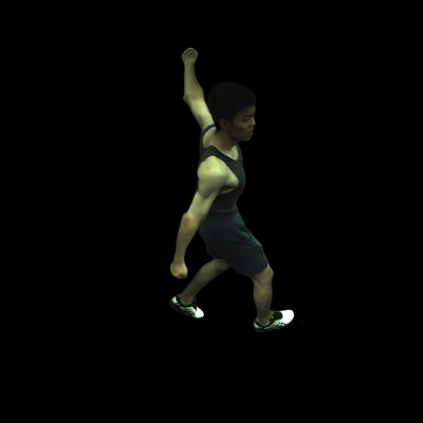

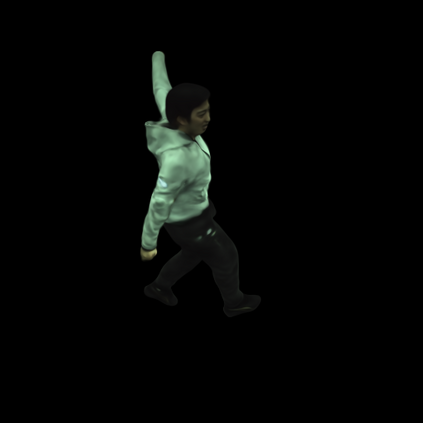

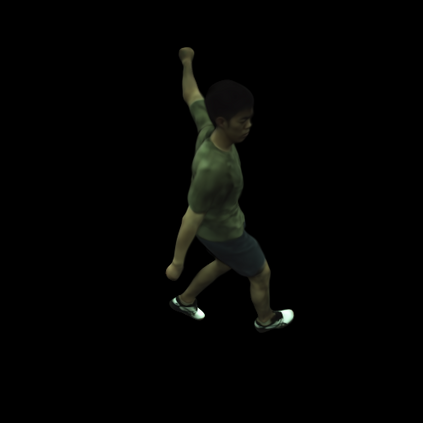

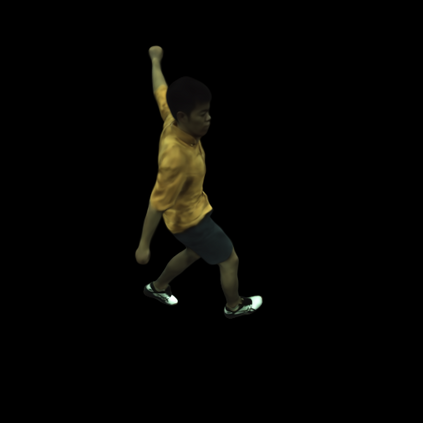

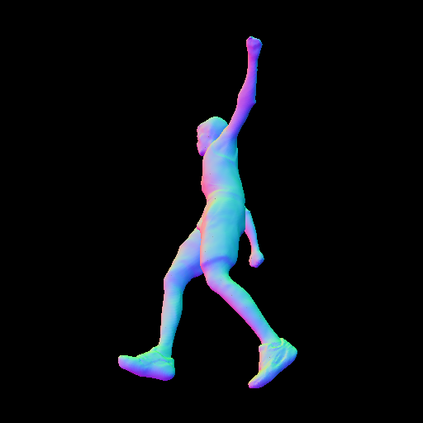

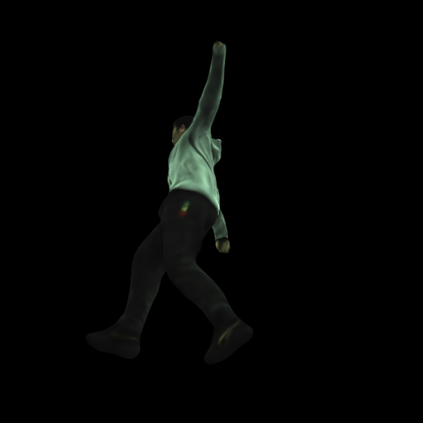

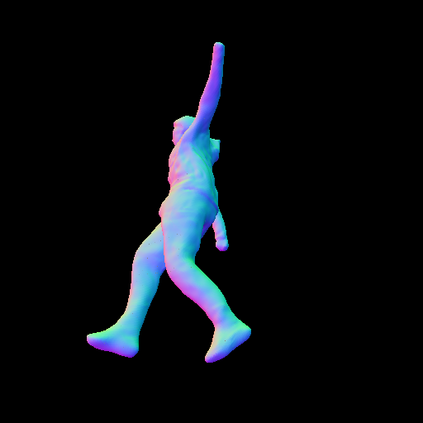

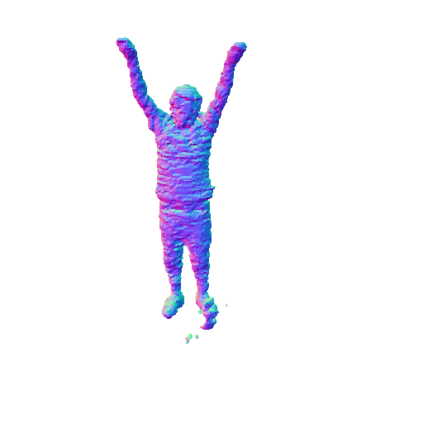

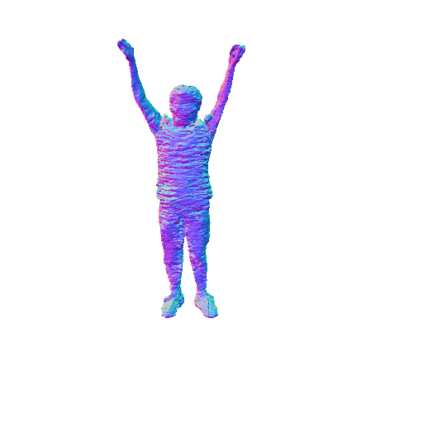

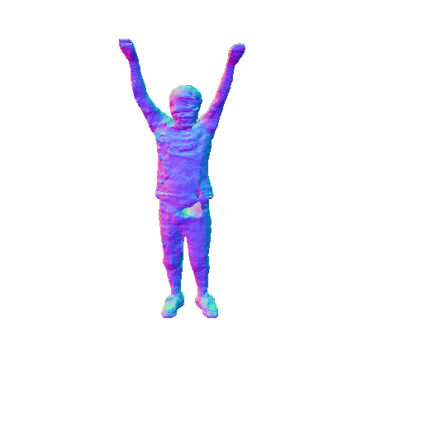

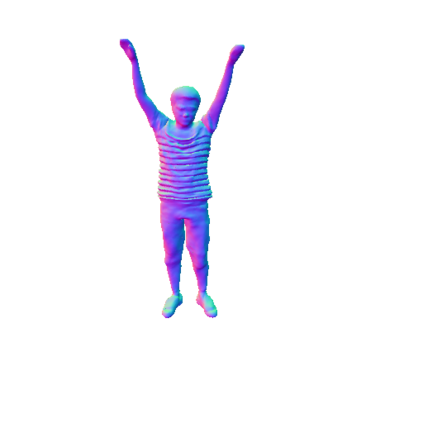

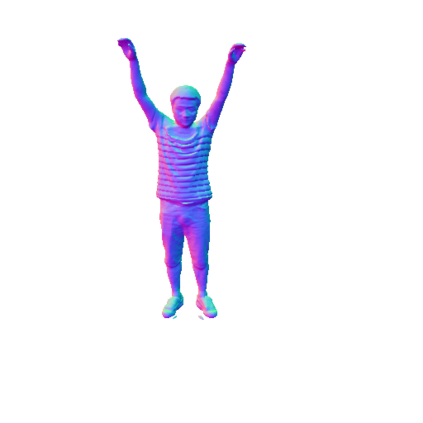

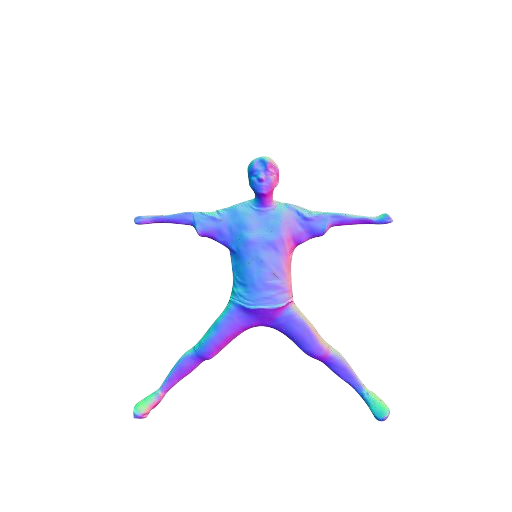

Combining human body models with differentiable rendering has recently enabled animatable avatars of clothed humans from sparse sets of multi-view RGB videos. While state-of-the-art approaches achieve realistic appearance with neural radiance fields (NeRF), the inferred geometry often lacks detail due to missing geometric constraints. Further, animating avatars in out-of-distribution poses is not yet possible because the mapping from observation space to canonical space does not generalize faithfully to unseen poses. In this work, we address these shortcomings and propose a model to create animatable clothed human avatars with detailed geometry that generalize well to out-of-distribution poses. To achieve detailed geometry, we combine an articulated implicit surface representation with volume rendering. For generalization, we propose a novel joint root-finding algorithm for simultaneous ray-surface intersection search and correspondence search. Our algorithm enables efficient point sampling and accurate point canonicalization while generalizing well to unseen poses. We demonstrate that our proposed pipeline can generate clothed avatars with high-quality pose-dependent geometry and appearance from a sparse set of multi-view RGB videos. Our method achieves state-of-the-art performance on geometry and appearance reconstruction while creating animatable avatars that generalize well to out-of-distribution poses beyond the small number of training poses.

翻译:将人类身体模型与不同变相结合起来,最近使得衣着人能够从几组多视图 RGB 视频的稀薄成形。虽然最先进的方法能够以神经光场(NERF)取得现实的外观,但推断几何往往缺乏细节。此外,由于缺少几何限制,因此,在分布之外对动动动动动动动动动动动动画还不可能,因为从观测空间到可视空间的映射图无法将忠实地概括为隐形。在这项工作中,我们解决了这些缺点,并提出了一个模型,以创建具有详细地理测量的可成像的可成型人衣的衣着面形形形形形形形形形形色色色的模型。为了实现详细的几何貌,我们将一个清晰的隐含的表面表示与体形体形色色色色相结合。为了概括,我们提出了一个新的用于同时光-表面交叉搜索和通信搜索的根基算法,因为我们的算法使得有效的点取样和准确的点可以概括为看不见的外观。我们提议的管道可以产生具有高质量、高度依赖的小型的面形形形形形形形形形形形形形形形形形形形形形形形形形形形形形色和外图的外图的外图和外图的外形形形形形形形形形形形形形形形形形形形形形影。