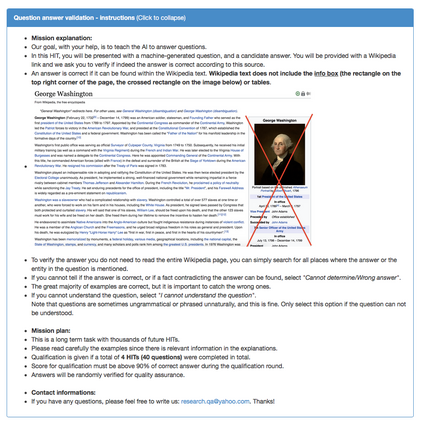

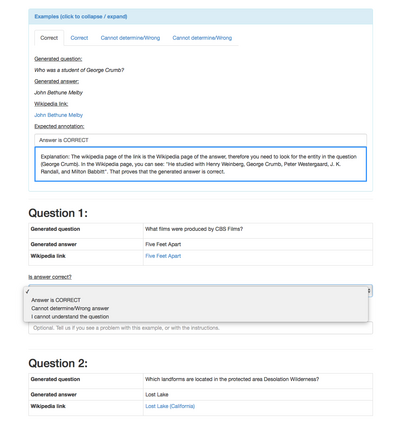

Existing benchmarks for open-domain question answering (ODQA) typically focus on questions whose answers can be extracted from a single paragraph. By contrast, many natural questions, such as "What players were drafted by the Brooklyn Nets?", have a list of answers. Answering such questions requires retrieving and reading many passages from a large corpus. We introduce QAMPARI, an ODQA benchmark in which the answers to questions are lists of entities spread across many paragraphs. We created QAMPARI by (a) generating questions with multiple answers from Wikipedia's knowledge graph and tables, (b) automatically pairing answers with supporting evidence in Wikipedia paragraphs, and (c) manually paraphrasing questions and validating each answer. We train ODQA models from the retrieve-and-read family and find that QAMPARI is challenging in terms of both passage retrieval and answer generation, with the best model reaching an F1 score of 26.6. Our results highlight the need for ODQA models that handle a broad range of question types, including both single-answer and multi-answer questions.
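The abstract reports results as an F1 score over answer lists. As a rough illustration of how such a score can be computed for a multi-answer question, the sketch below treats the predicted and gold answers as sets of normalized strings and takes the harmonic mean of precision and recall, then averages over questions. This is a minimal sketch under stated assumptions, not QAMPARI's official scorer: the helpers `normalize`, `list_f1`, and `macro_f1` are hypothetical, and the SQuAD-style normalization, exact-match comparison, absence of alias handling, and macro-averaging are all illustrative choices.

```python
import re
import string


def normalize(answer: str) -> str:
    """Hypothetical SQuAD-style normalization: lowercase, drop punctuation and
    English articles, collapse whitespace. The benchmark's scorer may differ."""
    answer = answer.lower()
    answer = "".join(ch for ch in answer if ch not in string.punctuation)
    answer = re.sub(r"\b(a|an|the)\b", " ", answer)
    return " ".join(answer.split())


def _answer_set(answers: list[str]) -> set[str]:
    """Normalize a list of answers into a set, discarding empty strings."""
    normalized = (normalize(a) for a in answers)
    return {a for a in normalized if a}


def list_f1(predicted: list[str], gold: list[str]) -> float:
    """Set-based F1 between one predicted answer list and one gold answer list.
    Assumes answers match only by exact equality after normalization."""
    pred_set = _answer_set(predicted)
    gold_set = _answer_set(gold)
    if not pred_set or not gold_set:
        # Two empty lists count as a perfect match; otherwise the score is zero.
        return float(pred_set == gold_set)
    overlap = len(pred_set & gold_set)
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_set)
    recall = overlap / len(gold_set)
    return 2 * precision * recall / (precision + recall)


def macro_f1(predictions: list[list[str]], golds: list[list[str]]) -> float:
    """Average per-question F1 over a dataset (an assumed aggregation scheme)."""
    if len(predictions) != len(golds):
        raise ValueError("predictions and golds must have the same length")
    if not predictions:
        return 0.0
    scores = [list_f1(p, g) for p, g in zip(predictions, golds)]
    return sum(scores) / len(scores)
```

For example, `list_f1(["Player One", "Player Two", "Player Three"], ["Player One", "Player Two", "Player Four", "Player Five"])` (hypothetical names) gives precision 2/3 and recall 1/2, so F1 = 4/7 ≈ 0.571. Because recall counts every gold answer, a model that reads only a few retrieved passages is penalized under this metric even when everything it outputs is correct.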
