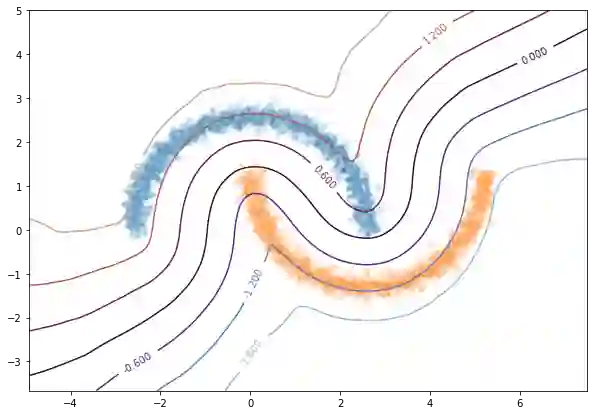

Adversarial examples have exposed the vulnerability of deep neural networks to small local noise. It has been shown that constraining their Lipschitz constant should enhance robustness but makes them harder to train with classical loss functions. We propose a new framework for binary classification, based on optimal transport, which integrates this Lipschitz constraint as a theoretical requirement. Specifically, we learn 1-Lipschitz networks using a new loss that is a hinge-regularized version of the Kantorovich-Rubinstein dual formulation for Wasserstein distance estimation. This loss function has a direct interpretation in terms of adversarial robustness and comes with a certifiable robustness bound. We prove that this hinge-regularized version is still the dual formulation of an optimal transport problem and that it admits a solution. We also establish several geometrical properties of this optimal solution and extend the approach to multi-class problems. Experiments show that the proposed approach provides the expected robustness guarantees without any significant drop in accuracy. On the proposed models, adversarial examples visibly and meaningfully change the input, which provides an explanation for the classification.
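
As a rough illustration of the kind of loss described above, the following sketch computes a hinge-regularized Kantorovich-Rubinstein objective on a batch of scores. The abstract does not give the formula, so the exact form is an assumption: a KR term (difference of mean scores between the two classes) plus a weighted hinge term. The names `hinge_kr_loss`, `certified_radius`, `lam`, and `margin`, the sign convention, and the default values are all hypothetical. The scores are assumed to come from a network that is 1-Lipschitz with respect to the L2 norm; the sketch does not enforce that constraint.

```python
import numpy as np

def hinge_kr_loss(scores, labels, lam=10.0, margin=1.0):
    """Assumed form of a hinge-regularized KR loss (to be minimized).

    scores: outputs f(x) of a network assumed to be 1-Lipschitz
    labels: class labels in {-1, +1}
    lam:    hypothetical weight of the hinge term
    margin: hypothetical hinge margin
    """
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels, dtype=float)
    pos = scores[labels > 0]
    neg = scores[labels < 0]
    # KR term: minimizing it pushes the mean score of the positive
    # class above the mean score of the negative class.
    kr_term = neg.mean() - pos.mean()
    # Hinge term: penalizes samples whose signed score is below the margin.
    hinge_term = np.maximum(0.0, margin - labels * scores).mean()
    return kr_term + lam * hinge_term

def certified_radius(scores):
    """For a function that is 1-Lipschitz in the L2 norm, no perturbation
    with L2 norm smaller than |f(x)| can change the sign of f(x)."""
    return np.abs(np.asarray(scores, dtype=float))

scores = [2.0, 1.0, -1.0, -3.0]
labels = [1, 1, -1, -1]
loss = hinge_kr_loss(scores, labels)
radii = certified_radius(scores)
```

In this toy batch every sample already satisfies the margin, so only the KR term contributes to the loss. The radius function reflects the standard Lipschitz argument behind a certifiable bound: the magnitude of the score lower-bounds the distance to the decision boundary.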
