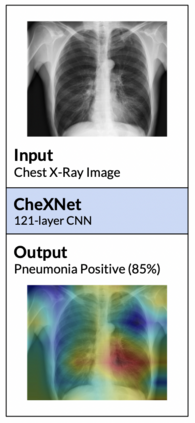

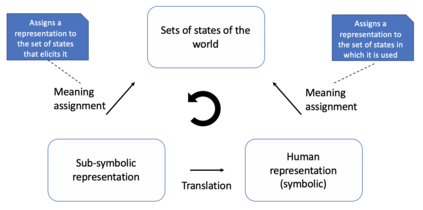

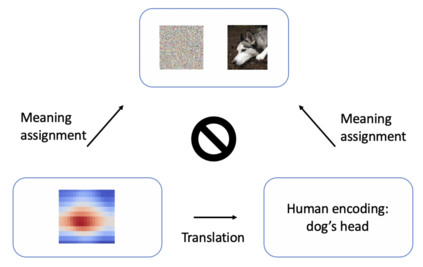

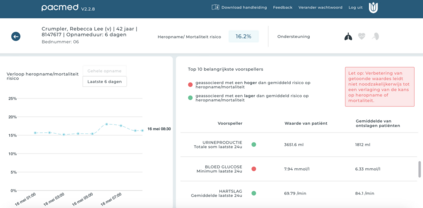

The recent spike in certified Artificial Intelligence (AI) tools for healthcare has renewed the debate around the adoption of this technology. One thread of this debate concerns Explainable AI and its promise to render AI devices more transparent and trustworthy. A few voices active in the medical AI space have expressed concerns about the reliability of Explainable AI techniques, especially feature attribution methods, questioning their use and their inclusion in guidelines and standards. Although these concerns are valid, we argue that existing criticism of the viability of post-hoc local explainability methods throws the baby out with the bathwater by generalizing a problem that is specific to image data. We begin by characterizing the problem as a lack of semantic match between explanations and human understanding. To understand when feature importance can be used reliably, we introduce a distinction between feature importance of low-level and high-level features. We argue that for data types where low-level features come endowed with clear semantics, such as tabular data like Electronic Health Records (EHRs), semantic match can be obtained, and thus feature attribution methods can still be employed in a meaningful and useful way.
