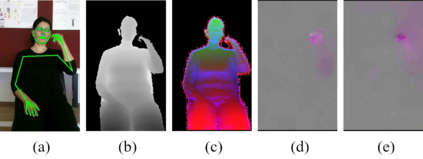

Sign language is commonly used by deaf or mute people to communicate, but it requires extensive effort to master. It is usually performed with fast yet delicate movements of hand gestures, body posture, and even facial expressions. Current Sign Language Recognition (SLR) methods usually extract features via deep neural networks and suffer from overfitting due to limited and noisy data. Recently, skeleton-based action recognition has attracted increasing attention due to its subject-invariant and background-invariant nature, whereas skeleton-based SLR remains underexplored due to the lack of hand annotations. Some researchers have tried to use off-line hand pose trackers to obtain hand keypoints and aid in recognizing sign language via recurrent neural networks. Nevertheless, none of them outperforms RGB-based approaches yet. To this end, we propose a novel Skeleton Aware Multi-modal Framework with a Global Ensemble Model (GEM) for isolated SLR (SAM-SLR-v2) to learn and fuse multi-modal feature representations towards a higher recognition rate. Specifically, we propose a Sign Language Graph Convolution Network (SL-GCN) to model the embedded dynamics of skeleton keypoints and a Separable Spatial-Temporal Convolution Network (SSTCN) to exploit skeleton features. The skeleton-based predictions are fused with other RGB- and depth-based modalities by the proposed late-fusion GEM to provide global information and make a faithful SLR prediction. Experiments on three isolated SLR datasets demonstrate that our proposed SAM-SLR-v2 framework is exceedingly effective and achieves state-of-the-art performance with significant margins. Our code will be available at https://github.com/jackyjsy/SAM-SLR-v2
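
To make the idea of late fusion concrete, the following is a minimal sketch of one common scheme: each modality produces its own class scores, and the scores are combined by a weighted sum before taking the arg-max. It is an illustration only, not the GEM proposed in the paper, whose exact form the abstract does not specify. The function names `softmax` and `late_fuse`, the three-class toy scores, and the weight values are all assumptions made for this example.

```python
import math


def softmax(logits):
    """Turn raw class scores into probabilities (numerically stable)."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def late_fuse(scores_by_modality, weights):
    """Weighted sum of per-modality class probabilities.

    scores_by_modality: dict mapping a modality name to its list of logits.
    weights: dict mapping the same names to fusion weights
             (hypothetical values; a real system would tune or learn them).
    Returns (predicted_class_index, fused_scores).
    """
    if set(scores_by_modality) != set(weights):
        raise ValueError("modalities and weights must use the same names")
    num_classes = len(next(iter(scores_by_modality.values())))
    fused = [0.0] * num_classes
    for name, logits in scores_by_modality.items():
        if len(logits) != num_classes:
            raise ValueError(f"modality {name!r} has a different class count")
        for c, p in enumerate(softmax(logits)):
            fused[c] += weights[name] * p
    best = max(range(num_classes), key=fused.__getitem__)
    return best, fused


# Made-up logits for a 3-class toy problem (not data from the paper).
scores = {
    "skeleton": [2.0, 0.5, 0.1],
    "rgb": [0.2, 1.5, 0.3],
    "depth": [0.1, 1.2, 0.4],
}
weights = {"skeleton": 1.0, "rgb": 0.5, "depth": 0.5}
prediction, fused = late_fuse(scores, weights)
print(prediction, [round(s, 3) for s in fused])
```

In this toy setup the skeleton stream is trusted twice as much as the RGB and depth streams, so its preferred class wins; with equal weights the other two streams would outvote it. How such weights are chosen is exactly the design question an ensemble model has to answer.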
