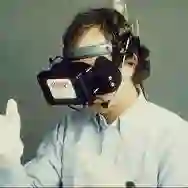

A body of research in Affective Computing suggests that some characteristics of users' affective states can be inferred in real time by analyzing their electrophysiological activity. However, it is not clear how the information extracted from electrophysiological signals should be used to create visual representations of the affective states of Virtual Reality (VR) users. Visualizing users' affective states in VR could enable biofeedback therapies for mental health care. Understanding how to visualize affective states in VR requires an interdisciplinary approach that integrates psychology, electrophysiology, and audio-visual design. Therefore, this review integrates previous studies from these fields to understand how to develop virtual environments that automatically create visual representations of users' affective states. The manuscript addresses this challenge in four sections. First, theories of emotion and affect are summarized. Second, evidence that visual and sound cues tend to be associated with affective states is discussed. Third, some of the available methods for assessing affect are described. Fourth and finally, five practical considerations for developing VR environments for affect visualization are presented.

翻译:Affective Economic的一组研究表明,通过实时分析用户的电生理活动,可以推断用户的感官状态的某些特征。然而,目前尚不清楚如何利用从电生理信号中提取的信息对虚拟现实(VR)用户的感官状态进行视觉展示。VR中用户感官状态的视觉化可导致为心理健康护理提供生物反馈疗法。了解VR中如何直观地显示感官状态需要一种跨学科的方法,将心理学、电生理学和视听设计结合起来。因此,本审查的目的是综合这些领域以前的研究,以了解如何开发虚拟环境,从而自动生成用户感官状态的视觉表现。手稿将这一挑战分为四节:第一,与情感和影响有关的理论摘要。第二,讨论显示视觉和声音提示往往与感官状态有关的证据。第三,一些评估影响的方法得到了描述。第四和最后一节载有五个实际考虑因素,用于发展影响视觉化的虚拟现实环境。