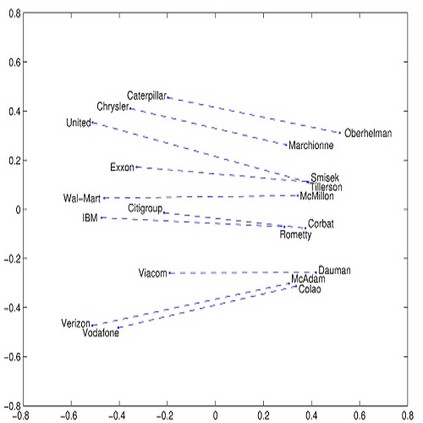

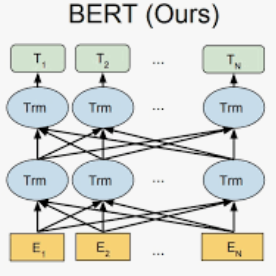

Popular word embedding methods such as GloVe and Word2Vec are related to the factorization of the pointwise mutual information (PMI) matrix. In this paper, we link correspondence analysis (CA) to the factorization of the PMI matrix. CA is a dimensionality reduction method that uses singular value decomposition (SVD), and we show that CA is mathematically close to the weighted factorization of the PMI matrix. In addition, we present two variants of CA that turn out to be successful in the factorization of the word-context matrix: CA applied to a matrix whose entries undergo a square-root transformation (ROOT-CA), and CA applied to a matrix whose entries undergo a root-root transformation (ROOTROOT-CA). Although this study focuses on traditional static word embedding methods, we extend its scope by also comparing these methods with the transformer-based encoder BERT, which produces contextual word embeddings. An empirical comparison of the CA-based methods, the PMI-based methods, and BERT shows that, overall, ROOT-CA and ROOTROOT-CA perform slightly better than the PMI-based methods and are competitive with BERT.
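
To make the link between CA and SVD concrete, the following is a minimal sketch of textbook correspondence analysis on a toy word-context count matrix, not the authors' implementation. The function name `correspondence_analysis`, the `power` parameter, and the toy counts are hypothetical and introduced only for illustration. Reading the root-root transformation as an elementwise fourth root is an assumption, since the abstract does not define it. The connection to PMI can be seen in the standardized residual: it equals `sqrt(r_i * c_j) * (p_ij / (r_i * c_j) - 1)`, and `p_ij / (r_i * c_j) - 1` approximates `log(p_ij / (r_i * c_j))`, the PMI, when the ratio is close to 1.

```python
import numpy as np

def correspondence_analysis(counts, k, power=1.0):
    """Textbook CA via SVD of standardized residuals (illustrative sketch).

    counts: nonnegative word-context matrix with positive row/column sums.
    k:      number of dimensions to keep.
    power:  elementwise exponent applied before CA (hypothetical parameter);
            1.0 gives plain CA, 0.5 a square-root transformation.
    """
    X = np.asarray(counts, dtype=float) ** power
    P = X / X.sum()                      # correspondence matrix
    r = P.sum(axis=1)                    # row masses
    c = P.sum(axis=0)                    # column masses
    expected = np.outer(r, c)            # independence model r_i * c_j
    S = (P - expected) / np.sqrt(expected)   # standardized residuals
    U, s, _ = np.linalg.svd(S, full_matrices=False)
    # Row principal coordinates, used here as word embeddings.
    rows = (U[:, :k] * s[:k]) / np.sqrt(r)[:, None]
    return rows, s[:k]

# Toy 4-word by 4-context co-occurrence counts (made up for illustration).
counts = np.array([[10, 2, 0, 1],
                   [3, 8, 1, 0],
                   [0, 1, 9, 4],
                   [1, 0, 3, 7]])

emb_ca, sv_ca = correspondence_analysis(counts, k=2)               # plain CA
emb_root, sv_root = correspondence_analysis(counts, k=2, power=0.5)    # ROOT-CA style
# Assumption: "root-root" is taken to mean the fourth root of each entry.
emb_rootroot, sv_rr = correspondence_analysis(counts, k=2, power=0.25)
```

Each row of `emb_ca`, `emb_root`, or `emb_rootroot` is a two-dimensional vector for one word. Choices that matter in practice, such as how singular values are weighted in the final embedding, are left out of this sketch.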
